1. Introduction

TATi contains a set of distinct guides aimed at different user audiences. All of them reside in <installation folder>/share/doc/thermodynamicanalyticstoolkit/ in the installation folder.

-

User guide (this document: userguide.html or userguide.pdf)

-

Programmer’s guide (see programmersguide.html or programmersguide.pdf)

-

Reference documentation (see thermodynamicanalyticstoolkit-API-reference.pdf or html/index.html)

-

Roadmap (see roadmap.html or roadmap.pdf)

1.1. Before you start

In the following we assume that you, the reader, has a general familiarity with neural networks. You should know what a classification problem is, what an associated dataset for (supervised) learning needs to contain. You should know about what weights and biases in a neural network are and what the loss function does. You should also have a rough idea of what optimization is and that gradients with respect to the chosen loss function can be obtained through so-called backpropagation.

If you are not familiar with the above terminology, then we recommend an introductory book on neural networks such as [Bishop2006].

1.2. What is ThermodynamicAnalyticsToolkit?

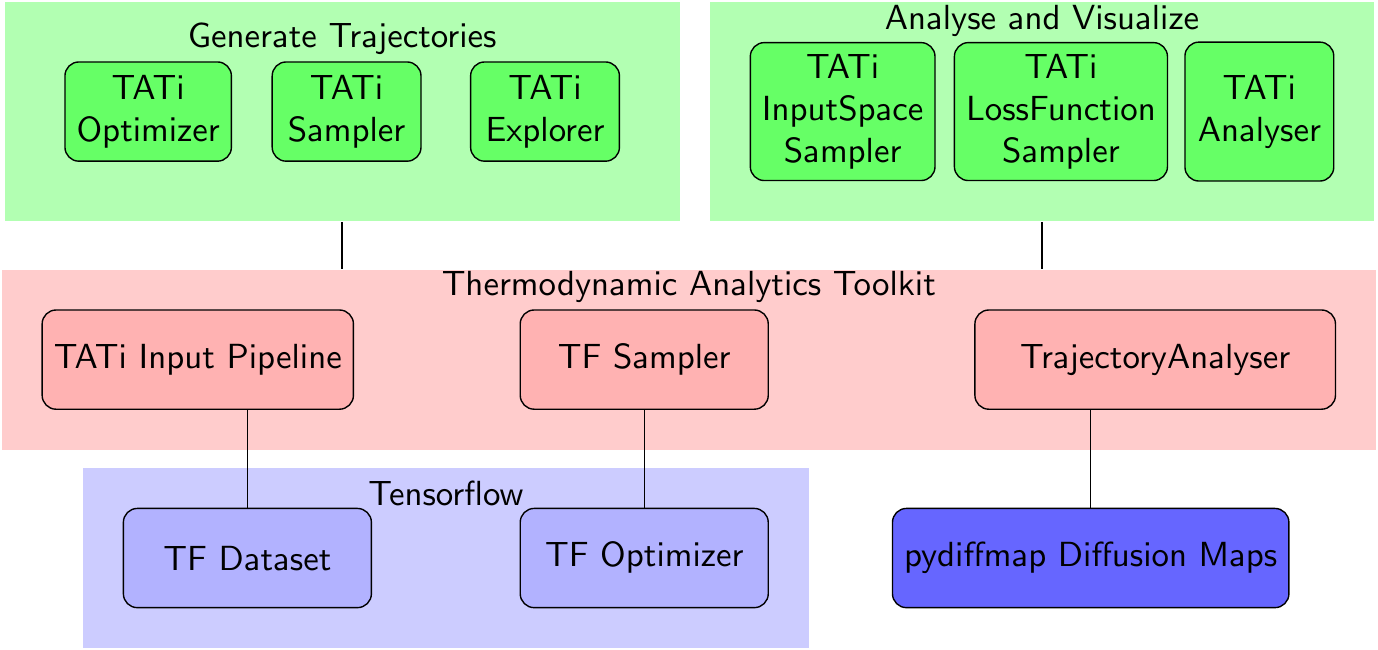

The Thermodynamic Analytics Toolkit allows to perform thermodynamic sampling and analysis of large neural network loss manifolds. It extends Tensorflow by several samplers, by a framework to rapidly prototype new samplers, by the capability to sample several networks in parallel and provides tools for analysis and visualization of loss manifolds, see Figure [introduction.figure.tools_module] for an overview. We rely on the Python programming language as only for that Tensorflow’s interface has an API stability promise.

There are two approaches to using TATi: On the one hand, it is a toolkit consisting of command-line programs such as TATiOptimizer, TATiSampler, TATiLossFunctionSampler, and TATiAnalyzer that, when fed a dataset and given the network specifics, directly allow to optimize and sample the network parameters and analyze the exlored loss manifold, see Figure [introduction.figure.tools_module].

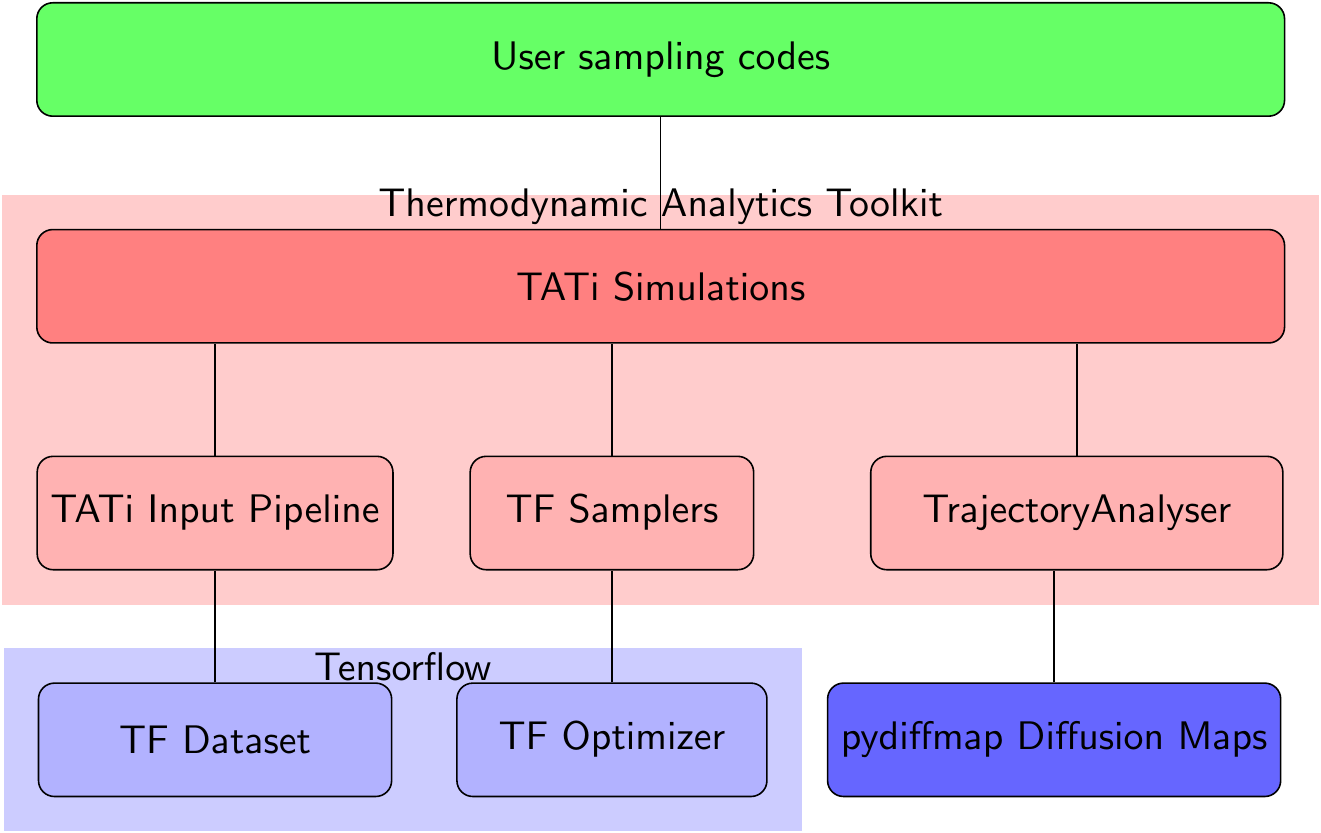

On the other hand, TATi can be readily used inside Python programs by using its modules: simulation, model, analysis. The simulation module, see Figure [introduction.figure.simulation_module], contains a very easy-to-use, high-level interface to neural network modelling, granting full access to the network’s parameters, its gradients, its loss and all other quantities of interest. It is especially practical for rapid prototyping. The module model is its low-level counterpart and allows for more efficient implementations.

Beginning with section [quickstart] we give an introduction to either way of using TATi.

1.3. Installation

In the following we explain the installation procedure to get ThermodynamicAnalyticsToolkit up and running.

The easiest way to tati is through ```` pip install tati ````

If you want to install from a cloned repository or from a release tarball, then read on.

1.3.1. Installation requirements

This program suite is implemented using python3 and the development mainly focused on Linux (development machine used Ubuntu 14.04 up to 18.04). At the moment other operating systems are not supported but may still work.

It has the following non-trivial dependencies:

-

TensorFlow: version 1.4.1 till currently 1.10 supported

Note that these packages can be easily installed using either the repository tool (using some linux derivate such as Ubuntu), e.g.

sudo apt install python3-numpy

or via pip3, i.e.

pip3 install numpy

For acor a few extra changes are required.

pip3 install acor sed -i -e "s#import _acor#import acor._acor as _acor#" <install path>/acor/acor.py

The last command replaces the third line in the file acor/acor.py such that the function acor (and not the module acor) is used.

|

Note

|

acor is only required for the Integrated Autocorrelation Time analysis and may be ignored if this functionality is not required. |

Moreover, the following packages are not ultimately required but examples or tests may depend on them:

-

gawk

The documentation is written in AsciiDoc and doxygen and requires a suitable package to compile to HTML or create a PDF, e.g., using dblatex

-

doxygen

-

asciidoc

-

dblatex

Finally, for the diffusion map analysis we recommend using the pydiffmap package, see https://github.com/DiffusionMapsAcademics/pyDiffMap.

In our setting what typically worked best was to use anaconda in the following manner:

conda create -n tensorflow python=3.5 -y

conda install -n tensorflow -y \

tensorflow numpy scipy pandas scikit-learn matplotlib

In case your machine has GPU hardware for tensorflow, replace “tensorflow” by “tensorflow-gpu”.

|

Note

|

On systems with typical core i7 architecture recompiling tensorflow from source provided only very small runtime gains in our tests which in most cases do not support the extra effort. You may find it necessary for tackling really large networks (>1e6 dofs) and datasets and especially if you desire to use Intel’s MKL library for the CPU-based linear algebra computations. |

|

Tip

|

acor cannot be installed using anaconda (not available). Hence, it needs to be installed using pip for the respective environment. See above for installation instructions. |

Henceforth, we assume that there is a working tensorflow on your system, i.e. inside the python3 shell

import tensorflow as tf a=tf.constant("Hello world") sess=tf.Session() print(sess.run(a))

should print “Hello world” or similar.

|

Tip

|

You can check the version of your tensorflow installation at any time by inspecting print(tf.__version__). Similarly, TATi’s version can be obtained through import TATi print(TATi.__version__) |

1.3.2. Installation procedure

Installation comes in three flavors: as a PyPI package, or through either via a tarball or a cloned repository.

In general, the PyPI (pip) packages are strongly recommended, especially if you only want to use the software.

The tarball releases are recommended if you only plan to use TATi and do not intend ito modify its code. If, however, you need to use a development branch, then you have to clone from the repository.

In general, this package is distributed via autotools, "compiled" and installed via automake. If you are familiar with this set of tools, there should be no problem. If not, please refer to the text INSTALL file that is included in the tarball.

|

Note

|

Only the tarball contains precompiled PDFs of the userguides. The cloned repository contains only the HTML pages. |

1.3.3. From Tarball

Unpack the archive, assuming its suffix is .bz2.

tar -jxvf thermodynamicanalyticstoolkit-${revnumber}.tar.bz2

If the ending is .gz, you need to unpack by

tar -zxvf thermodynamicanalyticstoolkit-${revnumber}.tar.gz

Enter the directory

cd thermodynamicanalyticstoolkit

Continue then in section Configure, make, install.

1.3.4. From cloned repository

While the tarball does not require any autotools packages installed on your system, the cloned repository does. You need the following packages:

-

autotools

-

automake

To prepare code in the working directory, enter

./bootstrap.sh

1.3.5. Configure, make, make install

Next, we recommend to build the toolkit not in the source folder but in an extra folder, e.g., “build64”. In the autotools lingo this is called an out-of-source build. It prevents cluttering of the source folder. Naturally, you may pick any name (and actually any location on your computer) as you see fit.

mkdir build64 cd build64 ../configure --prefix="somepath" -C PYTHON="path to python3" make make install

More importantly, please replace “somepath” and “path to python3” by the desired installation path and the full path to the python3 executable on your system.

|

Note

|

In case of having used anaconda for the installation of required packages, then you need to use $HOME/.conda/envs/tensorflow/bin/python3 for the respective command, where $HOME is your home folder. This assumes that your anaconda environment is named tensorflow as in the example installation steps above. |

|

Note

|

We recommend executing (after make install was run) make -j4 check additionally. This will execute every test on the extensive testsuite and report any errors. None should fail. If all fail, a possible cause might be a not working tensorflow installation. If some fail, please contact the author. The extra argument -j4 instructs make to use four threads in parallel for testing. Use as many as you have cores on your machine. In case you run the testcases on strongly parallel hardware, tests may fail because of cancellation effects during parallel summation. In this case you made degrade the test threshold using the environment variable TATI_TEST_THRESHOLD. If unset, it defaults to 1e-7. |

|

Tip

|

Tests may fail due to numerical inaccuracies due to reduction operations executed in parallel. Tensorflow does not emphasize on determinism but on speed and scaling. Therefore, if your system has many cores or is GPU-assisted, some tests may fail. In this case you can set the environment variable TATI_TEST_THRESHOLD when calling configure. Its default value is 1e-7. For the DGX-1 we found 4e-6 to work. If the threshold need to run all test successfully is much larger than this, you should contact us, see [introduction.feedback]. |

1.4. License

As long as no other license statement is given, ThermodynamicAnalyticsToolkit is free for use under the GNU Public License (GPL) Version 3 (see https://www.gnu.org/licenses/gpl-3.0.en.html for full text).

1.5. Disclaimer

Because the program is licensed free of charge, there is not warranty for the program, to the extent permitted by applicable law. Except when otherwise stated in writing in the copyright holders and/or other parties provide the program "as is" without warranty of any kind, either expressed or implied. Including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. The entire risk as to the quality and performance of the program is with you. Should the program prove defective, you assume the cost of all necessary servicing, repair, or correction.

— section 11 of the GPLv3 license

1.6. Feedback

If you encounter any bugs, errors, or would like to submit feature request, please open an issue at GitHub or write to frederik.heber@gmail.com. The authors are especially thankful for any description of all related events prior to occurrence of the error and auxiliary files. More explicitly, the following information is crucial in enabling assistance:

-

operating system and version, e.g., Ubuntu 16.04

-

Tensorflow version, e.g., TF 1.6

-

TATi version (or respective branch on GitHub), e.g., TATi 0.8

-

steps that lead to the error, possibly with sample Python code

Please mind sensible space restrictions of email attachments.

2. Quickstart

Before we come to actually using TATi, we explain what possible approaches there are to sampling a high-dimensional function such as the loss manifold of neural networks. To this end, we talk about grid-based sampling that however suffers from the Curse of Dimensionality. Moreover, we will discuss Monte Carlo and especially Markov Chain Monte Carlo methods. In the latter category we have what we will call dynamics-based sampling. This approach does not suffer in principle from the Curse of Dimensionality but moreover may have additional savings by looking only at areas of the manifold that have small loss. At the end, want to set the stage with a little example: We will look at a very simple classification task and see how it is solved using neural networks.

2.1. Sampling

Let be given a high-dimensional loss manifold

$L_{D}(\theta) = \sum_{(x_i,y_i) \in {D}} l_\theta(x_i,y_i)$

that depends explicitly on the parameters $\theta \in \Omega \subset \mathrm{R}^N$ of the network with $N$ degrees of freedom and implicitly on a given dataset ${D} = \{x_i,y_i\}$. Furthermore, the loss function $l_\theta$ itself depends implicitly on the prediction $f_\theta(x_i)$ of the network for a given input $x_i$ and fixed parameters $\theta$ and therefore on the chosen network architecture.

If ${D}$ were the set of all possible realisations of the data, then $L_{D}(\theta)$ would measure the so-called generalization error. As typically only a finite set of data is available, it is split into training and test parts, the latter set aside for approximating this error. Furthermore, during training the gradient is often computed on a subset of the training set ${D}$, the mini-batches. In the following we will ignore these details as they are irrelevant to the general procedure described.

In order to deduce information such as the number of minima, the number of saddle points, the typical barrier height between minima and other characteristics to classify the landscape, we need to explore the whole domain $\Omega$. As $L_{D}$ depends on an arbitrary dataset, we do not know anything a-priori apart from the general regularity properties

[As activation functions contained in the loss may be non-smooth, e.g., the rectified linear unit, even this can be not taken as solid grounds.]

and possible boundedness of $l_\theta$.

A very similar task is found in function approximation where an unknown and possibly high-dimensional function $f(x): \mathrm{R}^N \rightarrow \mathrm{R}$ is approximated through a set of point evaluations in conjunction with a basis set. A typical usecase is the numerical integration of this high-dimensional function. Naturally, the quality of the approximation hinges not only on the basis set but even more on the choice of the precise location of point evaluations, the sampling.

Very generally, sampling approaches can be placed into two categories: Grids and sequences. Sequences can be random, deterministic, or both. We briefly discuss them, focusing on their general computational cost in high dimensions.

2.1.1. Grids

Structured grids such as naive full grid approaches, where a fixed number of points with equidistant spacing is used per axis, suffer from the Curse of Dimensionality, a term coined by Bellman. With increasing number of parameters $N$ the computational cost becomes prohibitive. This Curse of Dimensionality is alleviated to some extent by so-called Sparse Grids, see [Bungartz2004], where the quality bounds on the approximation are kept but fewer grid points need to be evaluated. However, they only alleviate the curse to some extent, see [Pflueger2010], and are still infeasible at the moment for the extremely high-dimensional manifolds encountered in neural network losses.

2.1.2. Sequences

Random sequences are Monte Carlo approaches where a specific sequence of random numbers decides which point in the whole space to evaluate. Due to their inherent stochasticity these lack the rigor of the structured grid methods but neither rely on, nor exploit any regularity as the structured grid methods. Therefore, they suffer no Curse of Dimensionality with respect to their convergence rate. The rate is bounded by the Central Limit Theorem to ${O}(n^{-\frac 1 2})$ if $n$ is the number of evaluated points.

Quasi-Monte Carlo (QMC) methods use deterministic sequences, such as Lattice rules (Hammersley, \ldots) or digital nets, and are able to obtain higher convergence rates of ${O}(n^{-1} (\log{n})^d)$ at the price of a moderate dependence on dimension $d$.

In this category of sequences we also have dynamics-based sequences. As examples of Markov Chain Monte Carlo (MCMC) methods, they are closely connected to pure Monte Carlo approaches. They make use of the ergodic property of the chosen dynamics allowing to replace high-dimensional whole-space integrals by single-dimensional time-integrals, where the accuracy depends on the trajectory length.

The chain can be generated through suitable dynamics such as Hamiltonian,

$d\theta= M^{-1} p dt, \quad dp= -\nabla L(\theta) dt$

or Langevin dynamics,

$d\theta= M^{-1} p dt, \quad dp= \Bigl (-\nabla L(\theta) - \gamma p \Bigr ) dt + \sigma M^{\frac 1 2} dW$

for positions (or parameters) $\theta$, momenta $p$, mass matrix $M$, potential (or loss) $L(\theta)$, friction constant $\gamma$ and a stationary normal random process $W$ with variance $\sigma$. Note that Langevin dynamics has both Hamiltonian dynamics and Brownian dynamics as limiting cases of the friction constant $\gamma$ going to zero or infinity, respectively.

There are also hybrid approaches where a purely deterministic sequence is randomized by a Metropolis-Hastings criterion to remove possible bias, such as Hybrid or Hamiltonian Monte Carlo, see [Neal2011]. Note that in the case of Langevin dynamics the stochasticity enters through the random process.

If we consider the function $L_{D}(\theta)$ as a potential energy function, then we may cast this into a probability distribution using the canonical Gibbs distribution $Z \cdot \exp( -\beta L_{D}(\theta))$, where $Z$ is a normalization constant and $\beta$ is the inverse temperature factor. Then, we have sampling in the typical sense in statistics where an unknown distribution is evaluated. We remark that this Gibbs measure is known in the neural networks community through the energy interpretation ([LeCun2006) of a probability distribution in relation to a certain reference energy, given by the temperature.

And indeed, dynamics-based sampling does not aim to approximate $L$ as best as possible but its Gibbs (also called the canonical) distribution $\exp{(-\beta L)}$. In our case we are only interested in particular subsets of the space, namely those associated with a small loss. As we have given ample evidence, the central challenge in sampling is the computational cost. The dynamics-based sequences allow to save computational cost in the high-dimensional spaces by incorporating gradient information. Hence, the more accurately we sample from the Gibbs distribution, the more efficiently we sample only those subsets of interest. This saving could not be obtained with a pure Monte Carlo approach.

Therefore, we focus on dynamics-based sampling for this high-dimensional exploration problem.

2.1.3. Integrated Autocorrelation Time

In principle, the underlying challenge is to assure that the sampling trajectory covers a sufficient area of the whole space for validity. However, stating when to terminate is as difficult as the exploration task itself. Hence, the best measure is to assess when a new independent state has been obtained.

When inspecting sampled MCMC trajectories, we need to assess how many consecutive steps it takes to get from one independent state to another. This is estimated by the Integrated Autocorrelation Time (IAT) $\tau_s$. For any observable $A$, we have that its variance generally behaves as $var_{A} = \frac {var_{\pi} (\varphi(X))}{T_s/\tau_s}$, where $\pi$ is the target density, $\varphi(X)$ is the function of interest of the random variable $X$, and $T_s$ is the sampling time, see [Goodman2010]. Obviously, when time steps are discrete and $\tau_s$ is measured in number of steps, then $\tau_s = 1$ is highly desirable, i. e.~immediately stepping from one independent state to the next. The IAT $\tau_s$ is defined as

$\tau_s = \sum^{\infty}_{-\infty} \frac{ C_s(t)} {C_s(0)} \quad {with} \quad C_s(t) = \lim_{t' \rightarrow \infty} cov[\varphi \bigl (X(t'+t) \bigr ), \varphi \bigl (X(t) \bigr)$].

The above holds also for sampling approaches based on Langevin Dynamics. There, we may use the IAT to gauge the exploration speed for each sampled trajectory $X(t)$.

2.1.4. Example: Sampling of a Perceptron

Let us give a trivial example to illustrate the above with a few figures. We want to highlight in the following the key aspect about the dynamics-based sampling approach, namely sampling the probability distribution function associated with the Gibbs measure and not the loss manifold directly.

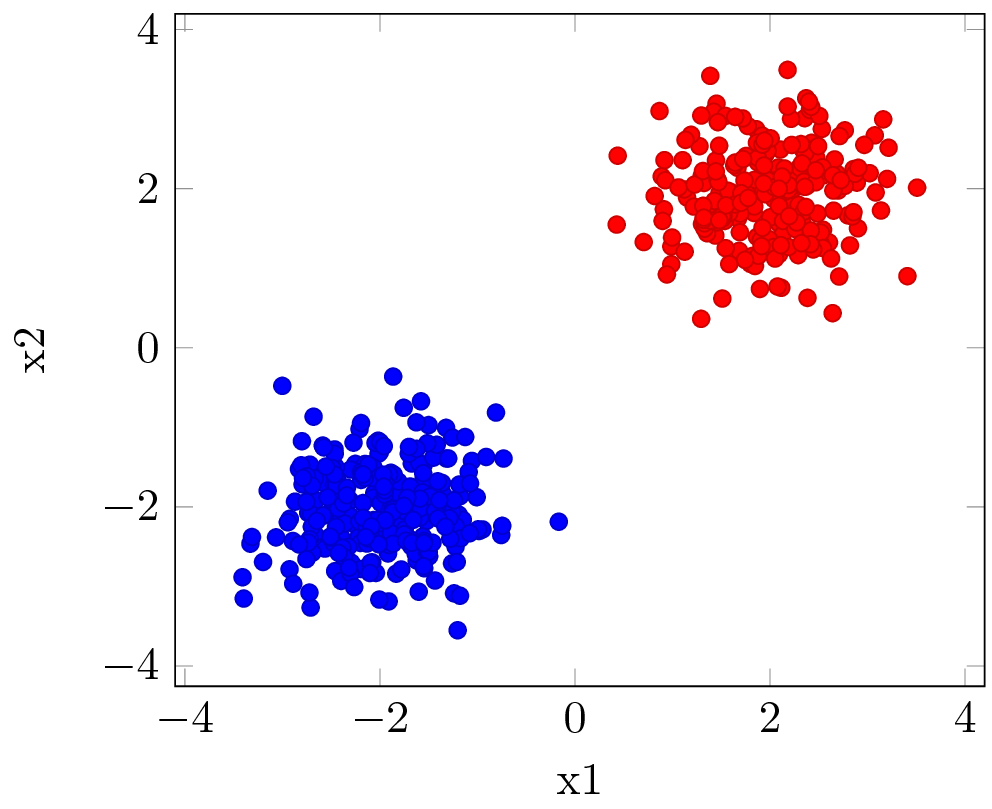

Assume we are given a very simple data set as depicted in Dataset. The goal is to classify all red and blue dots into two different classes. This problem is quite simple to solve: a line in the two-dimensional space can easily separate the two classes.

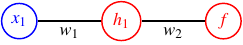

A very simple neural network, a perceptron: it would use one input node, either of the coordinate, $x_{1}$ or $x_{2}$, and a single output node with an activation function $f$ whose sign gives the class the input item belongs to. The network is given in Network. The network is chosen non-ideal by design to illustrate a point.

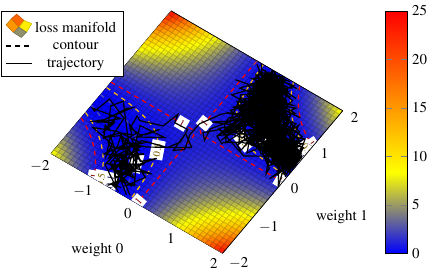

In Figure Loss manifold we then turn to the two-dimensional loss landscape depending on the two weights. In this very low-dimensional case we turn to the "naive grid" approach and partition each axis equidistantly. We see two minima basins both of hyperbole or "banana" shape. Here, we see that there is not a single minima but two of them. This is caused by the deliberate permutation symmetry of the two weights in the network.

Assume we additionally perform dynamics-based sampling. In the figure the resulting trajectory is given as squiggly black line. Here, we have chosen such an (inverse) temperature value such that it is able to pass the potential barrier and reach the other minima basin.

As we clearly see, the grid-based approach does not distinguish between the areas of high loss and the areas of low loss. Note that the coloring comes from a spline interpolation from the grid points. The dynamics-based trajectory on the other hand remains in areas of low loss all the time, where "low" is relative to its inverse temperature parameter. This is because the areas of low loss have a much higher probability in the Gibbs measure which our sampler is faithful to.

This quick description of the sampling loss manifolds in the context of neural networks in data science should have acquainted we with some of the concepts underlying the idea of sampling.

2.2. Using module simulation

The simulation module has been designed explicitly for ease-of-use. Typically, everything is achieved through two or three commands: One to setup TATi by handing it a dash of options, then calling a function to fit() or sample(). In the very end of this quickstart we will learn how to implement our first sampler using this interface.

For a more extensive description of the simulation module, we refer to its reference section.

If we have installed the TATi package in the folder /foo, i.e. we have a folder TATi with a file simulation.py residing in there, then we probably need to add it to the PYTHONPATH as follows

PYTHONPATH=/foo python3

In this shell, we may import the sampling part of the package as follows

import TATi.simulation as tati

This will import the Simulation interface class as the shortcut tati from the file mentioned before. This class contains a set of convenience functions that hides all the complexity of setting up of input pipelines and networks. Accessing the loss function, gradients and alike or training and sampling can be done in just a few keystrokes.

In order to make our own python scripts executable and know about the correct (possibly non-standard) path to ThermodynamicAnalyticsToolkit, place the following two lines at the very beginning of the script:

import sys sys.path.insert(1,"<path_to_TATi>/lib/python3.5/site-packages/")

where <path_to_TATi> needs to be replaced by our specific installation path and python3.5 needs to be replaced if we are using a different python version. However, for the examples in this quickstart tutorial it is not necessary if we use PYTHONPATH.

2.2.1. Notation

In the following, we will use the following notation:

-

dataset: $D = \{X,Y\}$ with features $X=\{x_d\}$ and labels $Y=\{y_d\}$

-

batch of the dataset: $D_i=\{X_i, Y_i\}$

-

network parameters: $w=\{w_1, \ldots, w_M\}$

-

momenta of network parameters: $p=\{p_1, \ldots, p_M\}$

-

neural network function: $F_w(x)$

-

loss function: $L_D(w) = \sum_i l(F_w(x_i), y_i)$ with a loss $l(x,y)$

-

gradients: $\nabla_w L_D(w)$

-

Hessians: $H_{ij} = \partial_{w_i} \partial_{w_j} L_D(w)$

2.2.2. Instantiating TATi

The first thing in all the following example we will do is instantiate the tati class.

import TATi.simulation as tati nn = tati( # comma-separated list of options )

Although it is the simulation module, we "nickname" it tati in the following and hence will simply refer to this instance as tati.

This class takes a list of options in its construction or __init__() call. These options inform it about the dataset to use, the specific network topology, what sampler or optimizer to use and its parameters and so on.

To see how this works, we will first need a dataset to work on.

|

Note

|

All of the examples below can also be found in the folders doc/userguide/python, doc/userguide/simulation, and doc/userguide/simulation/complex. |

Help on Options

tati has quite a number of options that control its behavior. we can request help to a specific option. Let us inspect the help for batch_data_files:

>>> from TATi.simulation as tati >>> tati.help("batch_data_files") Option name: batch_data_files Description: set of files to read input from Type : list of <class 'str'> Default : []

This will print a description, give the default value and expected type.

Moreover, in case we have forgotten the name of one of the options.

>>> from TATi.simulation as tati >>> tati.help() averages_file: CSV file name to write ensemble averages information such as average kinetic, potential, virial batch_data_file_type: type of the files to read input from <remainder omitted>

This will print a general help listing all available options.

Use get_options() to get a dict of all options or to request the currently set value of a specific option. Moreover, use set_options() to modify them.

import TATi.simulation as tati nn = tati( verbose=1, ) print(nn.get_options()) nn.set_options(verbose=2) print(nn.get_options("verbose"))

2.2.3. Setup

In the following we will first be creating a dataset to work on. This example code will be the most extensive one. All following ones are rather short and straight-forward.

Preparing a dataset

Therefore, let us prepare the dataset, see the Figure Dataset, for our following experiments.

At the moment, datasets are parsed from Comma Separated Values (CSV) or Tensorflow’s own TFRecord files or can be provided in-memory from numpy arrays. In order for the following examples on optimization and sampling to work, we need such a data file containing features and labels.

TATi provides a few simple dataset generators contained in the class ClassificationDatasets.

One option therefore is to use the TATiDatasetWriter that provides access to ClassificationDatasets, see Writing a dataset. However, we can do the same using python as well. This should give us an idea that we are not constrained to the simulation part of the Python interface, see the reference on the general Python interface where we go through the same examples without importing simulation.

from TATi.datasets.classificationdatasets \ import ClassificationDatasets as DatasetGenerator from TATi.common import data_numpy_to_csv import numpy as np # fix random seed for reproducibility np.random.seed(426) # generate test dataset: two clusters dataset_generator = DatasetGenerator() xs, ys = dataset_generator.generate( dimension=500, noise=0.01, data_type=dataset_generator.TWOCLUSTERS) # always shuffle data set is good practice randomize = np.arange(len(xs)) np.random.shuffle(randomize) xs[:] = np.array(xs)[randomize] ys[:] = np.array(ys)[randomize] # call helper to write as properly formatted CSV data_numpy_to_csv(xs,ys, "dataset-twoclusters.csv")

|

Warning

|

The labels need to be integer values. Importing will fail if they are not. |

After importing some modules we first fix the numpy seed to 426 in order to get the same items reproducibly. Then, we first create 500 items using the ClassificationDatasets class from the TWOCLUSTERS dataset with a random perturbation of relative 0.01 magnitude. We shuffle the dataset as the generators typically create first items of one label class and then items of the other label class. This is not needed here as our batch_size will equal the dataset size but it is good practice generally.

|

Note

|

The class ClassificationDatasets mimicks the dataset examples that can also be found on the Tensorflow playground. |

Afterwards, we write the dataset to a simple CSV file with columns "x1", "x2", and "label1" using a helper function contained in the TATi.common module.

|

Caution

|

The file dataset-twoclusters.csv is used in the following examples, so keep it around. |

This is the very simple dataset we want to learn, sample from and exlore in the following.

Setting up the network

Let’s first create a neural network. At the moment of writing TATi is constrained to multi-layer perceptrons but this will soon be extended to convolutional and other networks.

Multi-layer perceptrons are characterized by the number of layers, the number of nodes per layer and the output function used in each node.

import TATi.simulation as tati # prepare parameters nn = tati( batch_data_files=["dataset-twoclusters.csv"], hidden_dimension=[8, 8], hidden_activation="relu", input_dimension=2, loss="mean_squared", output_activation="linear", output_dimension=1, seed=427, ) print(nn.num_parameters())

In the above example, we specify a neural network of two hidden layers, each having 8 nodes. We use the "rectified linear" activation function for these nodes. The output nodes are activated by a linear function. At the end, we print the number of parameters, i.e. $M=105$ for the set of parameters $w=\{w_1, \ldots, w_M\}$.

The network’s weights are initialized randomly in the interval [-0.5,0.5] and the biases are set to 0.1 (small, non-zero values).

Let us briefly highlight the essential options (a full and up-to-date list is given in the API reference in the class PythonOptions):

-

input_columns: This option allows to add an additional layer after the input that selects a subset of the input nodes and additionally modifies them, e.g., by passing through a sine function. Example: input_columns=["sin(x1), x2^2"]

-

input_dimension: This is the number of input nodes of the network, one node per dimension of the supplied dataset. Example input_dimension=10

-

output_activation: Defines the activation function for the output layer. Example: output_activation="sigmoid"

-

output_dimension: Sets the number of output nodes. Example: output_dimension=1

-

hidden_activation: Defines the common activation function for all hidden layers. Example: hidden_activation="relu6"

-

hidden_dimension: Gives the hidden layers and the nodes per layer by giving a list of integers. Example: hidden_dimension=[2,2] defines two hidden layers, each with 2 nodes.

-

loss: Sets the loss function $l(x,y)$ to use. Example: loss="softmax_cross_entropy"

A complete set of all actications functions can be obtained.

import TATi.simulation as tati print(tati.get_activations())

Similar, there is a also a list of all available loss functions.

import TATi.simulation as tati print(tati.get_losses())

|

Note

|

At the moment it is not possible to set different activation functions for individual nodes or between hidden layers. |

|

Note

|

Note that (re-)creating the tati instance will always reset the computational graph of tensorflow in case we need to add nodes. |

Freezing network parameters

Sometimes it might be desirable to freeze parameters during training or sampling. This can be done as follows:

import TATi.simulation as tati nn = tati( batch_data_files=["dataset-twoclusters.csv"], fix_parameters="output/biases/Variable:0=2.", hidden_dimension=[8, 8], hidden_activation="relu", input_dimension=2, loss="mean_squared", output_activation="linear", output_dimension=1, seed=427, ) print(nn.num_parameters())

This is same code as before when setting up the network the only exception is the additional option fix_parameters.

Note that we give the parameter’s name in full tensorflow namescope: "output" for the network layer, "biases" for the weights ("weights" alternatively) and "Variable:0" is fixed (as it is the only one). This is followed by a comma-separated list of values, one for each component.

|

Note

|

Single values cannot be frozen but only entire weight matrices or bias vectors per layer at the moment. As each component has to be listed, at the moment this is not suitable for large vectors. |

2.2.4. Evaluating loss and gradients

Having created the dataset and explained how the network is set up, we know see how to evaluate the loss and the gradients.

The main idea of the simulation module is to be used as a simplified interface to access the loss and the gradients of the neural network without having to know about the internal of the neural network. In other words, we want to treat it as an abstract high-dimensional function, depending implicitly on the weights and explicitly on the dataset. To this end, the weights and biases are represented as one linearized vector. Moreover, we have another abstract high-dimensional function, the loss that depends explicitly on the weights and implicitly on the dataset, whose derivative (the gradients with respect to the parameters) is available as a numpy array, see also the section Notation.

In the following example we set up a simple fully-connected hidden network and evaluates loss and then the associated gradients.

import TATi.simulation as tati import numpy as np # prepare parameters nn = tati( batch_data_files=["dataset-twoclusters.csv"], batch_size=10, output_activation="linear" ) # assign parameters of NN nn.parameters = np.zeros([nn.num_parameters()]) # simply evaluate loss print(nn.loss()) # also evaluate gradients (from same batch) print(nn.gradients())

Again, we set up the network as before, here it is a single-layer perceptron as the default value for hidden_dimension is 0. Next, we evaluate the loss $L_D(w)$ using nn.loss() and the gradients $\nabla_w L_D(w)$ using nn.gradients(), returning a vector with the gradient per degree of freedom of the network.

|

Note

|

Under the hood it is a bit more complicated: loss and gradients are inherently connected. If batch_size is chosen smaller than the dataset dimension, naive evaluation of first loss and then gradients in two separate function calls would cause them to be evaluated on different batches. Depending on the size of the batch, the gradients will then not belong the to the respective loss evaluation and vice versa. Therefore, loss, accuracy, gradients, and hessians $H_{ij}$ (if do_hessians is True) are cached. Only when one of them is evaluated for the second time (e.g., inside the loop body on the next iteration), then the next batch is used. This makes sure that calling either loss() first and then gradients() or the other way round yields the same values connected to the same dataset batch. In essence, just don’t worry about it! |

As we see in the above example, tati forms the general interface class that contains the network along with the dataset and everything in its internal state.

This is basically all the access we need in order to use our own optimization, sampling, or exploration methods in the context of neural networks in a high-level, abstract way.

2.2.5. Optimizing the network

Let us then start with optimizing the network, i.e. learning the data.

import TATi.simulation as tati import numpy as np nn = tati( batch_data_files=["dataset-twoclusters.csv"], batch_size=500, learning_rate=3e-2, max_steps=1000, optimizer="GradientDescent", output_activation="linear", seed=426, ) training_data = nn.fit() print("Train results") print(training_data.run_info[-10:]) print(training_data.trajectory[-10:]) print(training_data.averages[-10:])

Again all options are set in the init call to the interface. These options control how the optimization is performed, what kind of network is created, how often values are stored, and so on. Next, we call nn.fit() to perform the actual training for the chosen number of training steps (max_steps). We obtain a single return structure within which we find three pandas dataframes: run info, trajectory, and averages. Of each we print the last ten values.

Let us quickly go through each of the new parameters:

-

batch_size

sets the subset size of the data set looked at per training step, $|D_i|=|\{X_i, Y_i\}|$, if smaller than dimension, then we add stochasticity/noise to the training but for the advantage of smaller runtime.

-

learning_rate

defines the scaling of the gradients in each training step, i.e. the learning rate. Values too large may miss the minimum, values too small need longer to reach it. For automatic picking of the learning_rate, use BarzilaiBorweinGradientDescent. This however is not compatible with mini-batching at the moment as it requires exact gradients.

-

max_steps

gives the amount of training steps to be performed.

-

optimizer

defines the method to use for training. Here, we use Gradient Descent (in case batch_size is smaller than dimension, then we actually have Stochastic Gradient Descent). Alternatively, you BarzilaiBorweinGradientDescent is also available. There the secant equation is solved in a minimal l2 error fashion which allows to automatically determine a suitable step width. This learning_rate is then only used for the initial guess and as a fall-back.

-

seed

sets the seed of the random number generator. We will still have full randomness but in a deterministic manner, i.e. calling the same procedure again will bring up the exactly same values.

|

Tip

|

In case we need to change these options elsewhere in our python code, use set_options(). |

|

Warning

|

set_options() may need to reinitialize certain parts of tati s internal state depending on what options we choose to reset. Keep in mind that modifying the network will reinitialize all its parameters and other possible side-effects. See simulation._affected_map in src/TATi/simulation.py for an up-to-date list of what options affects what part of the state. |

For these small networks the option do_hessians might be useful which will compute the hessian matrix at the end of the trajectory and use the largest eigenvalue to compute the optimal step width. This will add nodes to the underlying computational graph for computing the components of the hessian matrix. However, we will not do so here.

|

Caution

|

The creation of these hessian evaluation nodes (not speaking of their evaluation) is a $O(N^2)$ process in the number of parameters of the network N. Hence, this should only be done for small networks and on purpose. |

After the options have been provided, the network is initialized internally and automatically, we then call fit() which performs the training and returns a structure containing runtime info, trajectory, and averages as a pandas DataFrame.

In the following section on sampling we will explain what each of these three dataframes contains exactly.

|

Tip

|

In case more output of what is actually going on in each training step is needed, set verbose=1 or even verbose=2 in the options when constructing tati(). |

Let us have a quick glance at the decrease of the loss function over the steps by using matplotlib. In other words, let us look at how effective the training has been.

import pandas as pd import numpy as np import matplotlib # use agg as backend to allow command-line use as well matplotlib.use("agg") import matplotlib.pyplot as plt df_run = pd.read_csv("run.csv", sep=',', header=0) run=np.asarray(df_run.loc[:,\ ['step','loss','kinetic_energy', 'total_energy']]) plt.scatter(run[:,0], run[:,1]) plt.savefig('loss-step.png', bbox_inches='tight') #plt.show()

The loss per step is contained in both the run info and the trajectory dataframe in the column loss.

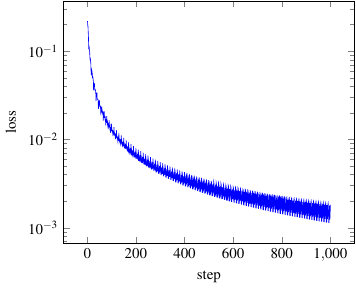

The graph should look similar to the one obtained with pgfplots here (see https://sourceforge.net/pgfplots).

As we see the loss has decreased quite quickly down to 1e-3. Go and have a look at the other columns such as accuracy. Or try to visualize the change in the parameters (weights and biases) in the trajectories dataframe. See 10 Minutes to pandas if we are unfamiliar with the pandas module, yet.

Obviously, we did not use a different dataset set for testing the effectiveness of the training which should commonly be done. This way we cannot check whether we have overfitted or not. However, our example is trivial by design and the network too small to be prone to overfitting this dataset.

Nonetheless, we show how to supply a different dataset and evaluate loss and accuracy on it.

Provide our own dataset

We can directly supply our own dataset, e.g., from a numpy array residing in memory. See the following example where we do not generate the data but parse them from a CSV file instead of using the pandas module.

import TATi.simulation as tati import numpy as np import pandas as pd nn = tati( output_activation="linear", ) # e.g. parse dataset from CSV file into pandas frame input_dimension = 2 output_dimension = 1 parsed_csv = pd.read_csv("dataset-twoclusters-test.csv", \ sep=',', header=0) # extract feature and label columns as numpy arrays features = np.asarray(\ parsed_csv.iloc[:, 0:input_dimension]) labels = np.asarray(\ parsed_csv.iloc[:, \ input_dimension:input_dimension \ + output_dimension]) # supply dataset (this creates the input layer) nn.dataset = [features, labels] # this has created the network, now set # parameters obtained from optimization run nn.parameters = np.array([2.42835492e-01, 2.40057245e-01, \ 2.66429665e-03]) # evaluate loss and accuracy print("Loss: "+str(nn.loss())) print("Accuracy: "+str(nn.score()))

The major difference is that batch_data_files in tati() is now empty and instead we simply later assign nn.dataset a numpy array to use. Note that we could also have supplied it directly with the filename dataset-twoclusters.csv, i.e. nn.dataset = "dataset-twoclusters.csv". In this example we have parsed the same file as the in the previous section into a numpy array using the pandas module. Natually, this is just one way of creating a suitable numpy array.

At the end we have stated loss() and the score(). While the loss is simply the output of the training function, the score gives the accuracy in a classification problem setting: We compare the label given in the dataset with the label predicted by the network and take the average over the whole dataset (or its mini-batch if batch_size is used). For multi-labels, we use the largest entry as the label in multi classification.

|

Note

|

Input and output dimensions are directly deduced from the the tuple sizes. |

|

Note

|

The nodes in the input layer can be modified using input_columns, e.g., input_columns=["x1", "sin(x2)", "x1^2"]. |

2.2.6. Sampling the network

Typically, as preparation to a sampling run, one would optimize or equilibrate the initially random positions first. This might be considered a specific way of initializing parameters.

|

Note

|

Statistical background

In general, when sampling from a distribution (to compute empirical averages for example), one wants to start close to equilibrium, i.e. from states which are of high probability with respect to the target distribution (therefore the minima of the loss). The initial optimization procedure is therefore a first guess to find such states, or at least to get close to them. |

However, let us first ignore this good practice for a moment and simply look at sampling from a random initial place on the loss manifold. We will come back to it later on.

import TATi.simulation as tati import numpy as np nn = tati( batch_data_files=["dataset-twoclusters.csv"], batch_size=500, max_steps=1000, output_activation="linear", sampler="GeometricLangevinAlgorithm_2ndOrder", seed=426, step_width=1e-2 ) sampling_data = nn.sample() print("Sample results") print(np.asarray(sampling_data.run_info[0:10])) print(np.asarray(sampling_data.trajectory[0:10])) print(np.asarray(sampling_data.averages[0:10]))

Here, the sampler setting takes the place of the optimizer before as it states which sampling scheme to use. See [reference.samplers] for a complete list and their parameter names. Apart from that the example code is very much the same as in the example involving fit().

|

Note

|

In the context of sampling we use step_width in place of learning_rate. |

Again, we produce a single data structure that contains three data frames: run info, trajectory, and averages. Trajectories contains among others all parameter degrees of freedom $w=\{w_1, \ldots, w_M\}$ for each step (or every_nth step). Run info contains loss, accuracy, norm of gradient, norm of noise and others, again for each step. Finally, in averages we compute running averages over the trajectory such as average (ensemble) loss, averag kinetic energy, average virial, see general concepts.

Take a peep at sampling_data.run_info.columns to see all columns in the run info dataframe (and similarly for the others.)

For the running averages it is advisable to skip some initial ateps (burn_in_steps) to allow for some burn in time, i.e. for kinetic energies to adjust from initially zero momenta.

Some columns in averages and in run info depend on whether the sampler provides the specific quantity, e.g. SGLD does not have momentum, hence there will be no average kinetic energy.

Using a prior

We may add a prior to the sampling. At the current state two kinds of priors are available: wall-repelling and tethering.

The options prior_upper_boundary and prior_lower_boundary give the admitted interval per parameter. Within a relative distance of 0.01 (with respect to length of domain and only in that small region next to the specified boundary) an additional force acts upon the particles to drives them back into the desired domain. Its magnitude increases with distance to the covered inside the boundary region. The distance is taken to the poour of prior_power. The force is scaled by prior_factor.

In detail, the prior consists of an extra force added to the time integration within each sampler. We compute its magnitude as

where w is the position of the particle, a is the prior_factor, $\pi$ is the position of the boundary (prior_upper_boundary $\pi_{ub}$ or prior_lower_boundary $\pi_{lb}$), and n is the prior_power. Finally, the force is only in effect within a distance of $\tau = 0.01 \cdot || \pi_{ub} - \pi_{lb} ||$ to either boundary by virtue of the Heaviside function $\Theta()$. Note that the direction of the force is such that it always points back into the desired domain.

If upper and lower boundary coincide, then we have the case of tethering, where all parameters are pulled inward to the same point.

At the moment applying prior on just a subset of particles is not supported.

|

Note

|

The prior force is acting directly on the variables. It does not modify momentum. Moreover, it is a force! In other words, it depends on step width. If the step width is too large and if the repelling force increases too steeply close to the walls with respect to the normal dynamics of the system, it may blow up. On the other hand, if it is too weak, then particles may even escape. |

First optimize, then sample

As we have already alluded to before, optimizing before sampling is the recommended procedure. In the following example, we concatenate the two. To this end, we might need to modify some of the options in between. Let us have a look, however with a slight twist.

The dataset shown in Figure Dataset can be even learned by a simpler network: only one of the input nodes is actually needed because of the symmetry.

Hence, we look at such a network by using input_columns to only use input column "x1" although the dataset contains both "x1" and "x2".

Moreover, we will add a hidden layer with a single node and thus obtain a network as depicted in Figure Network. We add this hidden node to make the loss manifold a little bit more interesting.

Additionally, we fix the biases to 0 for both the hidden layer bias and the output bias. Effectively, we have two degrees of freedom left. This is not strictly necessary but allows to plot all degrees of freedom at once.

Finally, we add a prior.

import TATi.simulation as tati import numpy as np nn = tati( batch_data_files=["dataset-twoclusters.csv"], batch_size=500, every_nth=100, fix_parameters="layer1/biases/Variable:0=0.;output/biases/Variable:0=0.", hidden_dimension=[1], input_columns=["x1"], learning_rate=1e-2, max_steps=100, optimizer="GradientDescent", output_activation="linear", sampler = "BAOAB", prior_factor=2., prior_lower_boundary=-2., prior_power=2., prior_upper_boundary=2., seed=428, step_width=1e-2, trajectory_file="trajectory.csv", ) training_data = nn.fit() nn.set_options( friction_constant = 10., inverse_temperature = .2, max_steps = 5000, ) sampling_data = nn.sample() print("Sample results") print(sampling_data.run_info[0:10]) print(sampling_data.trajectory[0:10])

|

Note

|

Setting every_nth large enough is essential when playing around with small networks and datsets as otherwise time spent writing files and adding values to arrays will dominate the actual neural network computations by far. |

As we see, some more options have popped up in the __init__() of the simulation interface: fix_parameters is explained in section [quickstart.simulation.setup.freezing_parameters], hidden_dimension which is a list of the number of hidden nodes per layer, input_columns which contains a list of strings, each giving the name of an input dimension (indexing starts at 1), and all sorts of prior_… that define a wall-repelling prior, again see for details. This will keep parameter values within the interval of [-2,2]. Last but not least, trajectory_file writes all parameters per every_nth step to this file.

Then, we call nn.fit() to perform the training as before.

Next, we need to change the number of steps, set a sampling step width and add the sampler (which might depend on additional parameters, see [reference.samplers] ). This is done by calling nn.set_options().

Having set the stage for the sampling, we commence it by nn.sample().

At the very end we again obtain the data structure containing the pandas DataFrame containing runtime information, trajectory, and averages as its member variables.

|

Warning

|

This time we need the trajectory file for the upcoming analysis. Hence, we write it to a file using the trajectory_file option. Keep the file around as it is needed in the following. |

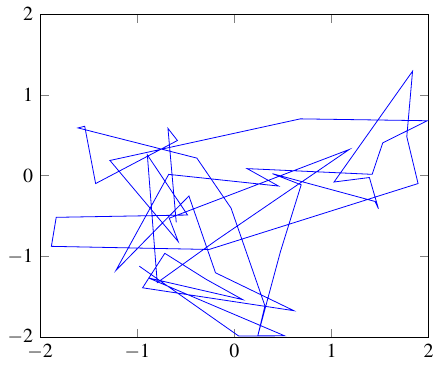

Let us take a look at the two degrees of freedom of the network, namely the two weights, where we plot one against the other similarly to the Sampled weights before.

First of all, take note that the prior (given prior_force is strong enough with respect to the chosen inverse_temperature) indeed retains both parameters within the interval [-2,2] as requested.

Compare this to the Figure Loss manifold. we will notice that this trajectory (due to the large enough temperature) has also jumped over the ridge around the origin.

|

Note

|

To bound the runtime of this example, we have set the parameters such that we obtain a good example of a barrier-jumping trajectory. The original values from the introduction are obtained when we reduce the inverse_temperature to 4. and increase max_steps to 20000 (or even more) if we do not mind waiting a minute or two for the sampling to execute. |

2.2.7. Analysing trajectories

Analysis involves parsing in run and trajectory files that we have written during optimization and sampling runs. Naturally, we could also perform this on the pandas dataframes directly, i.e. sampling and analysis in the same python script. However, for completeness we will read from files in the examples of this section.

The analysis functionality has been split into specific operations such as computing averages, computing the covariance matrix or generating a diffusion map analysis. See the source folder src/TAT/analysis, for all contained modules therein each represent such an operation.

In the following we will highlight just a few of them.

All these analysis operations are also possible through TATiAnalyser, see tools/TATiAnalyser.in in the repository.

Average parameters

from TATi.analysis.parsedtrajectory import ParsedTrajectory from TATi.analysis.averagetrajectorywriter import AverageTrajectoryWriter trajectory = ParsedTrajectory("trajectory.csv") avg = AverageTrajectoryWriter(trajectory) steps = trajectory.get_steps() print(avg.average_params) print(avg.variance_params)

We use the helper class ParsedTrajectory which takes a trajectory file and heeds additional options such as neglecting burn in steps or taking only every_nth step into account. Next, we instantiate the AverageTrajectoryWriter module. It computes the averages and variance of each parameter, whose result we print.

AverageTrajectoryWriter can also write the values to a file. Then, two rows are written (together with a header line) in CSV format. The first row (step 0) represents the averages while the second row (step 1) represents the variance of each parameter.

The loss column is the average over all loss values. If an inverse_temperature has been given, then it is the ensemble average, i.e. each loss (and parameter set) is weighted not equivalently but by $exp(-\beta L)$.

In general, taking such an average is only useful if the trajectory has remained essentially within a single minimum. If the loss manifold has the overall shape of a large funnel with lots of local minima at the bottom, this may be feasible as well.

|

Note

|

Averages depend crucially on the number of steps we average over. I.e. the more points we throw away (option every_nth), the less accurate it becomes. In other words, if large accuracy is required, the averages data frame (if it contains the value of interest) is a better place to look for. |

Covariance

The covariance matrix of the trajectory gives the joint variability of any two of its components. Its eigenvalues give a notion the magnitude between directions with large gradients and between directions with small gradients.

In sampling this is important as directions with small gradients take longer to be explored, see the concept of Integrated Autocorrelation Time (IAT) in section [quickstart.sampling.iat].

|

Note

|

If we look at the covariance of a distribution, we essentially replace it by a Gaussian mixture model defined by this covariance matrix. |

Let us compute the covariance using the analysis module Covariance.

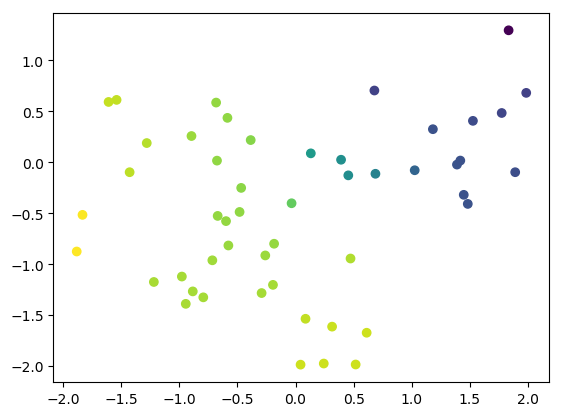

import numpy as np import matplotlib # use agg as backend to allow command-line use as well matplotlib.use("agg") import matplotlib.pyplot as plt from TATi.analysis.parsedtrajectory import ParsedTrajectory from TATi.analysis.covariance import Covariance trajectory = ParsedTrajectory("trajectory.csv") num_eigenvalues=2 cov = Covariance(trajectory) cov.compute( \ number_eigenvalues=num_eigenvalues) # plot in eigenvector directions x = np.matmul(trajectory.get_trajectory(), cov.vectors[:,0]) y = np.matmul(trajectory.get_trajectory(), cov.vectors[:,1]) plt.scatter(x,y, marker='o', c=-x) plt.savefig('covariance.png', bbox_inches='tight') #plt.show()

Note the helper class ParsedTrajectory which takes a trajectory file and heeds additional options such as neglecting burn in steps or taking only every_nth step into account. This instance is handed to the Covariance class whose compute() function performs the actual analysis. Typicallay, all analysis operations have such a compute() function. Again, we plot the result.

We have depicted the weights in the direction of each eigenvector. Essentially, we get a rotated view of the trajectory in [quickstart.simulation.analysis.optimize_sample.weights] where the x direction represents the dominant change.

Diffusion Map

Diffusion maps are a technique for unsupervised learning introduced by [Coifman2006].

Diffusion maps is a dimension reduction technique that can be used to discover low dimensional structure in high dimensional data. It assumes that the data points, which are given as points in a high dimensional metric space, actually live on a lower dimensional structure. To uncover this structure, diffusion maps builds a neighborhood graph on the data based on the distances between nearby points. Then a graph Laplacian L is constructed on the neighborhood graph. Many variants exist that approximate different differential operators.

— pydiffmap

pydiffmap is an excellent Python package that performs the analysis which consists of computing the eigendecomposition of a sparse neighborhood graph where the Euclidean metric is used as distance measure. If it is installed (using pip), it is used for this type of analysis (use method=pydiffmap in this case).

In a nutshell, the eigenvectors of the diffusion map kernel give us the main directions on our trajectory. They represent collective variables learned from the trajectory.

Let us take a look at the eigenvectors from our trajectory that we have sampled just before.

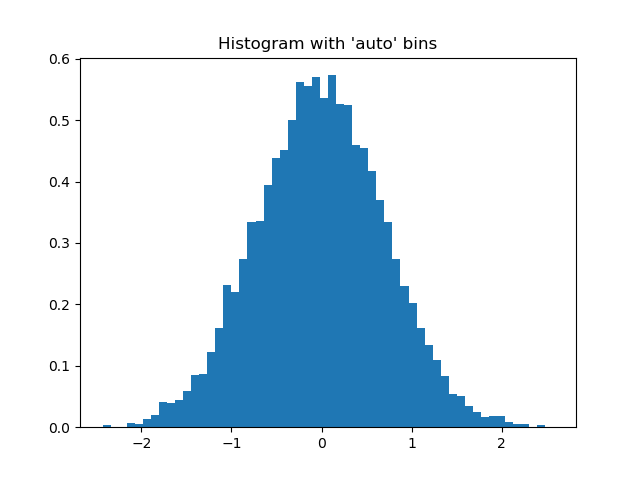

import matplotlib # use agg as backend to allow command-line use as well matplotlib.use("agg") import matplotlib.pyplot as plt from TATi.analysis.parsedtrajectory import ParsedTrajectory from TATi.analysis.diffusionmap import DiffusionMap # option values coming from the sampling inverse_temperature=2e-1 trajectory = ParsedTrajectory("trajectory.csv") num_eigenvalues=2 dmap = DiffusionMap.from_parsedtrajectory(trajectory) dmap.compute( \ number_eigenvalues=num_eigenvalues, inverse_temperature=inverse_temperature, diffusion_map_method="vanilla", use_reweighting=False) plt.scatter(trajectory.get_trajectory()[:,0], trajectory.get_trajectory()[:,1], c=dmap.vectors[:,0]) plt.savefig('eigenvectors.png', bbox_inches='tight') #plt.show()

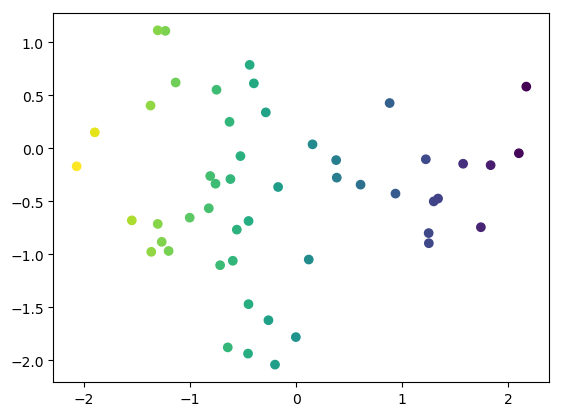

We should then obtain the Figure Diffusion map analysis.

|

Note

|

The true first eigenvector is constant and is therefore dropped in the function compute_diffusion_maps(). |

Keep in mind that the ridge is at the origin and there are two hperbolic basins on either side in the all-positive and all-negative orthant of the 2d space. We see that as we color the trajectory points by the value of the dominant eigenvector, the path between these two minima is highlighted: from light green to dark blue.

The eigenvector gives a direction: From the top right to the bottom left and therefore from one minimum basin to the other.

The eigenvector component give the implicit evaluation of the collective variable function at each trajectory point, i.e. $e_i = \xi(x_i)$, where $\xi(x)$ is the collective variable and $e_i$ is the i-th component and $x_i$ the i-th trajectory point.

The eigenvectors of the diffusion map kernel also give us a mean to assess distances between trajectory points by looking at the difference in values, $|e_i - e_j|$. This is the so-called diffusion distance. It tells us how difficult it is to diffuse from one point to the other.

The main difference between the covariance and diffusion maps is that the latter gives a non-linear mapping which is much more powerful.

2.2.8. Conclusion

This has been the quickstart introduction to the simulation interface.

If we want to take this further, we recommend reading how to implement a GLA2 sampler using this module.

If we still want to take it further, then we need to look at the programmer’s guide that should accompany our installation.

2.3. Using command-line interface

All the tests use the command-line interface which suits itself well for performing rigorous scientific experiments in scripted stages. We recommend using this interface when doing parameter studies and performing extensive runs using different seeds.

The examples on this interface will be much briefer as you are hopefully aware of the simulation module quickstart already and therefore much should be familiar.

|

Note

|

All command-line option have equivalently named counterparts in the options given to the simulation (or model) module on instantiation or to simulation.set_options(), respectively. |

2.3.1. Creating the dataset

As data is read from file, this file needs to be created beforehand.

For a certain set of simple classification problems, namely those that can be found in the tensorflow playground, we have added a TATiDatasetWriter that spills out the dataset in CSV format.

TATiDatasetWriter \ --data_type 2 \ --dimension 500 \ --noise 0.1 \ --seed 426 \ --train_test_ratio 0 \ --test_data_file testset-twoclusters.csv

This will write 500 datums of the dataset type 2 (two clusters) to a file testset-twoclusters.csv using all of the points as we have set the test/train ratio to 0. Note that we also perturb the points by 0.1 relative noise.

2.3.2. Parsing the dataset

Similarly, for testing the dataset can be parsed using the same tensorflow machinery as is done for sampling and optimizing, using

TATiDatasetParser \ --batch_data_files dataset-twoclusters.csv \ --batch_size 20 \ --seed 426

where the seed is used for shuffling the dataset.

This will print 20 randomly drawn items from the dataset.

2.3.3. Optimizing the network

As weights (and biases) are usually uniformly random initialized and the potential may therefore start with large values, we first have to optimize the network, using (Stochastic) Gradient Descent (GD).

2.3.4. Freezing parameters

Sometimes it might be desirable to freeze parameters during training or sampling. This can be done as follows:

from TATi.model import Model as tati import numpy as np FLAGS = tati.setup_parameters( batch_data_files=["dataset-twoclusters-small.csv"], fix_parameters="output/biases/Variable:0=2.", max_steps=5, seed=426, ) nn = tati(FLAGS) nn.init_input_pipeline() nn.init_network(None, setup="train") nn.reset_dataset() run_info, trajectory, _ = nn.train(return_run_info=True, \ return_trajectories=True) print("Train results") print(np.asarray(trajectory[0:5]))

Note that we fix the parameter where we give its name in full tensorflow namescope: "layer1" for the network layer, "weights" for the weights ("biases" alternatively) and "Variable:0" is fixed (as it is the only one). This is followed by a comma-separated list of values, one for each component.

|

Note

|

Single values cannot be frozen but only entire weight matrices or bias vectors per layer at the moment. As each component has to be listed, at the moment this is not suitable for large vectors. |

TATiOptimizer \ --batch_data_files dataset-twoclusters.csv \ --batch_size 50 \ --loss mean_squared \ --learning_rate 1e-2 \ --max_steps 1000 \ --optimizer GradientDescent \ --run_file run.csv \ --save_model `pwd`/model.ckpt.meta \ --seed 426 \ -v

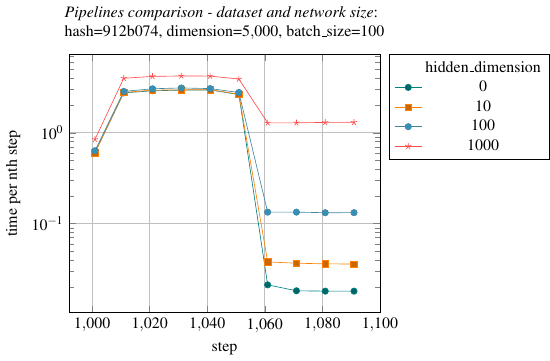

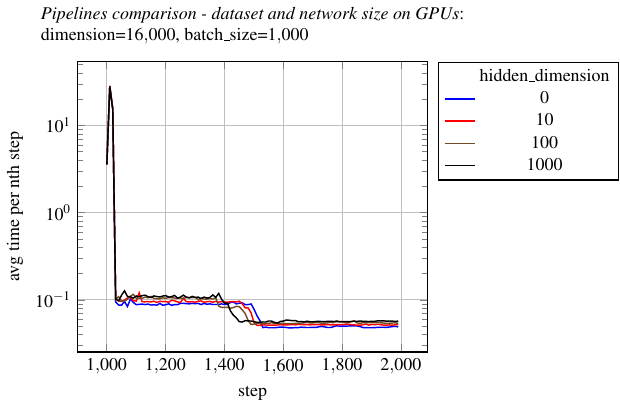

This call will parse the dataset from the file dataset-twoclusters.csv. It will then perform a (Stochastic) Gradient Descent optimization in batches of 50 (10% of the dataset) of the parameters of the network using a step width/learning rate of 0.01 and do this for 1000 steps after which it stops and writes the resulting neural network in a TensorFlow-specific format to a set of files, one of which is called model.ckpt.meta (and the other filenames are derived from this).

We have also created a file run.csv which contains among others the loss at each (every_nth, respectively) step of the optimization run. Plotting the loss over the step column from the run file will result in a figure similar to in Loss history.

|

Note

|

Since Tensorflow 1.4 an absolute path is required for the storing the model. In the example we use the current directory returned by the unix command pwd. |

If you need to compute the optimal step width, which is possible for smaller networks from the largest eigenvalue of the hessian matrix, then use the option do_hessians 1 to activate it.

|

Note

|

The creation of the nodes is costly, $O(N^2)$ in the number of parameters of the network N. Hence, may not work for anything but small networks and should be done on purpose. |

2.3.5. Sampling trajectories on the loss manifold

Next, we show how to use the sampling tool called TATiSampler.

TATiSampler \ --averages_file averages.csv \ --batch_data_files dataset-twoclusters.csv \ --batch_size 50 \ --friction_constant 10 \ --inverse_temperature 10 \ --loss mean_squared \ --max_steps 1000 \ --sampler GeometricLangevinAlgorithm_2ndOrder \ --run_file run.csv \ --seed 426 \ --step_width 1e-2 \ --trajectory_file trajectory.csv

This will cause the sampler to parse the same dataset as before. Afterwards it will use the GeometricLangevinAlgorithm_2nd order discetization using again step_width of 0.01 and running for 1000 steps in total. The GLA is a descretized variant of Langevin Dynamics whose accuracy scales with the inverse square of the step_width (hence, 2nd order).

The seed is needed as we sample using Langevin Dynamics where a noise term is present whose magnitude scales with inverse_temperature.

After it has finished, it will create three files; a run file run.csv containing run time information such as the step, the potential, kinetic and total energy at each step, a trajectory file trajectory.csv with each parameter of the neural network at each step, and an averages file averages.csv containing averages accumulated along the trajectory such as average kinetic energy, average virial ( connected to the kinetic energy through the virial theorem, valid if a prior keeps parameters bound to finite values), and the average (ensemble) loss. Moreover, for the HMC sampler the average rejection rate is stored there. The first two files we need in the next stage.

As it is good practice to first optimize and then sample, also referred as to start from an equilibrated position, we may load the model saved after optimization by adding the option:

... --restore_model ‘pwd‘/model.ckpt.meta \ ...

Otherwise we start from randomly initialized weights and non-zero biases.

2.3.6. Analysing trajectories

Eventually, we now want to analyse the obtained trajectories. The trajectory file written in the last step is simply a matrix of dimension (number of parameters) times (number of trajectory steps).

The analysis can perform a number of different operations where we name a few:

-

Calculating averages.

-

Calculating covariances.

-

Calculating the diffusion map’s largest eigenvalues and eigenvectors.

-

Calculating landmarks and level sets to obtain an approximation to the free energy.

Averages

Averages are calculated by specifying two options as follows:

TATiAnalyser \ average_energies average_trajectory \ --average_run_file average_run.csv \ --average_trajectory_file average_trajectory.csv \ --drop_burnin 100 \ --every_nth 10 \ --run_file run.csv \ --steps 10 \ --trajectory_file trajectory.csv

This will load both the run file run.csv and the trajectory file trajectory.csv` and average over them using only every 10 th data point (every_nth) and also dropping the first steps below 100 (drop_burnin). It will produce then ten averages (steps) for each of energies in the run file and each of the parameters in the trajectories file (along with the variance) from the first non-dropped step till one of the ten end steps. These end steps are obtained by equidistantly splitting up the whole step interval.

Eventually, we have two output file. The averages over the run information such as total, kinetic, and potential energy in average_run.csv. Also, we have the averages over the degrees of freedom in average_trajectories.csv.

This second file contains two rows (together with a header line) in CSV format. The first row (step 0) represents the averages while the second row (step 1) represents th variance of each parameter.

The loss column is the average over all loss values. If an inverse_temperature has been given, then it is the ensemble average, i.e. each loss (and parameter set) is weighted not equivalently (unit weight) but by $exp(-\beta L)$.

In general, taking such an average is only useful if the trajectory has remained essentially within a single minimum. If the loss manifold has the overall shape of a large funnel with lots of local minima at the bottom, this may be feasible as well. Use a covariance analysis and TATiLossFunctionSampler in directions of eigenvalues of the resulting covariance matrix whose magnitude is large to find out.

|

Note

|

Averages depend crucially on the number of steps we average over. I.e. the more points we throw away (option every_nth), the less accurate it becomes. In other words, if large accuracy is required, the averages file (if it contains the value of interest) is a better place to look for. |

Covariance

Computing the covariance is done as follows.

TATiAnalyser \ covariance \ --covariance_matrix covariance.csv \ --covariance_eigenvalues eigenvalues.csv \ --covariance_eigenvectors eigenvectors.csv \ --drop_burnin 100 \ --every_nth 10 \ --number_of_eigenvalues 3 \ --trajectory_file trajectory.csv

Here, we simply give the respective options to write the covariance matrix, its eigenvectors and eigenvalues to CSV files. We drop the first 100 steps and take only every 10th step into account.

The covariance eigenvectors give use the directions of strong and weak change while the eigenvalues give their magnitude. This correlates with strong and weak gradients and therefore with general directions of fast and slow exploration.

Diffusion map

See section [quickstart.simulation.analysis.diffusion_map] for some more information on diffusion maps.

its eigenvalues and eigenvectors can be written as well to two output files.

TATiAnalyser \ diffusion_map \ --diffusion_map_file diffusion_map_values.csv \ --diffusion_map_method vanilla \ --diffusion_matrix_file diffusion_map_vectors.csv \ --drop_burnin 100 \ --every_nth 10 \ --inverse_temperatur 1e4 \ --number_of_eigenvalues 4 \ --steps 10 \ --trajectory_file trajectory.csv

The files ending in ..values.csv contains the eigenvalues in two columns, the first is the eigenvalue index, the second is the eigenvalue.

The other file ending in ..vectors.csv is simply a matrix of the eigenvector components in one direction and the trajectory steps in the other. Additionally, it contains the parameters at the steps and also the loss and the kernel matrix entry.

Note that again the all values up till step 100 are dropped (due to option drop_burnin) and only every 10th trajectory point (due to option every_nth) is considered afterwards.

There are two methods available. Here, we have used the simpler (and less accurate) (plain old) vanilla method. The other is called TMDMap.

If you have installed the pydiffmap python package, this may also be specified as diffusion map method. It has the benefit of an internal optimal parameter choice. Hence, it should behave more robustly than the other two methods. TMDMap is different only in re-weighting the samples according to the specific temperature.

2.3.7. More tools

There are a few more tools available in TATi. They allow to inspect the loss manifold or the input space with respect to a certain parameter set.

The loss function

Let us give an example call of TATiLossFunctionSampler right away.

TATiLossFunctionSampler \ trajectory \ --batch_data_files dataset-twoclusters.csv \ --batch_size 20 \ --csv_file TATiLossFunctionSampler-output-SGLD.csv \ --parse_parameters_file trajectory.csv

It takes as input the dataset file dataset-twoclusters.csv and either a parameter file trajectory.csv. This will cause the program the re-evaluate the loss function at the trajectory points which should hopefully give the same values as already stored in the trajectory file itself.

However, this may be used with a different dataset file, e.g. the testing or validation dataset, in order to evaluate the generalization error in terms of the overall accuracy or the loss at the points along the given trajectory.

Interesting is also the second case, where instead of giving a parameters file, we sample the parameter space equidistantly as follows:

TATiLossFunctionSampler \ naive_grid \ --batch_data_files dataset-twoclusters.csv \ --batch_size 20 \ --csv_file TATiLossFunctionSampler-output-SGLD.csv \ --interval_weights -5 5 \ --interval_biases -1 1 \ --samples_weights 10 \ --samples_biases 4

Here, sample for each weight in the interval [-5,5] at 11 points (10

endpoint), and similarly for the weights in the interval [-1,1] at 5

points.

|

Note

|

For anything but trivial networks the computational cost quickly becomes prohibitively large. However, we may use fix_parameter to lower the computational cost by choosing a certain subsets of weights and biases to sample. |

TATiLossFunctionSampler \ naive_grid \ --batch_data_files dataset-twoclusters.csv \ --batch_size 20 \ --csv_file TATiLossFunctionSampler-output-SGLD.csv \ --fix_parameters "output/weights/Variable:0=2.,2." \ --interval_weights -5 5 \ --interval_biases -1 1 \ --samples_weights 10 \ --samples_biases 4

Moreover, using exclude_parameters can be used to exclude parameters from the variation, i.e. this subset is kept at fixed values read from the file given by parse_parameters_file where the row designated by the value in parse_steps is taken.

This can be used to assess the shape of the loss manifold around a found minimum.

TATiLossFunctionSampler \ naive_grid \ --batch_data_files dataset-twoclusters.csv \ --batch_size 20 \ --csv_file TATiLossFunctionSampler-output-SGLD.csv \ --exclude_parameters "w1" \ --interval_weights -5 5 \ --interval_biases -1 1 \ --parse_parameters_file centers.csv \ --parse_steps 1 \ --samples_weights 10 \ --samples_biases 4 \ -vv

Here, we have excluded the second weight, named w1, from the sampling. Note that all weight and all bias degrees of freedom are simply enumerated one after the other when going from the input layer till the output layer.

Furthermore, we have specified a file containing center points for all excluded parameters. This file is of CSV style having a column step to identify which row is to be used and moreover a column for every (excluded) parameter that is fixed at a value unequal to 0. Note that the minima file written by TATiExplorer can be used as this centers file. Moreover, also the trajectory files have the same structure.

The learned function

The second little utility programs does not evaluate the loss function itself but the unknown function learned by the neural network depending on the loss function, called the TATiInputSpaceSampler. In other words, it gives the classification result for data point sampled from an equidistant grid. Let us give an example call right away.

TATiInputSpaceSampler \ --batch_data_files grid.csv \ --csv_file TATiInputSpaceSampler-output.csv \ --input_dimension 2 \ --interval_input -4 4 \ --parse_steps 1 \ --parse_parameters_file trajectory.csv \ --samples_input 10 \ --seed 426

Here, batch_data_files is an input file but it does not need to be present. (Sorry about that abuse of the parameter as usually batch_data_files is read-only. Here, it is overwritten!). Namely, it is generated by the utility in that it equidistantly samples the input space, using the interval [-4,4] for each input dimension and 10+1 samples (points on -4 and 4 included). The parameters file trajectory.csv now contains the values of the parameters (weights and biases) to use on which the learned function depends or by, in other words, by which it is parametrized. As the trajectory contains a whole flock of these, the parse_steps parameter tells it which steps to use for evaluating each point on the equidistant input space grid, simply referring to rows in said file.

|

Note

|

For anything but trivial input spaces the computational cost quickly becomes prohibitively large. But again fix_parameters is heeded and can be used to fix certain parameters. This is even necessary if parsing a trajectory that was created using some parameters fixed as they then will not appear in the set of parameters written to file. This will raise an error as the file will contain too few values. |

2.4. Conclusion

This has been the quickstart introduction.

In the following reference section you may find the following pieces interesting after having gone through this quickstart tutorial.

-

[reference.examples.harmonic_oscillator] for a light-weight example probability distribution function whose properties are well understood.

-

[reference.implementing_sampler] explaining how to implement your own sampler using the Simulation module as rapid-prototyping framework.

-

[reference.simulation] giving detailed examples on each function in Simulationss interface.

3. The reference

3.1. General concepts

Here, we give definitions on a number of concepts that occur throughout this userguide.

-

Dataset