import os

import requests

from datetime import datetime

import zipfile

import numpy as np

import pandas as pd

from scipy.stats import pearsonr

import matplotlib.pyplot as plt

import seaborn as sns

sns.set(style='white')All the authors above contributed to this data story story with ideas, code development and story telling.

Reviewers

- Nick Barlow. The Alan Turing Institute. Github: @nbarlowATI

- Louise Bowler. The Alan Turing Institute. Github: @LouiseABowler

Welcome to the first Turing Data Story!

Our goal at Turing Data Stories is to produce educational data science content by telling stories with data.

A data story begins with a question about a societal issue that is close to our hearts, and covers our entire analysis process in trying to answer it. From gathering and cleaning the data, to using it for data analysis. We hope that our stories will not only provide the reader with insight into some societal issues, but also to showcase the explanatory power of data science, and enable the reader to try out some of these techniques themselves.

Each of our stories comes in the form of a Jupyter notebook, which will contain all the code required to follow along with the analysis, along with an explanations of our thought process.

COVID-19 and Deprivation

Everyone has been impacted by the COVID-19 pandemic. On the 23rd of March 2020, the UK Government announced various lockdown measures with the intention of limiting the spread of the virus and reducing the number of COVID-19 related deaths. These lockdown measures meant the temporary closure of many commercial shops and businesses, as well as the limiting of work based travel to only those jobs that could not be done at home.

We are concerned that the impact of COVID-19 has disproportionately affected certain groups of people. In particular, that the lockdown may have had a worse impact on those in the most deprived areas, whose livelihoods may have required them to leave the house more frequently.

There have been a number of concerns with Government COVID-19 reporting, in particular with testing and mortality statistics. This motivates independent, open analysis to validate and expand on our understanding of our current state of the pandemic.

Earlier in June, the Office of National Statistics (ONS) published a report exploring this exact question: to assess whether those living in the most deprived areas of the UK were disproportionately affected by COVID-19. The report seems to confirm our fear - between the months of March to May 2020 those in the most deprived areas of the UK were more than twice as likely to die as a result of COVID-19 than those in the least deprived areas.

There are two caveats that we have with the ONS analysis. The first is reproducibility. We want to confirm the ONS results by making the analysis procedure open. The second caveat is that the ONS report aggregates data over time, and therefore that it might miss interesting differences in outcomes between the different stages of lockdown. The lockdown was most severe between March and May, with measures relaxing from June onwards. We wonder whether the ONS analysis will continue to be relevant as lockdown eases. For this purpose, we wish to extend the ONS analysis to cover all available data, and at the same time, make a comparison between the different stages of lockdown.

Thus for our first story we ask:

Have the COVID-19 lockdown measures protected people equally across all socio-economic groups in society?

We have two main objectives

We want to replicate the ONS analysis using their provided data to ensure that we have all the inputs necessary to understand the problem.

We want to extend the ONS analysis to consider different time periods - representing the severity of the different stages of lockdown - to see how this affects people from different socio-economic groups.

Key Metrics

Our analysis will involve exploring the relationship between the following key metrics:

- COVID-19 mortality rates over time and across geographical regions.

- Index of multiple deprivation (IMD) by geographical region - a measure of the geographic spread of social deprivation (see definition and explanation).

Data Sources

We will use the following datasets:

- Mortality count time series

- IMD Rankings (England only)

- Populations provided by the ONS

- Local Authority District Code Region Lookup Table provided by the ONS

- ONS Mortality and Depravation Data

In case any of the data sources become unavailable in the future, a download mirror is available here.

For simplicity this study is only focusing on England. We understand the importance of investigating all of the regions of the UK. However due to the difference of lockdown measures across the nations of the UK, and also due to the way the IMD ranking is defined, an independent analysis is required for each nation. We encourage readers to replicate our analysis with the other nations.

Analysis Outline

Here’s a list of the different steps of the analysis:

- Download and process data from multiple deprivation and COVID-19 deaths.

- Combine the different datasets into a single table by joining on geographical region.

- Calculate age standardised mortality rates from mortality counts.

- Replicate the ONS analysis, looking at mortality rate by region.

- Visualise the distribution of COVID-19 deaths across the UK.

- Segment the data into time periods, corresponding to the different stages of lockdown.

- Explore at the relationship between our two key metrics (deprivation and mortality rates) in the different time periods.

Data Collation and Wrangling

🔧 Setup

We begin by setting up our environment and importing various python libraries that we will be using for the analysis. In particular, pandas and numpy are key data science libraries used for data processing. matplotlib and seaborn will help us visualise our data.

🔧 Let’s make some directories in which we can store the data we are going to download.

# downloaded data goes here

downloaded_data_dir = 'data/downloaded'

# processed data goes here

derived_data_dirname = 'data/derived'

# create the directory if it does not already exist

os.makedirs(downloaded_data_dir, exist_ok=True)

os.makedirs(derived_data_dirname, exist_ok=True)🔧 Here is a small helper function which will download files from a URL.

# This function can download data from a URL and then save it in a directory of our choice.

def download_file(url, filename):

# create the directory if it does not already exist

os.makedirs(os.path.dirname(filename), exist_ok=True)

# make the HTTP request

r = requests.get(url, allow_redirects=True)

# save file

_ = open(filename, 'wb').write(r.content)Index of Multiple Deprivation (IMD)

🔧 Now let’s download and process our deprivation data. This data provides a deprivation rank (lower rank meaning more deprived) for each geographical region in England (the geographical regions are here are called Lower Super Output Areas, or LSOAs). As a rough idea of scale, LSOAs contain on average around 1,500 people.

Download

# specify URL

url = 'https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/833970/File_1_-_IMD2019_Index_of_Multiple_Deprivation.xlsx'

# specify filename

filename = 'ONS_2019_Index_of_Multiple_Deprivation.xlsx'

# construct file path

filepath = os.path.join(downloaded_data_dir, filename)

# download and save file at the specified URL

download_file(url, filepath)

# read the relevant sheet

imd_df = pd.read_excel(filepath, sheet_name='IMD2019')If we sort by deprivation rank, we can get an idea of the most / least deprived LSOAs.

imd_df.sort_values(by='Index of Multiple Deprivation (IMD) Rank').head()| LSOA code (2011) | LSOA name (2011) | Local Authority District code (2019) | Local Authority District name (2019) | Index of Multiple Deprivation (IMD) Rank | Index of Multiple Deprivation (IMD) Decile | |

|---|---|---|---|---|---|---|

| 21400 | E01021988 | Tendring 018A | E07000076 | Tendring | 1 | 1 |

| 12280 | E01012673 | Blackpool 010A | E06000009 | Blackpool | 2 | 1 |

| 12288 | E01012681 | Blackpool 006A | E06000009 | Blackpool | 3 | 1 |

| 12279 | E01012672 | Blackpool 013B | E06000009 | Blackpool | 4 | 1 |

| 12278 | E01012671 | Blackpool 013A | E06000009 | Blackpool | 5 | 1 |

imd_df.sort_values(by='Index of Multiple Deprivation (IMD) Rank').tail()| LSOA code (2011) | LSOA name (2011) | Local Authority District code (2019) | Local Authority District name (2019) | Index of Multiple Deprivation (IMD) Rank | Index of Multiple Deprivation (IMD) Decile | |

|---|---|---|---|---|---|---|

| 17759 | E01018293 | South Cambridgeshire 012B | E07000012 | South Cambridgeshire | 32840 | 10 |

| 15715 | E01016187 | Bracknell Forest 002D | E06000036 | Bracknell Forest | 32841 | 10 |

| 30976 | E01031773 | Mid Sussex 008D | E07000228 | Mid Sussex | 32842 | 10 |

| 26986 | E01027699 | Harrogate 021A | E07000165 | Harrogate | 32843 | 10 |

| 17268 | E01017787 | Chiltern 005E | E07000005 | Chiltern | 32844 | 10 |

Derive Mean IMD Decile

At this point we want to join the two datasets together in order to explore the relationship between our two key metrics.

A problem is that the index of multiple deprivation comes with a geographical granularity at the LSOA level, whilst the COVID-19 mortality counts come with a geographical granularity at the Local Authority District (LAD) level. To complicate things, for each LAD there are generally multiple LSOAs, each with different indexes of multiple deprivation. For more information about the different geographical regions in the UK, read this.

We need to aggregate the LSOAs into LADs by averaging out the indexes of multiple deprivation. First let’s write some functions to help us.

def get_mean_IMD_decile(LAD_code):

# select relevant LSOAs

LSOAs = imd_df[imd_df['Local Authority District code (2019)'] == LAD_code]

# calculate mean IMD rank

mean_IMD_decile = round(LSOAs['Index of Multiple Deprivation (IMD) Decile'].mean(), 2)

std_IMD_decile = round(LSOAs['Index of Multiple Deprivation (IMD) Decile'].std(), 2)

return mean_IMD_decile, std_IMD_decileNow we can use these functions to calculate the mean IMD decile in each Local Authority District.

LAD_codes = imd_df['Local Authority District code (2019)'].unique()

mean_IMD_decile, std_IMD_decile = np.vectorize(get_mean_IMD_decile)(LAD_codes)

LAD_df = pd.DataFrame({'LAD Code': LAD_codes,

'LAD Name': imd_df['Local Authority District name (2019)'].unique(),

'Mean IMD decile': mean_IMD_decile,

'Std IMD decile': std_IMD_decile})

LAD_df = LAD_df.set_index('LAD Code')Let’s make a quick histogram of the mean IMD decile.

LAD_df['Mean IMD decile'].hist(range=(1,11), bins=10)

plt.xlabel('Mean IMD Decile')

plt.ylabel('Count')

plt.show()

It should be noted that we lose some information when averaging the IMD ranks in this way. The central region of the distribution is relatively flat, and so we cannot differentiate well between LADs in this region.

Notice there are no Local Authority Districts that have a mean IMD decile of 1 or 10. This is due to the presence of variance inside each Local Authority District. For example, there is no single LAD whose constituent LSOAs all have a IMD deciles of 1 (or 10). See the table below for the maximum and minimum mean IMD deciles. Note that Blackpool, the most deprived (on average) LAD in England, has a mean IMD decile of 2.41. This demonstrates that this LAD has some LSOAs that are not in the most deprived deciles. The opposite is true for the least deprived areas. The “Std IMD decile” column in the below table shows the level of variation of the IMD (measured by the standard deviation) within each LAD.

LAD_df.sort_values(by='Mean IMD decile')| LAD Name | Mean IMD decile | Std IMD decile | |

|---|---|---|---|

| LAD Code | |||

| E06000009 | Blackpool | 2.41 | 1.58 |

| E08000003 | Manchester | 2.54 | 1.84 |

| E08000011 | Knowsley | 2.56 | 1.91 |

| E09000002 | Barking and Dagenham | 2.68 | 1.01 |

| E09000012 | Hackney | 2.74 | 1.11 |

| ... | ... | ... | ... |

| E07000155 | South Northamptonshire | 8.78 | 1.32 |

| E07000176 | Rushcliffe | 8.82 | 1.58 |

| E07000005 | Chiltern | 8.86 | 1.51 |

| E06000041 | Wokingham | 9.27 | 1.43 |

| E07000089 | Hart | 9.39 | 1.08 |

317 rows × 3 columns

Derive Age Standardisation Weight

To account for the different population sizes in the different Local Area Districts, we want to use a mortality rate rather than an overall count. When we do this we convert a count into a rate per 100,000 people. Furthermore, we want to account for differences in the age distributions of the different LADs in order to make a valid comparison between the different geographic areas. An age standardised rate allows for this comparison. Ideally we would calculate this rate directly from the data, but as our mortality over time dataset does not contain information about age, we instead will need to extract a standardisation factor from a different dataset.

The dataset we will use to do this comes from the ONS study on COVID-19 and deprivation. We will use it to derive a standardisation factor which will allow us to convert our mortality counts into an age and population standardise mortality rate. This mortality rate is a European standard (2013 ESP). As we mentioned, we cannot calculate the factor directly as our mortality over time dataset does not include age information, so this reverse engineering is the best we can do.

For more information on how the mortality rate is calculated, see here. Simply put, this is the formula that we are assuming approximates the relationship between the age standardised rate and the mortality count:

age standardised mortality rate = [standardisation factor] * [mortality count]⚠️ The above procedure is not ideal because it assumes that the distribution of ages of those who died inside each Local Area District is constant in time, and therefore the standardisation factor we derive in one dataset (which doesn’t have information about time) can be applied to the other (which has information about time).

First, let’s download the data.

# download the ONS data from the deprivation study

url = 'https://www.ons.gov.uk/file?uri=%2fpeoplepopulationandcommunity%2fbirthsdeathsandmarriages%2fdeaths%2fdatasets%2fdeathsinvolvingcovid19bylocalareaanddeprivation%2f1march2020to17april2020/referencetablesdraft.xlsx'

# specify filename

filename = 'ONS_age_standarisation_April2020.xlsx'

# construct file path

filepath = os.path.join(downloaded_data_dir, filename)

# download and save file at the specified URL

download_file(url, filepath)

# read the relevant sheet

age_rate_df = pd.read_excel(filepath, sheet_name='Table 2', header=3)Next, we can do some minor selection and reformatting of the DataFrame.

# data if given for many categories and regios, lets choose the inclusive gender, and the unitary authority levels

age_rate_persons_df = age_rate_df[age_rate_df['Sex'] == 'Persons']

# rename columns

age_rate_persons_df.columns = ['Sex', 'Geography type', 'LAD Code', 'Area name', 'All causes Deaths',

'All causes Rate','' ,'All causes Lower CI', 'All causes Upper CI','' ,'COVID-19 Deaths',

'COVID-19 Rate', '','COVID-19 Lower CI', 'COVID-19 Upper CI' ]

# remove anomalous row (Isles of Scilly) without numerical data

age_rate_persons_df = age_rate_persons_df[age_rate_persons_df['All causes Rate'] != ':']

age_rate_persons_df = age_rate_persons_df[age_rate_persons_df['COVID-19 Rate'] != ':']

# remove columns where all entries are NaN due to the spreadsheet formating.

age_rate_persons_df.dropna(axis=1)

age_rate_persons_df.head()| Sex | Geography type | LAD Code | Area name | All causes Deaths | All causes Rate | All causes Lower CI | All causes Upper CI | COVID-19 Deaths | COVID-19 Rate | COVID-19 Lower CI | COVID-19 Upper CI | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Persons | Unitary Authority | E06000001 | Hartlepool | 154 | 170.7 | NaN | 143.5 | 197.8 | NaN | 29 | 31 | NaN | 20.7 | 44.5 |

| 2 | Persons | Unitary Authority | E06000002 | Middlesbrough | 289 | 256 | NaN | 226.1 | 286 | NaN | 89 | 79 | NaN | 63.2 | 97.6 |

| 3 | Persons | Unitary Authority | E06000003 | Redcar and Cleveland | 215 | 142.6 | NaN | 123.5 | 161.8 | NaN | 40 | 26.5 | NaN | 18.9 | 36.2 |

| 4 | Persons | Unitary Authority | E06000004 | Stockton-on-Tees | 297 | 167 | NaN | 147.8 | 186.1 | NaN | 38 | 21 | NaN | 14.8 | 28.9 |

| 5 | Persons | Unitary Authority | E06000005 | Darlington | 169 | 151.5 | NaN | 128.6 | 174.4 | NaN | 26 | 22.9 | NaN | 15 | 33.7 |

Let us now calculate the factor by which we need to multiply the count of deaths to derive the age-standardised mortality rate per 100,000 habitants.

# derive standardisation factors

age_rate_persons_df['All causes rate factor'] = (

age_rate_persons_df['All causes Rate'] / age_rate_persons_df['All causes Deaths'] )

age_rate_persons_df['COVID-19 rate factor'] = (

age_rate_persons_df['COVID-19 Rate'] / age_rate_persons_df['COVID-19 Deaths'] )

# drop columns

age_rate_persons_df = age_rate_persons_df[['LAD Code', 'All causes rate factor', 'COVID-19 rate factor']]We can merge this into the previous DataFrame so all the information is accessible in one place.

LAD_df = LAD_df.reset_index()

LAD_df = LAD_df.merge(age_rate_persons_df, on='LAD Code', how='inner')

LAD_df = LAD_df.set_index('LAD Code')Finally, let’s save the standardisation factors for each LAD, stored in the DataFrame LAD_df, so that we can easily use them later.

# create filename

LAD_df_filename = 'Local_Authority_District_Lookup.csv'

LAD_df_filepath = os.path.join(derived_data_dirname, LAD_df_filename)

# write to csv

LAD_df.to_csv(LAD_df_filepath, index=False)Mortality Counts

Now we are ready to download the main dataset that we will be analysing: the number of COVID-19 and non COVID-19 deaths across time and place.

Download and Format

Let’s download the ONS dataset containing mortality counts by week and Local Authority District.

# specify URL

url = 'https://www.ons.gov.uk/file?uri=%2fpeoplepopulationandcommunity%2fhealthandsocialcare%2fcausesofdeath%2fdatasets%2fdeathregistrationsandoccurrencesbylocalauthorityandhealthboard%2f2020/lahbtablesweek35.xlsx'

# specify filename

filename = 'ONS_COVID_Mortality_Counts.xlsx'

# construct file path

filepath = os.path.join(downloaded_data_dir, filename)

# download and save file at the specified URL

download_file(url, filepath)# specify the sheet of the excel file we want to read

sheet_name = 'Occurrences - All data'

# read the sheet into a pandas DataFrame

mortality_df = pd.read_excel(filepath, sheet_name=sheet_name, header=3)Let’s quickly check if all the LADs are represented in both datasets so that we can join the IMD rank with the mortality information for each LAD.

not_in_imd = set(mortality_df['Area code']) - set(imd_df['Local Authority District code (2019)'])

not_in_mortality = set(imd_df['Local Authority District code (2019)']) - set(mortality_df['Area code'])

print('There are', len(not_in_mortality), 'codes in the IMD dataset but not in the mortality dataset.')

print('There are', len(not_in_imd), 'codes in the mortality dataset but not in the IMD dataset.')There are 4 codes in the IMD dataset but not in the mortality dataset.

There are 30 codes in the mortality dataset but not in the IMD dataset.We have 346 LAD codes in the mortality data set, and only 317 in the IMD dataset. Upon closer inspection, it turned out that IMD dataset does not contain any Welsh entries (as the IMD ranking is defined for England only). Additionally, the mortality dataset contains a single entry for Buckinghamshire, a new unitary authority in 2020 (E06000060). The IMD dataset, meanwhile, contains 4 LAD codes for Buckinghamshire. We will drop these anomalous locations from the analysis for now.

# extract those LAD codes which are present in the mortality dataset but not the IMD dataset (Wales)

missing_LAD_codes_df = mortality_df[~mortality_df['Area code'].isin(imd_df['Local Authority District code (2019)'])]

missing_LAD_codes = missing_LAD_codes_df['Area code'].unique()

# filter by common LAD codes

mortality_df = mortality_df[~mortality_df['Area code'].isin(missing_LAD_codes)]Furthermore, the age standardisation factor derived previously was not able to be derived for one LAD (the Isles of Scilly). Let’s drop that now too to avoid any problems later down the line.

# remove LADs from the mortality DataFrame if we do not have an entry for them in the LAD_df

mortality_df = mortality_df[mortality_df['Area code'].isin(LAD_df.index)]Finally, since we are interested in looking at the effect of COVID-19 and the lockdown policies on the working population, we can remove deaths that took place in care homes or hospices.

# select only deaths outside of care homes and hospices

mortality_df = mortality_df[(mortality_df['Place of death'] != 'Care home') &

(mortality_df['Place of death'] != 'Hospice')]

# to instead select only deaths in care homes or hospices, use this line:

#mortality_df = mortality_df[(mortality_df['Place of death']=='Care home') |

# (mortality_df['Place of death']=='Hospice')]The mortality data starts from Wednesday 1st Jan 2020. Let’s use that to convert the supplied week numbers into a date.

# first day of 2020 is a Wednesday

mortality_df['Date'] = [datetime.strptime(f'2020 {n-1} 3', '%Y %W %w').strftime('%Y-%m-%d')

for n in mortality_df['Week number']]

# drop week number column

mortality_df = mortality_df.drop(columns='Week number')Finally, we can take a random sample of 5 rows from the DataFrame to check everything looks okay, and to get an idea of its structure.

mortality_df.sample(n=5)| Area code | Geography type | Area name | Cause of death | Place of death | Number of deaths | Date | |

|---|---|---|---|---|---|---|---|

| 43031 | E07000136 | Local Authority | Boston | COVID 19 | Other communal establishment | 0 | 2020-03-11 |

| 27659 | E08000002 | Local Authority | Bury | COVID 19 | Other communal establishment | 0 | 2020-02-12 |

| 41644 | E06000043 | Local Authority | Brighton and Hove | All causes | Hospital | 13 | 2020-03-11 |

| 28045 | E08000036 | Local Authority | Wakefield | All causes | Elsewhere | 2 | 2020-02-12 |

| 77533 | E09000007 | Local Authority | Camden | All causes | Elsewhere | 0 | 2020-05-06 |

If you want to reproduce the results from the initial ONS report, you can restrict the date ranges of the data by uncommenting these lines.

#mortality_df = mortality_df[mortality_df['Date'] > '2020-03-01']

#mortality_df = mortality_df[mortality_df['Date'] < '2020-04-18']Download Local Area District to Region Lookup Table

As shown in the ONS report, a nice plot to make is the total number of mortalities in each region of England (a region is composed of many LADs). To do this, we need to know which region each LAD belongs. Let’s download this data now from the following website: https://geoportal.statistics.gov.uk/datasets/local-authority-district-to-region-april-2019-lookup-in-england.

# specify URL

url = 'https://opendata.arcgis.com/datasets/3ba3daf9278f47daba0f561889c3521a_0.csv'

# specify filename

filename = 'LAD_Code_Region_Lookup.csv'

# construct file path

filepath = os.path.join (downloaded_data_dir, filename)

# download and save file at the specified URL

download_file(url, filepath)

# read the relevant sheet

LAD_code_region_lookup_df = pd.read_csv(filepath, index_col='FID').set_index('LAD19CD')Taking a look at the data, we can see that the index “LAD19CD” contains our familiar LAD code, and the column “RGN12NM” gives us the name of the region in which that LAD is located. Perfect!

LAD_code_region_lookup_df.head()| LAD19NM | RGN19CD | RGN19NM | |

|---|---|---|---|

| LAD19CD | |||

| E09000001 | City of London | E12000007 | London |

| E06000054 | Wiltshire | E12000009 | South West |

| E09000002 | Barking and Dagenham | E12000007 | London |

| E09000003 | Barnet | E12000007 | London |

| E09000004 | Bexley | E12000007 | London |

Split Data Into Different Time Periods

Now, the final step before we can really start our analysis is to split our dataset into different time periods. As mentioned in the introduction, we want to compare COVID-19 mortality before, during and after lockdown was in place. Within each time period, we sum over all deaths, and add the IMD decile for each LAD. Finally, we’ll also include Region information.

Let’s write a function to sum the mortality data over time to get the total number of deaths. Then, we’ll reformat the DataFrame to separate COVID-19 and non COVID-19 deaths. Finally, we’ll use the table downloaded in the previous section to get the Region name for each LAD. The function combines information from all the previous DataFrames and produces a DataFrame with everything we need to do our analysis.

def filter_date_and_aggregate(df, date_range=None):

"""

The function:

- Selects dates that are inside the supplied date range.

- Sums over time in this date range.

- Separates COVID-19 vs non COVID-19 deaths.

- Decorates rows with area and region name columns.

- Calculates the standardised mortality rate using previously calculated factors.

- Pulls in the mean IMD decile as previously calculated.

"""

# filter dates

if date_range:

df = df[(df['Date'] >= date_range[0]) & (df['Date'] < date_range[1])]

if len(df) == 0:

print('Error: Please make sure there is some data availbile for the supplied date range!')

return None

# sum over time

df = df.groupby(by=['Area code', 'Cause of death']).sum()

df = df.reset_index(level=[-1])

# seperate out all deaths and COVID deaths as their own columns

df = df.pivot(columns='Cause of death', values='Number of deaths')

df.columns.name = ''

# rename columns

df = df.rename(columns={'All causes': 'Total deaths', 'COVID 19': 'COVID deaths'})

# add non-COVID deaths as column

df['Non COVID deaths'] = df['Total deaths'] - df['COVID deaths']

# add area names

df['Area name'] = LAD_df.loc[df.index]['LAD Name']

# add region names

df['Region name'] = LAD_code_region_lookup_df.loc[df.index]['RGN19NM']

# Calculate the rate per 100k using the age-standardisation factor estimated previously

df['COVID-19 rate'] = (LAD_df.loc[df.index]['COVID-19 rate factor'] * df['COVID deaths']).astype(float)

df['All causes rate'] = (LAD_df.loc[df.index]['All causes rate factor'] * df['Total deaths']).astype(float)

# import mean IMD rank

df['Mean IMD decile'] = LAD_df['Mean IMD decile']

return df# sum over "Place of death" column

mortality_sum_df = mortality_df.groupby(by=['Area code', 'Date', 'Cause of death']).sum().reset_index()First, let’s agreggate the data without splitting into different time periods.

# this line performs the agreggation step (summing mortality over time).

# and also includes information about deprivation, no date filtering yet.

total_deaths_df = filter_date_and_aggregate(mortality_sum_df)Now we can split up the data into three periods. The first period is from January 1st to April 7th - 16 days from the beginning of lockdown. The second period runs from April 7th to June 1st - 16 days after the stay at home order was lifted. The final period runs from June 1st to August 28th.

We use a time delay of 16 days after key policy decisions to account for the time lag between onset of the disase and death. The number was taken from this study: “Clinical characteristics of 113 deceased patients with coronavirus disease 2019: retrospective study” BMJ 2020;368:m1091.

# first date range

first_date_range = ('2020-01-01', '2020-04-07')

first_df = filter_date_and_aggregate(mortality_sum_df, first_date_range)

first_df['period'] = 1

# second date range

second_date_range = ('2020-04-07', '2020-06-01')

second_df = filter_date_and_aggregate(mortality_sum_df, second_date_range)

second_df['period'] = 2

# second date range

third_date_range = ('2020-06-01', '2020-08-28')

third_df = filter_date_and_aggregate(mortality_sum_df, third_date_range)

third_df['period'] = 3print('Total deaths from COVID-19 in before lockdown period:\t', first_df['COVID deaths'].sum())

print('Total deaths from COVID-19 in during lockdown period:\t', second_df['COVID deaths'].sum())

print('Total deaths from COVID-19 in after lockdown period:\t', third_df['COVID deaths'].sum())Total deaths from COVID-19 in before lockdown period: 6521

Total deaths from COVID-19 in during lockdown period: 24179

Total deaths from COVID-19 in after lockdown period: 3217Let’s also divide the rate by the number of days in each time period, which will give us the the age standardised mortality rate per day.

def get_num_days(date_range):

d0 = datetime.strptime(date_range[1], '%Y-%m-%d')

d1 = datetime.strptime(date_range[0], '%Y-%m-%d')

return (d0 - d1).days

full_date_range = ('2020-01-01', '2020-08-28')

total_deaths_df['All causes rate'] = total_deaths_df['All causes rate'] / get_num_days(full_date_range)

total_deaths_df['COVID-19 rate'] = total_deaths_df['COVID-19 rate'] / get_num_days(full_date_range)

first_df['All causes rate'] = first_df['All causes rate'] / get_num_days(first_date_range)

first_df['COVID-19 rate'] = first_df['COVID-19 rate'] / get_num_days(first_date_range)

second_df['All causes rate'] = second_df['All causes rate'] / get_num_days(second_date_range)

second_df['COVID-19 rate'] = second_df['COVID-19 rate'] / get_num_days(second_date_range)

third_df['All causes rate'] = third_df['All causes rate'] / get_num_days(third_date_range)

third_df['COVID-19 rate'] = third_df['COVID-19 rate'] / get_num_days(third_date_range)# recombining with the additional column

all_df = pd.concat([first_df,second_df, third_df])Now we are finished with all of the processing steps and we are ready for the fun part: analysing the data!

Study #1 - Regional Mortality Counts & Rates

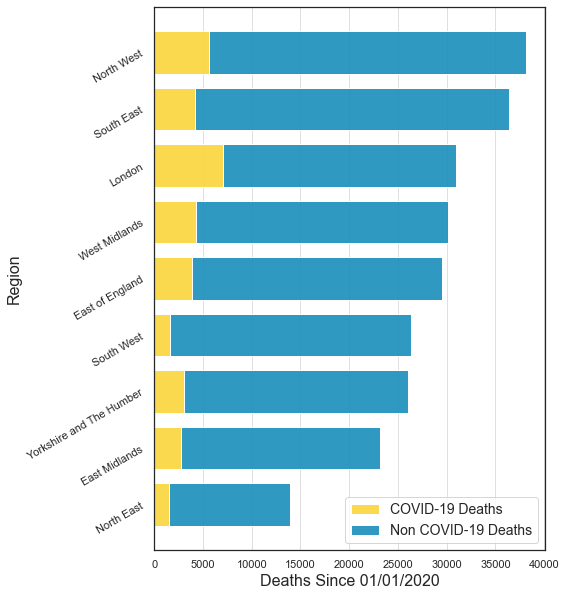

Our first objective was to reproduce the ONS analysis to ensure that we have all the pieces we need to understand the problem. The first plots that we will replicate compare COVID-19 mortality across the different regions in England (Figures 1 & 2 in the ONS analysis).

For more information about what a “replicate” means in this context, check out the definitions over at the Turing Way. In this case, a replicate means a different team coming to the same conclusion, using the same analysis procedure.

Total Mortalities by Region

For Figure 1, we will produce a stacked bar chart showing the mortality count (split up into COVID-19 and non COVID-19 deaths) for each Region in England.

Summing over region and sorting the values, we are almost ready to make the plot.

# sum over LADs in each region

total_deaths_by_region_df = total_deaths_df.groupby(by='Region name').sum()

# sort ascending

total_deaths_by_region_df = total_deaths_by_region_df.sort_values(by='Total deaths', ascending=True)Finally, let’s write the code to actually make the plot.

# region names

xs = total_deaths_by_region_df.index

# mortality counts

non_covid_deaths = total_deaths_by_region_df['Non COVID deaths']

covid_deaths = total_deaths_by_region_df['COVID deaths']

# set bar width

width = 0.75

# colors similar to ONS

covid_color = (251/255, 213/255, 59/255, 0.9)

noncovid_color = (25/255, 142/255, 188/255, 0.9)

# create a figure and plot data

plt.figure(figsize=(7,10))

p1 = plt.barh(xs, covid_deaths, width, color=covid_color, label='COVID-19 Deaths')

p2 = plt.barh(xs, non_covid_deaths, width, left=covid_deaths, color=noncovid_color, label='Non COVID-19 Deaths')

# label axes

plt.xlabel('Deaths Since 01/01/2020', fontsize=16)

plt.ylabel('Region', fontsize=16)

plt.yticks(rotation=30)

# add vertical grid lines

plt.gca().xaxis.grid(True, linestyle='-', which='major', color='grey', alpha=.25)

# show legend and plot

plt.legend(fontsize=14)

plt.show()

There it is! A lot of work but now we can already begin to try and understand what this data is telling us. Here are some conclusions: - For all regions, the number of COVID-19 deaths is smaller than the number of non COVID-19 deaths. - The number of deaths varies a lot between different regions. This can be due to the fact that there are different numbers of people living in each region (for example, there are more people living in the South East than there are in the North East). On top of that, we know that older people have a higher risk of dying after contracting COVID-19, and as the age distributions are different for different regions, this can also affect the overall number of deaths.

Standardised Mortality Rate by Region

To account for the varying population sizes and age distributions, let’s look at the age-standardised mortality rates per 100,000 people, standardised to the 2013 European Standard Population. Age-standardised mortality rates allow for differences in the age structure of populations and therefore allow valid comparisons to be made between geographical areas, the sexes and over time.

# calculate the mean rate per region and sort

total_rates_df_by_region = total_deaths_df.groupby(by='Region name', as_index=False).agg('mean')

total_rates_df_by_region = total_rates_df_by_region.sort_values(by='All causes rate', ascending=True)# region names

x_labels = total_rates_df_by_region['Region name']

xs = np.array(range(len(x_labels)))

# mortality counts

non_covid_rate = total_rates_df_by_region['All causes rate']

covid_rate = total_rates_df_by_region['COVID-19 rate']

# set bar width

width = 0.4

# create a figure and plot data

plt.figure(figsize=(7,10))

p2 = plt.barh(xs+0.2, non_covid_rate, width, color=noncovid_color, label='All Causes Mortality Rate', tick_label=x_labels)

p1 = plt.barh(xs-0.2, covid_rate, width, color=covid_color, label='COVID-19 Mortality Rate')

# label axes

plt.xlabel('Age standardised mortality rate per 100,000 people per day since 01/01/2020', fontsize=16)

plt.ylabel('Region', fontsize=16)

plt.yticks(rotation=30)

# add vertical grid lines

plt.gca().xaxis.grid(True, linestyle='-', which='major', color='grey', alpha=.25)

# show legend and plot

plt.legend(fontsize=14, loc='lower right')

plt.show()

Note that as we plot the rates, we switch from a stacked bar chart (showing counts of COVID-19 and non COVID-19 mortalities), to two bar charts side by side (showing the COVID-19 mortality rate, and the all causes mortality rate). Even with this caveat in mind, when looking at the chart we see it tells a very different story to the previous plot. For example, in the previous plot, the South East had the highest number of total deaths, but looking at the standardised rates in this plot we see that it is ranked second from the bottom. This shows that the raw mortality counts do not tell the whole story, and so we cannot rely solely on them to make meaningful comparisons between different Regions.

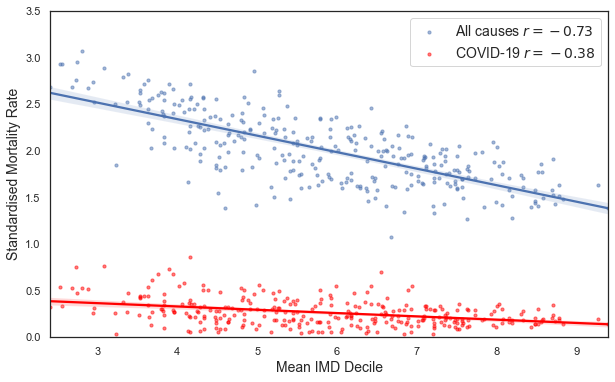

Study #2 - Mortality by Deprivation

We can now study the relationship between COVID-19 mortality and deprivation. To do this we will make some plots showing the standardised mortality rate as a function of the mean IMD decile for each LAD in England. Recall that the rate has been normalised by the number of days in each time period, so that they are comparable.

In the plots, you will see the \(y\) axis labelled with “Standardised Mortality Rate”. In fact, this rate is the age standardised count of deaths observed per 100,000 people per day.

# define a function that we can use to calculate the correlation and slope of a linear fit

def get_corr_and_slope(xs, ys):

# calculate the correlation coefficient

corr = round(pearsonr(xs, ys)[0], 2)

# calcualte the slope of a linear fit

slope = round(np.polyfit(xs, ys, 1)[0], 2)

return corr, slopeComparing All Causes and COVID-19 Mortality Rates

To get started, let’s overlay the mortality rates for all causes of death and COVID-19 on the same plot.

# plot formatting

po = {'s': 10, 'alpha':0.5}

# select data

IMD_decile = total_deaths_df['Mean IMD decile']

all_causes_rate = total_deaths_df['All causes rate']

covid_rate = total_deaths_df['COVID-19 rate']

# calcualte correlations and slopes

ac_stats = get_corr_and_slope(IMD_decile, all_causes_rate)

c19_stats = get_corr_and_slope(IMD_decile, covid_rate)

# make plots

plt.figure(figsize=(10,6))

sns.regplot(x=IMD_decile, y=all_causes_rate, label=f'All causes $r={ac_stats[0]}$', scatter_kws=po)

sns.regplot(x=IMD_decile, y=covid_rate, color='red', label=f'COVID-19 $r={c19_stats[0]}$', scatter_kws=po)

# format plot

plt.ylabel('Standardised Mortality Rate', fontsize=14)

plt.xlabel('Mean IMD Decile', fontsize=14)

plt.legend(fontsize=14); plt.ylim((0, 3.5))

plt.show()

In the plot legends, \(r\) is the correlation coefficient. If you are unfamiliar with the concept of correlation, or want a refresher, take a look here.

Recall that the lower the mean IMD rank in each LAD, the more deprived the area is. There is a negative correlation between the standardised rate and the IMD decile, for both the all causes rate and the COVID-19 rate. The negative correlation tells us that in more deprived areas (those with a lower mean IMD decile), the standardised mortality rate is higher.

Note however that the strength of the correlations are quite different. COVID-19 appears to discriminate less based on social deprivation than all causes of death combined. This link between social deprivation and mortality has been previously observed - see discussions here and here.

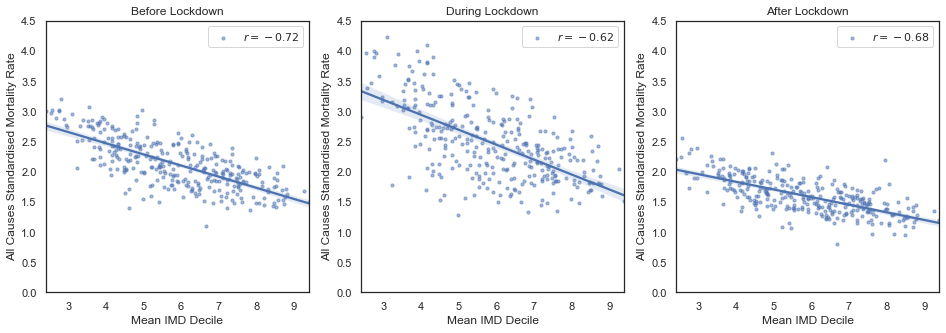

Time Periods

We are now finally ready to investigate the relationship between mortality and deprivation in the different periods of lockdown. The three periods we defined are: - Before lockdown: January 1st to April 7th - Duing lockdown: April 7th to June 1st - After lockdown: June 1st to August 28th

We will look at this relationship for all causes of death, and for COVID-19 deaths separately.

All Causes

ymax = 4.5

plt.figure(figsize=(16,5))

# calcualte correlations and slopes

pre_stats = get_corr_and_slope(first_df['Mean IMD decile'], first_df['All causes rate'])

dur_stats = get_corr_and_slope(second_df['Mean IMD decile'], second_df['All causes rate'])

pos_stats = get_corr_and_slope(third_df['Mean IMD decile'], third_df['All causes rate'])

plt.subplot(131)

plt.title('Before Lockdown')

sns.regplot(x='Mean IMD decile', y='All causes rate', data=first_df, label=f"$r={pre_stats[0]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('All Causes Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, ymax))

plt.subplot(132)

plt.title('During Lockdown')

sns.regplot(x='Mean IMD decile', y='All causes rate', data=second_df, label=f"$r={dur_stats[0]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('All Causes Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, ymax))

plt.subplot(133)

plt.title('After Lockdown')

sns.regplot(x='Mean IMD decile', y='All causes rate', data=third_df, label=f"$r={pos_stats[0]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('All Causes Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, ymax))

plt.show()

After splitting the data into the three time periods, we that the the negative correlation persists for each period, but has different strengths. The potential reasons for the differences in strength are numerous. The middle plot refers to the peak period in the number of COVID-19 deaths. This could be one explanation for the interesting effect that we observe in the middle plot: the correlation is lower (the variance is larger), but the slope seems to be steeper.

To get a better sense of the number of deaths as a function of time take a look at this ONS study.

COVID-19

Next we’ll make the same set of three plots, but look specifically at deaths involving COVID-19.

ymax = 2.5

plt.figure(figsize=(16,5))

# calcualte correlations and slopes

pre_stats = get_corr_and_slope(first_df['Mean IMD decile'], first_df['COVID-19 rate'])

dur_stats = get_corr_and_slope(second_df['Mean IMD decile'], second_df['COVID-19 rate'])

pos_stats = get_corr_and_slope(third_df['Mean IMD decile'], third_df['COVID-19 rate'])

plt.subplot(131)

plt.title('Before Lockdown')

sns.regplot(x='Mean IMD decile', y='COVID-19 rate', data=first_df, label=f"\t$r={pre_stats[0]}$ $m={pre_stats[1]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('COVID-19 Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, 1))

plt.subplot(132)

plt.title('During Lockdown')

sns.regplot(x='Mean IMD decile', y='COVID-19 rate', data=second_df, label=f"\t$r={dur_stats[0]}$ $m={dur_stats[1]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('COVID-19 Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, ymax))

plt.subplot(133)

plt.title('After Lockdown')

sns.regplot(x='Mean IMD decile', y='COVID-19 rate', data=third_df, label=f"\t$r={pos_stats[0]}$ $m={pos_stats[1]}$", scatter_kws=po)

plt.xlabel('Mean IMD Decile', fontsize=12)

plt.ylabel('COVID-19 Standardised Mortality Rate', fontsize=12)

plt.legend(); plt.ylim((0, 1))

plt.show()

⚠️ The “During Lockdown” middle plot contains the peak of COVID-19 mortalities in the UK. The standardised rate is therefore much higher in this plot, and the range of the \(y\)-axis has been increased to reflect this.

Looking only at COVID-19 mortalities, we observe that during lockdown and the peak of the pandemic, the strength of the correlation increases. Again, there are many things that could be the cause of this. Our hypothesis is that, during lockdown, those in more socially deprived areas were more likely to be in circumstances that increased their exposure to COVID-19 (for example key workers who are unable to work remotely - see here and here).

Conclusions

We started writing this story in order to investigate whether COVID-19 deaths were related to social deprivation. We’ve also learnt a lot of other things along the way.

First, there is a very strong relationship between deprivation and general mortality. This means that deprived areas have a higher rate of mortality from all causes than less deprived areas. Life is more difficult if you live in deprivation. This effect is still observed when looking at COVID-19 specific morality rates, although is less strong than the trend from all causes. So COVID-19 appears to be less discriminatory across the different parts of society compared to other causes of death.

We suspected at the beginning that the the state of lockdown may have different affects on different groups of people. We thus wanted to know if this relationship between COVID-19 and death rates changed during the different stages of lockdown. Our analysis shows that the relationship is stronger during the main lockdown period. Note that the relationship we see is only a correlation. More work would need to be done to give any answers about the causal processes that generate this correlation.

There are many things that could be the cause of the observed correlation. Speculatively, it could be down to the fact that people from working class backgrounds are less likely to be able to work from home made them more prone to contracting the virus during lockdown and take it home with them. This would explain their higher rates of mortality. But, as stated, more sophisticated data and analysis techniques would be needed to attempt answer the question of what is the cause of what.

At the moment of writing we are entering a second wave. We hope that we will be able to learn from the challenges that were faced earlier this year, and that more consideration and support is offered to people living in the most deprived areas of the country.

Thank you for taking the time to read this first Turing Data Story, we hope you have found it both interesting and informative. We encourage you to expand on the ideas presented in this story and as well as explore your own questions on this topic. 🙂

We’re now taking in external submissions for new stories 📗 so if you are interested in contributing, please get in touch!