%matplotlib inlineReviewers

- Jack Roberts, Alan Turing Institute (Github: @jack89roberts)

- Nick Barlow, Alan Turing Institute (Github: @nbarlowATI)

Introduction

I was recently chatting with a friend about the respective merits of the French pastries available at our local bakery:

Friend: What do you think of the pastries? Are they good?

Me: Yes, though there is another bakery further out of town that I prefer.

Friend: You don’t think these are as good?

Me: No, but not so much worse that I’d walk a half hour each way to get those since I don’t have a car. They are definitely better than the ones you get at Pret A Manger which you can find in every train station and city center, so definitely well above a replacement level crossaint.

Friend: What do you mean by that, replacement level?

Me: Oh, it’s a term that baseball statisticians use to measure if it’s worth paying money for a particular player. The idea is to come up with some baseline that’s easily available, and then try to determine how much better something is than that. Economists call this “Marginal Utility.”

Friend: [Silence]

Me: [To myself] Did I really just say “replacement level crossaint?”

While I took a course in Economics when I was an undergraduate, I definitely did not completely understand the concept of Marginal Utility until I started reading more about baseball statistics (no doubt beginning with my dog-eared copy of Moneyball by Michael Lewis). The idea is that to compare two goods that have different prices, I need to determine how much additional value I get out of the more expensive one to justify the extra expense. Regarding crossaints, the extra cost of the better bakery was my time, which I determined would be better spent doing something else and thus the marginal utility of the fancier bakery was not worth it.

The undergraduate version of me certainly nodded when the professor talked about this in class, as it sounds simple enough. But what I failed to appreciate back then is the idea that to really understand value in some absolute sense, we need to compare these things to some baseline level that I can always acquire without any effort and minimal expense, something that the baseball statisticians were able to very clearly articulate. In terms of pastries, this was the fare available at the Pret just around the corner – always available for 2 pounds no matter where I am without having to walk a long way, always the same product as they are no doubt churned out en masse at some factory bakery.

Replacement Level

In baseball, this baseline level is referred to as “replacement level.” Conceptually, replacement level does not refer to any player in particular, but rather some theoretical player that any team can acquire at any time with minimal effort (either time or money). We can think of these players as being effectively in infinite supply (by infinite, what we really mean is that “there are far more players of this caliber than there are jobs available on Major League Baseball teams”). If we put a bunch of these theoretical players on the field and played 162 games (a full MLB season), they would not be a particularly good team, but they would be cheap. To win more games than this theoretical team would win, I need to spend some additional time or money in finding players that are better than this replacement level.

One thing to note is that this level is not the average of all players in the league – presumably all of the teams have made considerable effort to spend resources to make their team better, so the league average will reflect some amount of value above this level. How much more valuable is an average player than a replacement level? Most statisticians put replacement level at around 80% of the league average (see for instance the Wikipedia page).

On face, this all seems quite sensible. But recently, I asked myself: why is this a reasonable level? Why 80%? Why not 25%, or 95%? And can we be more precise about why 80% represents this “easily findable value” and what it really means in a statistical sense? This is one of the main criticisms of the statistical effort to estimate replacement value. In the following analysis, I present one way to try and make this estimate more concrete and not include any arbitrary numbers in it.

A Primer on Baseball

Given that baseball is more popular in some parts of the world than others, I give a brief description of the rules of the game in this section. If you are already familiar with the sport, feel free to skip ahead. If the summary in this section is too brief, the Wikipedia page contains more details.

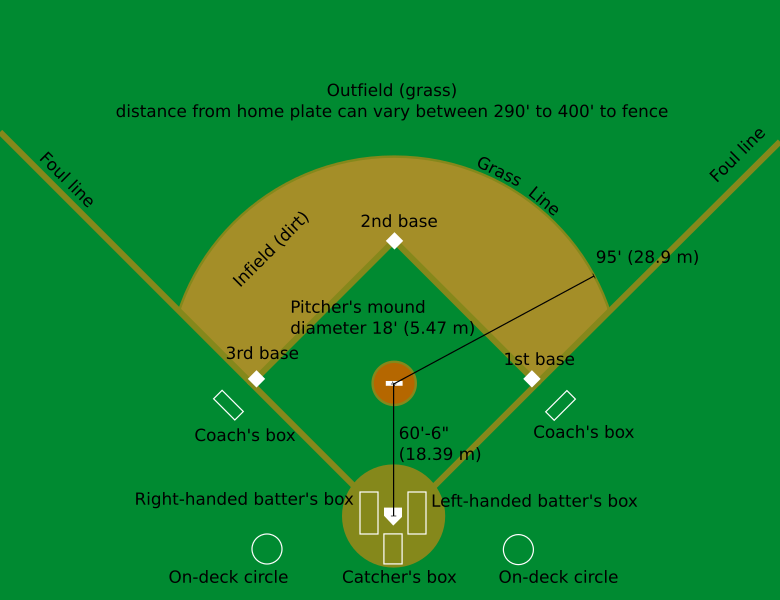

Baseball is a bat and ball game where teams take alternate turns attempting to hit a ball thrown by the defensive team. The field of play is roughly diamond shaped, with four bases placed in a counterclockwise direction around a square:

The hitters start from “home plate” at the base of the diamond (not labelled on the above image, but it is between the batter’s and catcher’s boxes), then first, second, and third bases proceed around the square. The objective of the offensive team is to hit the ball, thrown from the pitcher’s mound at the center of the bases towards home plate, into the field of play between the foul lines in a way that the defensive players are (1) unable to catch the ball before it hits the ground and (2) not able to retrieve the ball and throw it to first base before the offensive player is able to run from home plate to first base. If the offensive player is not successful, then an “out” is recorded, while if the player is able to hit the ball safely, then they are able to remain on the bases and the next member of the team can attempt to hit the ball.

However, the offensive player may not always be able to hit the ball. If the pitcher can successfully cause the hitter to miss the ball three times (called “strikes”), the offensive player makes an out, while if the pitcher throws four pitches that are deemed not to be hittable (called “balls”), then the hitter does not make an out and is awarded first base (a “walk” or “base on balls”, abbreviated BB). Alternatively, if the pitcher throws the ball and it hits the body of the batter (hit by pitch, abbreviated HBP), then that player is awarded first base in the same way as a walk. All attempts by an offensive player thus either result in an out, or the player successfully reaches the bases and the offensive team is able to continue hitting.

If the offensive team is able to advance a player completely around the bases (first, second, third, and then back to home plate), then a point is scored (also called a “run”). If the defensive team is able to record three outs, then the offensive team is out of chances to score and the defensive and offensive teams switch places. Each successive series of attempts is known as an “inning,” and a game consists of 9 innings.

Different types of hits are able to advance runners more bases, so players that are able to do this more often are inherently more valuable. Hits that go for 2 bases are known as “doubles” (2B), 3 bases are known as “triples” (3B) and hits that leave the field of play are known as “home runs” (HR) and all players on the bases are able to advance to home plate. Additionally, one base hits (“singles”, or 1B) tend to advance existing runners multiple bases, as they are often able to get a running start, while walks only allow players to advance if the runner at the previous base is forced to advance. However, the analysis below will ignore the value of baserunning, and instead focus on the above types of hits.

Finally, some types of outs are traditionally treated differently in baseball statistics, as they have a positive effect in that they are able to advance a runner along the bases despite the negative value associated with making an out. These are known as sacrifice hits (abbreviated SH or SF for sacrifice flies), and are usually not counted towards the number of attempts a player is credited with when looking at hitting statistics. Additionally, for historical reasons walks are not counted towards the number of attempts a player is credited with either. Because of these quirks, the number of official “at bats” (abbreviated AB) will be different from the number of “plate appearances” (abbreviated PA). However, since walks create positive value, while sacrifice hits still make an out, we will care more about the number of plate appearances rather than the more common at bats.

Data

In this story, I use the publically available and widely used Lahman Baseball Database. This database is known for its full coverage of most of MLB history, and includes all of the standard batting and pitching statistics for all players for all seasons. It is maintained by a group of volunteers who tabulate statistics and have gone through past newspaper archives to collect the extensive data that it contains. It is available in a number of SQL formats, as well as the raw CSV files, and is updated every year.

In the following, I will use the SQLite version of the database that runs through the 2019 season (it can be found here). I choose this mostly because Python has built-in support for SQLite, and since I will be using this database as a standalone data source, I don’t have to worry about setting up a server, dealing with concurrent users, or modifying the database.

The tables of the Lahman database are described in the documentation. I will mainly be using the batting table, which contains the relevant hitting statistics and can easily be queried to pull out the specific data that I need to perform this analysis. As a reference, here is a table of the abbreviations used in the database and our data frame:

| Symbol | Description | How to Calculate |

|---|---|---|

AB |

At Bats | |

H |

Hits | |

2B |

Doubles | |

3B |

Triples | |

HR |

Home Runs | |

BB |

Walks | |

IBB |

Intentional Walks | |

HBP |

Hit By Pitch | |

SH |

Sacrifice Hits | |

SF |

Sacrifice Flies | |

PA |

Plate Appearances | AB + BB + IBB + HBP + SH + SF |

O |

Outs | AB + SH + SF - H |

1B |

Singles | H - 2B - 3B - HR |

Note there are a few statistics we need that are derived from the other statistics: * Plate Appearances (PA) are all at bats, plus all special cases (i.e. walks and sacrifices) where the player came up but did not result in an at bat. * Outs are the number at bats plus all sacrifices (i.e. outs that are not counted in at bats), minus the number of hits. * Singles (1B) are the number of hits minus all hits resulting on multiple bases (doubles, triples, and home runs).

import pandas

import numpy as np

import sqlite3

import scipy.stats

import matplotlib.pyplot as plt

conn = sqlite3.connect("lahmansbaseballdb.sqlite")

year = 2019Once I have connected to the database, I can query the batting table to get the full statistics for all hitters that registered at least 10 at bats in the most recent full season (2019) and load into a data frame using pandas:

df = pandas.read_sql('select * from batting where yearID = {} and AB > 10'.format(year), conn)

df.head()| ID | playerID | yearID | stint | teamID | team_ID | lgID | G | G_batting | AB | ... | RBI | SB | CS | BB | SO | IBB | HBP | SH | SF | GIDP | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 105864 | abreujo02 | 2019 | 1 | CHA | 2900 | AL | 159 | None | 634 | ... | 123 | 2 | 2 | 36 | 152 | 4 | 13 | 0 | 10 | 24 |

| 1 | 105865 | acunaro01 | 2019 | 1 | ATL | 2897 | NL | 156 | None | 626 | ... | 101 | 37 | 9 | 76 | 188 | 4 | 9 | 0 | 1 | 8 |

| 2 | 105866 | adamecr01 | 2019 | 1 | SFN | 2920 | NL | 10 | None | 22 | ... | 2 | 0 | 0 | 2 | 8 | 0 | 0 | 0 | 0 | 0 |

| 3 | 105867 | adamewi01 | 2019 | 1 | TBA | 2922 | AL | 152 | None | 531 | ... | 52 | 4 | 2 | 46 | 153 | 1 | 3 | 3 | 1 | 9 |

| 4 | 105874 | adamsma01 | 2019 | 1 | WAS | 2925 | NL | 111 | None | 310 | ... | 56 | 0 | 0 | 20 | 115 | 1 | 2 | 0 | 1 | 7 |

5 rows × 25 columns

This only gives us the raw numbers for these players, and the player is identified with a playerID rather than their full name. If I want to get the names, I need to do a join with the people table:

df = pandas.read_sql('''select nameLast, nameFirst, AB, H, "2B", "3B", HR, BB

from batting inner join people on batting.playerID = people.playerID

where yearID = {} and AB > 10'''.format(year), conn)

df.head()| nameLast | nameFirst | AB | H | 2B | 3B | HR | BB | |

|---|---|---|---|---|---|---|---|---|

| 0 | Abreu | Jose | 634 | 180 | 38 | 1 | 33 | 36 |

| 1 | Acuna | Ronald | 626 | 175 | 22 | 2 | 41 | 76 |

| 2 | Adames | Cristhian | 22 | 7 | 1 | 0 | 0 | 2 |

| 3 | Adames | Willy | 531 | 135 | 25 | 1 | 20 | 46 |

| 4 | Adams | Matt | 310 | 70 | 14 | 0 | 20 | 20 |

While it is useful to be able to identify particular players, I am most interested the distribution of outcomes over the entire league, I don’t particularly care which players are which. Thus, most of the time I will just be doing the same query of the batting table to pull out hitting statistics.

However, one non-trivial thing to consider is that players that play different defensive positions have different expectations associated with their batting ability. The most important correction by far is the fact that pitchers tend to be significantly worse hitters than players that play other defensive positions (colloquially referred to as “position players”), though this also applies to other defensive positions to a lesser extent. Pitchers tend to be worse hitters than average, in large part because they get less regular at bats due to the fact that they only pitch every few days. To make a fair comparison, I would thus like to look only at position players, so for the query that I need I will need to do a join on the appearances table. This table lists how many games a player appeared as a pitcher, so I can filter out all players that appeared in a game as a pitcher to exclude them from the analysis.

Runs Created

At a basic level, baseball comes down to two things: runs and outs. Outs occur when a player fails to get a hit. Each game consists of 27 outs per team, so the goal is to not make outs, and instead produce a positive outcome. If a player does not make an out, then there are several possible outcomes: the player can safely hit the ball somewhere, which allows the player and the player’s teammates to advance around the bases a certain amount, or the player can be awarded a base without getting a hit (either through a walk or a hit by pitch). Hits can be singles, doubles, triples, or home runs which advance the batter 1, 2, 3, or 4 bases, respectively (n.b. hits tend to advance any teammates already on the bases a different number of bases, but it turns out that the metric below does a reasonable job of accounting for this as it turns out that a double is about twice as valuable as a single, a triple is three times as valuable, etc.).

While the main goal is to not make outs, hitters that are more likely to advance players by more than one base at a time are more likely to create more runs, as if they have other players already on the bases, they are more likely to score runs. The simplest way that we can account for this is through a metric known as “Runs Created,” which was designed by the baseball statistician Bill James and tries to approximate the number of runs that a player is likely to have produced through their hitting ability. The formula is

\[RC = {\rm Success\: rate}\times{\rm Total\: bases} = \frac{H+BB}{PA}\times TB\]

Here, \(H\) is the number of hits, \(BB\) is the number of walks, \(PA\) is the number of attempts, and \(TB\) is the total number of bases the player advanced through their hits. Note that this is a rate multiplied by a counting stat, ultimately resulting in a counting stat. Note however that the added rate factor means that if two players produce the same number of total bases, but one does it in fewer opportunities or draws more walks, the metric will give more value to the player with the better rate stat.

This statistic was derived mostly on an empirical basis, but for some intution as to why it works imagine that someone gets 4 hits in 16 opportunities, of which two are singles, one is a double, and one is a home run. Then the success rate is 1/4, while the total number of bases are eight, leading to two runs created. Obviously, the home run must have created one run. Thus, the combined value of all the other hits (plus the chances that someone else was on the bases when they hit the home run) is such that statistically, this player’s production increased the number of runs the team scored over the entire season by one. Note that it does not mean that those hits produced one actual run, but rather that given a typical set of scenarios under which those hits took place, the player’s team likely scored one additional run due to those hits.

I can pull out the basic statistics that I need to compute Runs Created with the following SQL query, which I will wrap in a function for convenient further use. As mentioned above, to exclude pitchers I need to do an inner join on the appearances table, which allows me to filter out the pitchers and just focus on position players in this analysis.

I generate 7 columns in this query: the total number of attempts (PA or “Plate Appearances”), number of outs (O), and then 5 additional columns for the types of hits/walks so that I can convert these to the total number of bases. Some statistics, such as sacrifice flies (SF) and intentional walks (IBB) are not recorded for all years, so I use the SQL coalesce function to convert the null values to zero if necessary.

def rc_base_query(year, conn):

"Base query for getting statistics for computing Runs Created"

return pandas.read_sql('''select AB + BB + coalesce(IBB,0) + HBP + SH + coalesce(SF,0) as PA,

AB + SH + coalesce(SF, 0) - H as O,

BB + coalesce(IBB,0) + HBP as BB,

H - "2B" - "3B" - HR as "1B",

"2B",

"3B",

"HR"

from batting inner join appearances on batting.playerID = appearances.playerID

and batting.yearID = appearances.yearID

where batting.yearID = {} and AB > 10 and appearances.G_p = 0'''.format(year),

conn)

print(len(rc_base_query(year, conn)))

rc_base_query(year, conn).head()708| PA | O | BB | 1B | 2B | 3B | HR | |

|---|---|---|---|---|---|---|---|

| 0 | 627 | 428 | 58 | 83 | 33 | 6 | 19 |

| 1 | 38 | 22 | 7 | 4 | 3 | 1 | 1 |

| 2 | 78 | 57 | 6 | 5 | 4 | 0 | 6 |

| 3 | 452 | 311 | 49 | 72 | 11 | 2 | 7 |

| 4 | 702 | 475 | 56 | 97 | 29 | 10 | 35 |

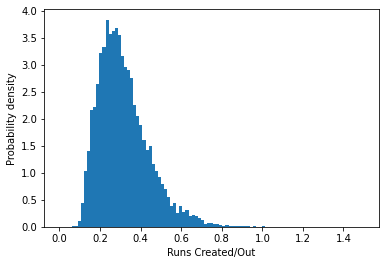

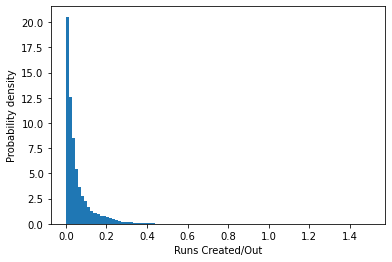

Because players make outs in the process of producing runs, we will want to normalize the runs created by the outs consumed to create these runs, which turns this back into a rate stat and thus allows us to compare across players regardless of the number of attempts. We can thus view the results of all players over a full season on a histogram this way.

def runs_created(season_stats):

"computes runs created per out from a data frame/array holding plate appearances, outs, singles, doubles, triples, and home runs"

if isinstance(season_stats, pandas.DataFrame):

plate_appearances = season_stats["PA"]

outs = season_stats["O"]

walks = season_stats["BB"]

singles = season_stats["1B"]

doubles = season_stats["2B"]

triples = season_stats["3B"]

home_runs = season_stats["HR"]

else:

if season_stats.ndim == 1: # Only the stats of a single player

season_stats = np.reshape(season_stats, (1, -1))

plate_appearances = np.sum(season_stats, axis=-1)

outs = season_stats[:,0]

walks = season_stats[:,1]

singles = season_stats[:,2]

doubles = season_stats[:,3]

triples = season_stats[:,4]

home_runs = season_stats[:,5]

# scrub any player that made no outs

nz_outs = (outs > 0)

rc = (1.-outs/plate_appearances)*(singles + 2*doubles + 3*triples + 4*home_runs)

return rc[nz_outs]/outs[nz_outs]

def rc_histogram(*args, scale="linear"):

"plot a histogram of any number of runs created arrays/sequences on a linear or logarithmic scale"

for rc in args:

if scale == "log":

rc_data = runs_created(rc)

dist = np.log(rc_data[rc_data > 0.])/np.log(10.)

bins = np.linspace(-4., 1., 100)

else:

dist = runs_created(rc)

bins = np.linspace(0, 1.5, 100)

plt.hist(dist, bins=bins, density=True)

if scale == "log":

plt.xlabel("Log Runs Created/Out")

else:

plt.xlabel("Runs Created/Out")

plt.ylabel("Probability density")

season_stats = rc_base_query(year, conn)

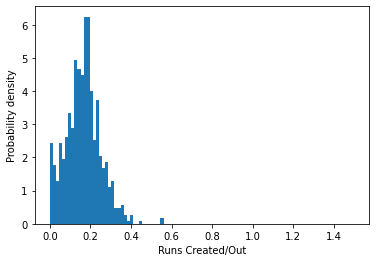

rc_histogram(season_stats)

As we can see, the bulk of the distribution is around 0.2, and then the numbers fall off as we move out towards 0.4, with only a few players managing more than that.

As an aside, the raw numbers here are illustrative of the general idea behind using Runs Created to measure a player’s ability, but this simple version does not account for a number of well-known additional effects:

- We have not measured baserunning ability. Total bases only includes the bases advanced by the players hitting, not their ability to run the bases once on base. More complex formulae can better account for this effect by including stolen bases. However, more general baserunning ability that allows a runner to advance on another teammate’s hits is not measured in the stats we have available in this database so we cannot account for that here.

- We also have not corrected for the fact that different stadiums tend to produce more runs than others, so a player that has half of their opportunities in a stadium that is friendly to hitters will get a boost for which an empirical correction can be made.

- One final, and more subtle issue, is the fact that the best players will be overvalued here because they cannot possibly be on base when they come up to hit (i.e. a player that is significantly better than their teammates will have fewer people on base than would be expected from the average). Since the statistic assumes that everyone has an average number of people on base for their hits, this will be biased towards the better players that will in general have fewer people on base.

More sophisticated techniques are needed to treat these effects, but won’t change the overall way that we convert this distribution into a replacement level value, so we will ingore these additional effects going forwards. As mentioned earlier, a good heuristic estimate of replacement level can be found by taking 80% of the mean, so from these calculations we can estimate replacement level:

def heuristic_replacement_level(season_stats):

"calculate empirical replacement level statistic using 80% heuristic"

return 0.8*np.mean(runs_created(season_stats))

print("Heuristic replacement level for {}: {} runs/out".format(year, heuristic_replacement_level(season_stats)))Heuristic replacement level for 2019: 0.1321843895833081 runs/outThis suggests that creating 0.13 runs per out is a good baseline for comparison for a position player. However, it is not clear why this is the case. The distribution is strongly peaked near 0.2, then falls off towards zero, so it’s not obvious where in the distribution below the mode we should set a cut off for replacement level. The tail of the distribution is also quite heavy, with small numbers of players producing lots of runs. Are these players really so much better than the others, or is this just luck? To address this, we need a way to simulate the season many times to estimate what we expect this distribution to look like with more samples so that we can scrutinize it in more depth.

Multinomial Bayesian Inference

To simulate many versions of the same season, we turn to Bayesian Inference. Bayesian models treat their parameter values as probability distributions, which allows us to quantify the uncertainty in the underlying model and propagate that uncertainty forward in additional simulations. Formally, we treat each parameter \(\theta\) in the model as a probability distribution, and determine what our updated beliefs about the parameter values are once we view the data \(y\), \(p(\theta|y)\). Computing this probability distribution is done via Bayes’ Rule:

\[p(\theta|y) = \frac{p(y|\theta)p(\theta)}{p(y)}\]

\(p(y|\theta)\) here is the likelihood, or the probability of the data given a particular value of the model parameters. \(p(\theta)\) is the prior, our initial beliefs about reasonable values of the parameter. \(p(y)\) is known as the evidence, and is the probability of getting the data over all possible realizations of the experiment. This rule lets us convert the likelihood, which is generally more straightforward to compute, to the posterior by weighting it using the prior and evidence.

Multinomial Distribution

What model might we use to describe a hitter? At the most basic level, we can think of a hitter as a set of probabilities that they will make an out, draw a walk hit a single, hit a double, etc. Thus, a set of six probabilities that sum to 1 is a straightforward way of capturing the abilities of a hitter. Once we have those five probabilities, the outcome of a series of hitting attempts is described by a Multinomial distribution.

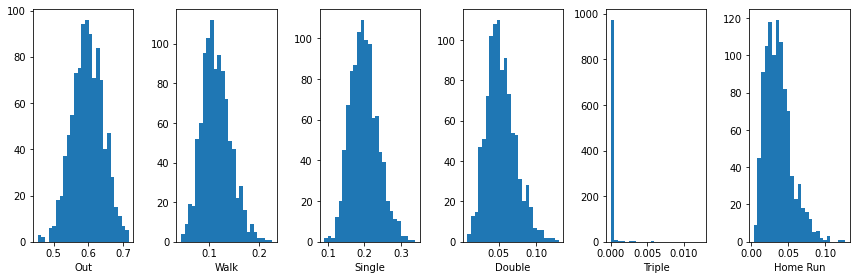

For example, if I am a hitter and I have a 60% chance of making an out, a 10% chance of drawing a walk, 20% chance of hitting a single, 5% chance of hitting a double, 1% chance of hitting a triple, and 4% chance of hitting a hume run, then if I come up to bat 100 times I would expect the following distribution of outcomes:

def plot_multiple_distributions(samples):

"make a plot of multiple components of probability samples"

assert samples.shape[-1] == 6, "samples must have 6 components"

labels = ["Out", "Walk", "Single", "Double", "Triple", "Home Run"]

plt.figure(figsize=(12,4))

for i in range(6):

plt.subplot(int("16"+str(i+1)))

plt.hist(samples[:,i], bins=25)

plt.xlabel(labels[i])

plt.tight_layout()

def plot_multinomial(n_samples, n_trials, p):

"plot the distribution of outcomes from a set of multinomial trials"

assert n_samples > 0, "number of samples must be greater than zero"

assert n_trials > 0, "number of trials must be greater than zero"

assert p.ndim == 1, "p must be a 1D array"

assert np.all(p >= 0.) and np.sum(p) == 1., "all probabilities must be positive and sum to 1"

samples = scipy.stats.multinomial.rvs(n=n_trials, p=p, size=n_samples)

plot_multiple_distributions(samples)

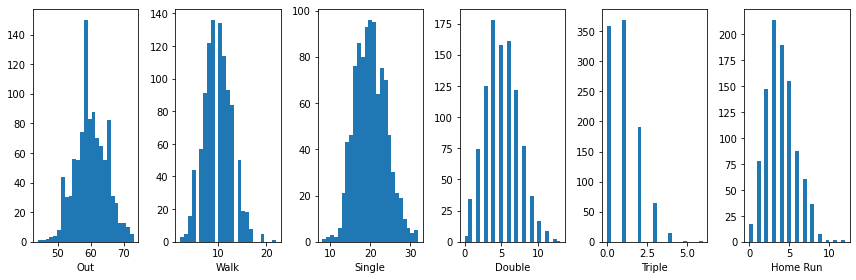

hypothetical_player_probs = np.array([0.6, 0.1, 0.2, 0.05, 0.01, 0.04])

plot_multinomial(1000, 100, hypothetical_player_probs)

Thus, if I repeated my hypothetical set of 100 hitting attemps many times, this shows the range of outcomes I might expect.

Prior

Now I need to specify my prior beliefs about what I probabilities I might expect a hitter to have before I see any data. One natural way to handle this is a Dirichlet distribution, which has the nice property that it plays nicely with a multinomial distribution and gives me an analytical way to compute the posterior. A Dirichlet distribution is a generalization of the Beta distribution in multiple dimensions (in this case, 6 as we need our 6 probabilities for our multinomial distribution).

A Beta distribution takes 2 parameters, while an \(n\)-dimensional Dirichlet distribution takes \(n\) parameters, usually referred to as a vector \(\alpha\). As with the beta distribution, if the parameters are small the probability density is concentrated towards one outcome being more likely, while if the weights are large then probability density is more even across the different dimensions. Combining small and large values let us tweak the distribution in a wide range of different ways, giving plenty of flexibility in describing our prior beliefs.

For this model, I am going to specify a prior that is rather pessimistic about a player’s ability, thus the probabilities should be large for making an out, and relatively small for the more valuable hits. Only once a player shows sustained ability to do better than this threshold will I have more faith in their run producing capabilities. (One caveat here is that I need to be sure at the end of my analysis that my estimation of replacement level is significantly higher than this level, though we will see in the end that the analysis here can identify those players and separate them out of the overall distribution). Note that these choices are inherently subjective and are based on my personal understanding of what I think a good baseball player is likely to do based on many years of watching and following the game.

The following illustrates the priors that I use in my analysis:

def plot_dirichlet(n_samples, alpha):

"plot samples of the probabilities drawn from a Dirichlet distribution"

assert n_samples > 0, "number of samples must be greater than zero"

assert np.all(alpha >= 0.), "all alpha components must be positive"

samples = scipy.stats.dirichlet.rvs(alpha, size=n_samples)

plot_multiple_distributions(samples)

priors = np.array([12., 1., 2., 0.05, 0.01, 0.03])

plot_dirichlet(1000, priors)

Posterior

As noted above, if I use a Dirichlet prior, I can compute the posterior analytically. In this case, the posterior is also a Dirichlet distribution, but with the \(\alpha\) parameters summed with the number of observations of each of the outcomes. In this way, if the absolute number of outcomes is high the posterior weights will reflect that of the data, while if there are only a few observations the prior will still hold some sway over the distribution. Thus, if I take my hypothetical player described above with 100 plate appearances, the posterior given a particular set of observations will be:

hypothetical_player_stats = scipy.stats.multinomial.rvs(size=1, n=100, p=hypothetical_player_probs).flatten()

plot_dirichlet(1000, priors + hypothetical_player_stats)

Simulating full seasons using the posterior

Given the posterior samples, we can use these samples from the posterior of the probabilities describing this player to project their performance over a full season (say over 600 plate appearances, a typical number for someone that plays a full season):

def project_player_season(player_stats, n_samples, plate_appearances, priors):

"project multiple samples from the season of a player given their stats"

assert n_samples > 0, "number of samples must be greater than zero"

assert plate_appearances > 0, "number of plate appearances must be greater than zero"

assert player_stats.shape == (6,), "player stats must be a 1D array of length 5"

assert np.all(player_stats >= 0), "player stats must be non-negative integers"

assert np.all(priors > 0.), "all prior weights must be greater than zero"

outcomes = np.zeros((n_samples, n_samples, 6), dtype=np.int64)

samples = scipy.stats.dirichlet.rvs(alpha=priors + player_stats, size=n_samples)

# occasionally roundoff error causes the sum of these sampled probabilities to be larger than 1

if np.any(np.sum(samples, axis=-1) > 1.):

rounded_error = (np.sum(samples, axis=-1) > 1.)

samples[rounded_error] = (samples[rounded_error]/

(np.sum(samples[rounded_error], axis=-1)[:,np.newaxis] + 1.e-15))

for i in range(n_samples):

outcomes[i] = scipy.stats.multinomial.rvs(n=plate_appearances, p=samples[i], size=n_samples)

return np.reshape(outcomes, (-1, 6))

full_season_pa = 600

print("Hypothetical {} plate appearance season [Outs, Walks, Singles, Doubles, Triples, Home Runs]: {}".format(

full_season_pa, project_player_season(hypothetical_player_stats, 1, full_season_pa, priors)[0]))Hypothetical 600 plate appearance season [Outs, Walks, Singles, Doubles, Triples, Home Runs]: [365 49 135 25 0 26]With all of this, we are now in a position to simulate the Runs Created over many hypothetical seasons to see the variation in the value of this hypothetical player:

hypothetical_player_seasons = project_player_season(hypothetical_player_stats, 100, full_season_pa, priors)

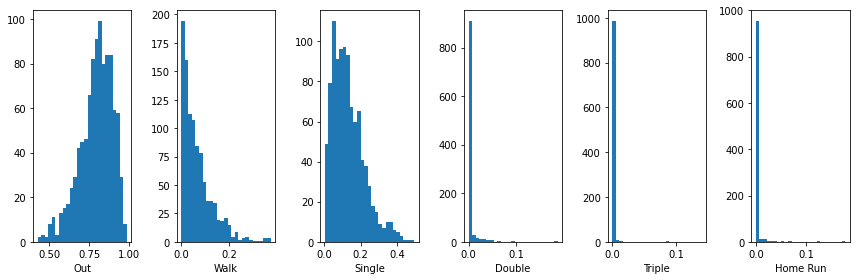

rc_histogram(hypothetical_player_seasons)

From this, we can see that after 100 chances, we still have quite a bit of uncertainty about how well a player will perform over an entire season, though we are somewhat confident that this player is better than average based on comparing this distribution to the observed data for the season.

Simulating League Seasons

Now we can do this for every player in the 2019 season repeatedly to examine what we might expect if this season were to be re-run several times. Once we have simulated this, we can overlay the actual distribution to see what differences we notice.

def project_league_season(season_stats, n_samples, priors):

if isinstance(season_stats, pandas.DataFrame):

season_stats_array = np.array(season_stats)[:,1:]

else:

season_stats_array = season_stats

league_projections = np.zeros((len(season_stats), n_samples*n_samples, 6), dtype=np.int64)

for i in range(len(season_stats)):

league_projections[i] = project_player_season(season_stats_array[i],

n_samples, np.sum(season_stats_array[i]), priors)

return np.reshape(league_projections, (-1, 6))

def project_year(season_stats, stats, n_samples=10):

"project multiple realizations of a full league season"

return project_league_season(season_stats, n_samples, priors)

simulated_seasons = project_year(season_stats, priors)

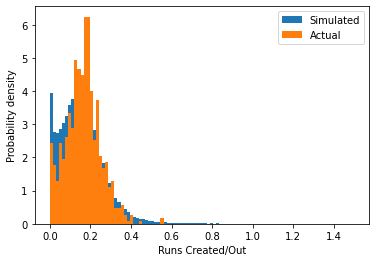

rc_histogram(simulated_seasons, season_stats)

plt.legend(["Simulated", "Actual"])<matplotlib.legend.Legend at 0x12da04d90>

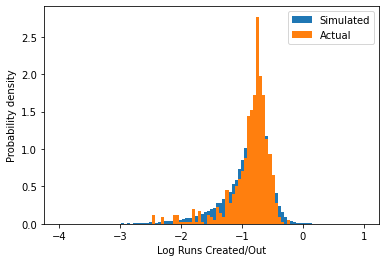

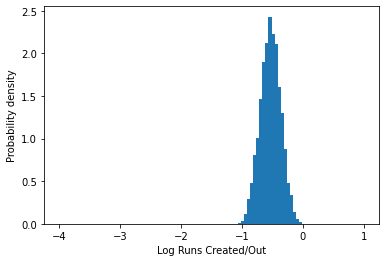

And on a logarithmic scale, to better see the behavior for small values of runs/out:

rc_histogram(simulated_seasons, season_stats, scale="log")

plt.legend(["Simulated", "Actual"])<matplotlib.legend.Legend at 0x12d7f2bb0>

From this, we can see that our simulation method is able to reproduce the distribution in the real data, though it is smoother as we are able to draw many more samples than are available in a single season. However, one important difference is that since we needed to specify a prior (and one that was fairly pessimistic), there is an extra peak near zero runs/out in the simulation. These players are not really relevant for estimating replacement level, so we will need to determine a way to scrub them from the distribution.

Additionally, on the logarithmic scale we note that distribution is not symmetric – there are more players in the real data that are worse than average than players that are better than average. This suggests that there are some players getting attempts that are significantly worse than most major league players, which are likely to be indicative of replacement level.

We can check this by plotting what distribution we would expect if all players’ abilities were drawn from our prior:

season_prior_level = project_player_season(np.zeros(6), 1000, full_season_pa, priors)

rc_histogram(season_prior_level)

And again on a logarithmic scale:

rc_histogram(season_prior_level, scale="log")

This looks similar to the peak closer to zero in the above simulated distribution, confirming that we have a number of players for which we do not have evidence that they are significantly better than our prior. The remaining players that perform better than this level are players where we have evidence of how good they are. These are either “good” major league players, or if they are too far on the negative side of the distribution, then I propose that these are a good representation of replacement players. Thus, we need to determine a method to separate out these parts of the overall distribution.

Approximating the distributions

To try and separate out distributions of the “good” players, “sub-replacement” players, and “not enough data” players, we need to first hypothesize the form we might expect the distributions of simulated player seasons to take. One constraint is that we know that Runs Created has to be positive. A commonly used distribution for positive random variables is a Lognormal distribution. It is fairly easy to check if a variable is Lognormal, as we simply can make a histogram with a log horizontal scale and check if the data looks roughly normal on this scale:

rc_histogram(hypothetical_player_seasons, scale="log")

This looks reasonably symmetric, so fitting a normal distribution to the data on a log scale is probably a reasonble way to approximate this distribution. While I might in some situations dig into the log-normality of these distributions more carefully, for the sake of this analysis this is probably a reasonable approximation to make.

Bayesian Gaussian Mixture Models

To separate out the three parts of the distribution, I will fit a Gaussian Mixture Model to the data on a logarithmic scale. A Gaussian Mixture Model approximates a probability distribution as the sum of several Normal distributions, allowing us to tease out the three components by scrutinizing the parameters of the distributions. I can fit this type of model readily using the Scikit-Learn package:

from sklearn.mixture import BayesianGaussianMixture

from sklearn.utils._testing import ignore_warnings

from sklearn.exceptions import ConvergenceWarning

@ignore_warnings(category=ConvergenceWarning)

def fit_mixture_model(simulated_seasons, n_components):

"Fit a Gaussian Mixture model to the given season"

rc = runs_created(simulated_seasons)

rc = np.reshape(rc[rc > 0.], (-1, 1))

return BayesianGaussianMixture(n_components=n_components).fit(np.log(rc)/np.log(10.))

n_components = 3

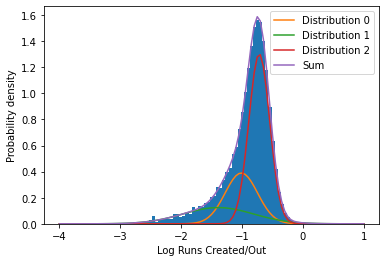

gmm = fit_mixture_model(simulated_seasons, n_components)I occasionally get ConvergenceWarnings when fitting this model. Further inspection has shown that these models usually seem to make reasonable predictions, so for the sake of clean presentation I suppress such warnings here. We can plot the mixture model on top of the data to which it has been fit as follows:

def get_normal_from_gmm(gmm, index):

"converts gmm model to callable pdf function"

assert index >= 0

assert index < len(np.squeeze(gmm.weights_))

weights_array = np.reshape(np.squeeze(gmm.weights_), (-1, 1))

mean_array = np.reshape(np.squeeze(gmm.means_), (-1, 1))

cov_array = np.reshape(np.squeeze(gmm.covariances_), (-1, 1))

def norm_pdf(x):

return(weights_array[index]*

scipy.stats.norm.pdf(x, loc=mean_array[index],

scale=np.sqrt(cov_array[index])))

return norm_pdf

def plot_distribution_sum(simulated_seasons, n_components):

"plot the Gaussian mixture models with the underlying data"

rc_histogram(simulated_seasons, scale="log")

gmm = fit_mixture_model(simulated_seasons, n_components)

pdfs = [get_normal_from_gmm(gmm, i) for i in range(n_components)]

logrc_vals = np.linspace(-4., 1., 101)

for (pdf, i) in zip(pdfs, range(n_components)):

plt.plot(logrc_vals, pdf(logrc_vals), label="Distribution {}".format(i))

plt.plot(logrc_vals, np.sum(np.array([pdf(logrc_vals) for pdf in pdfs]), axis=0), label="Sum")

plt.legend()

print("Means: {}".format(np.squeeze(gmm.means_)))

print("Variances: {}".format(np.squeeze(gmm.covariances_)))

plot_distribution_sum(simulated_seasons, n_components)Means: [-1.01128144 -1.39076184 -0.71722101]

Variances: [0.07110075 0.33645528 0.02934296]

We see that the Gaussian Mixture Model was able to fit the three components approximately where we would expect. (Note that because the Gaussian Mixture Model does not always put the components in the same order after fitting, the numbering of the different distributions may not always be the same. Thus, I try not to refer to the legend labels in the following for consistency.)

At the lowest end, we see a very broad distribution over the full range of the plot, which is quite similar to what was observed when just plotting samples from the prior on a logarithmic scale above. These are players for which data is scarce, and we will ignore these.

Then there is the high end, where most of the mass in the distribution can be found. These are the good, every day major league players. While we could use this distribution to understand how good a particular player is, it likely does not contain any replacement players so we cannot use it to independently assess what level replacement is. Note that this is precisely why we went to the trouble of doing this simulation – the standard 80% of league average heuristic is arbitrary because it is based on players that are clearly not replacement level.

This leaves the intermediate distribution – the players that are clearly below the typical major league player, but also those where we have evidence of how good they are over the prior distribution that I specify. Thus, perhaps this distribution is representative of the production of replacement players in this particular season. To get a replacement level estimate, I just have to separate out this distribution, and for simplicity’s sake, use the mean of this to estimate replacement level:

def extract_replacement_level(gmm):

"""

Determine the mean runs created/out for players in the sub-replacement distribution

(assumes the highest mean is associated with good players, while the second highest

mean is replacement level)

"""

means = np.sort(np.squeeze(gmm.means_))

assert len(means) >= 2

return 10.**(means[-2])

print("Estimated replacement level: {}".format(extract_replacement_level(gmm)))

print("Heuristic replacement level: {}".format(heuristic_replacement_level(season_stats)))Estimated replacement level: 0.09505133831076636

Heuristic replacement level: 0.1321843895833081This is somewhat below the heuristic estimate, but we have a much less arbitrary way of estimating it so we can better understand what it means. Our estimate of replacement level is the expected runs created per out from the population of players where (1) we have evidence from their hitting that they are better than our pessimistic prior, and (2) we cannot explain the presence of these players in the distribution of simulated season statistics based on an assumption of normality in the simulated data. This happens to be about 60% of the league average, rather than 80% of the league average, though I am less interested in getting the exact numbers to line up than better understanding the various distributions that fall out of the analysis and using them to explain replacement level more precisely, so this will be fine for these purposes.

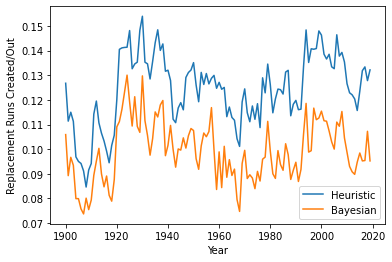

With this machinery in place, one thing we can easily do is compute this for every year since 1900 in baseball history:

def bayesian_replacement_level(year, conn, priors, n_components):

"Full function for Bayesian replacement level estimation"

season_stats = rc_base_query(year, conn)

league_season = project_year(season_stats, priors)

gmm = fit_mixture_model(league_season, n_components)

return extract_replacement_level(gmm)

def compute_heuristic_replacement_level(year, conn):

"full function for heuristic replacement level calculation"

season_stats = rc_base_query(year, conn)

return heuristic_replacement_level(season_stats)

def make_time_plot(start, end, priors, n_components, conn):

"plot the evolution of estimates for replacement level over time"

start = int(start)

end = int(end)

n_components = int(n_components)

years = np.arange(start, end)

heuristic_estimates = [compute_heuristic_replacement_level(yr, conn) for yr in years]

bayesian_estimates = [bayesian_replacement_level(yr, conn, priors, n_components) for yr in years]

plt.plot(years, heuristic_estimates, label="Heuristic")

plt.plot(years, bayesian_estimates, label="Bayesian")

plt.legend()

plt.xlabel("Year")

plt.ylabel("Replacement Runs Created/Out")

make_time_plot(1900, 2020, priors, n_components, conn)

As we can see, this method gives a good indication of the variability in replacement level over the course of the history of baseball that mirrors the traditional heuristic, though the level is somewhat lower (as mentioned above). We also note that the difference between the two measures is smaller prior to 1920, with more stable differences after that despite some significant fluctuations over time. We see that it captures the overall trends in run production that are well documented – runs were scarce in the “dead ball” era prior to 1920, then show an uptick, a decrease in the 1940’s that holds fairly stable until an increase in the late 1990’s and early 2000’s during the “steriod” era, and a lull again in the 2010’s that has since increased again.

Discussion

As mentioned above, our estimate of runs created is somewhat crude for this analysis. Extending this to be more accurate requires factoring in base running, correcting for the effect of each stadium on run production, and being more precise in how the runs each team scores can be attributed to each player (i.e. the “a player cannot be on base for themselves” effect). One additional factor to consider is fielding luck – more modern measurement techniques can track the ball when it is hit, and corrections can be made for hard hit balls that are likely to have been hits but the defense was positioned correctly to make an out. However, this data is only available for modern play, so projecting these effects over the course of baseball history is not possible.

A similar analysis can be done for a pitchers. Pitchers are fundamentally measured by their ability to get outs and not giving up hits or walks, just with the desired outcomes reversed when compared to hitters. However, much analysis by statisticians have shown that there is significant randomness in the fraction of batted balls that turn into hits versus outs, so the simplest way to study pitchers is to look at the things that are entirely in the pitcher’s control: walks, strikeouts (i.e. the batter was unable to hit the ball and this out can entirely be credited to the pitcher’s ability), and home runs over a number of innings pitched. This is known as Fielding Independent Pitching (FIP), and can be converted into a number of runs saved. This can then be analyzed in a similar fashion to the runs that a hitter creates to measure pitcher value.

How would a team be likely to use this data and approach? First, we can simulate the range of seasons that any player would be likely to produce given their actual statistics, which is useful for evaluating individual players. This approach can also determine if a team has been lucky or unlucky in terms of their hits producing runs. Very often, a team that produces more runs than would be expected with a statistic like runs created is likely doing so based on luck, and would not necessarily be expected to sustain the same level over the remainder of a full season. There are a number of ways we could use this approach to further evaluate players and teams, though this is beyond the scope of this story.

Beyond baseball, measuring marginal utility is more difficult because the value is often subjective and hard to boil down to a clear metric from which a baseline level can be estimated. For instance, I love getting coffee from my local cafe. Should I be comparing this to coffee I make at home? Get from Starbucks? From the instant machine at my local convenience store? Depending on the circumstance, “available with minimal effort” is highly dependent on context and mood. Until we find a way to quantify that in an objective way, sports will remain one of the most popular avenues to rigorously quantify and analyze marginal utility.