What is Fairness?

The Children and the Flute: An Example of Value Pluralism

Three children are arguing about which of them should have a flute.

- Anne claims the flute should belong to her on the grounds that she is the only one who can play the flute.

- Bob claims the flute should belong to him on the grounds that he is the only one among the three who is so poor that he has no toys.

- Carla claims the flute should belong to her on the grounds that she was the one who made the flute.

None of the children deny the claims of the others.

Question

Is it fair to give the flute to Anne, Bob, or Carla?

The story of the children and the flute is proposed by Amartya Sen in his seminal work The Idea of Justice.[^1] It is designed as a way to test our intuitions about our ability to reconcile incommensurable values (i.e. values that cannot be judged by the same standards) when evaluating claims regarding fair treatment or outcomes. In the story, the three children represent competing theories of fairness that emphasise different values.

- Anne represents utilitarian theories of fairness (e.g. fairness involves maximising the overall welfare of the group)

- Bob represents economic egalitarian theories of fairness (e.g. fairness involves ensuring equal distribution of goods)

- Carla represents libertarian theories of fairness (e.g. fairness involves freedom to pursue one's own interests and benefit from one's own efforts)

While the values expressed by these theories do not always have to come into conflict, the purpose of Sen's example is to present a toy example of how and where they can.

This module is not about incommensurable values. However, it is about how to manage and address (sometimes) competing claims of fairness in the context of data-driven technologies. As such, Sen's example provides a useful bookend and a reminder that fairness is not a single, objective concept, but rather a process of evaluating various claims and reasons on the basis of their respective merits, and in a way that strives to ensure all voices (and values) are heard and included.

What would you save?

Your house is on fire and you can take only three things with you before the entire structure becomes engulfed in flames.

What would you save?[^taboo]

Taboo trade-offs

People answer this question in very different ways.

What can be invaluable to one person might seem like a trinket to another or vice versa.

We seem to have a lot of flexibility in how we ascribe value to our possessions, which sometimes gives them the quality of the _sacred_.

This quality can be extended to values as well, and we usually hold on extra tightly to those we (consciously or not) consider sacred.

Research suggests that once something has become sacred or holds sacred value, people are (perhaps unsurprisingly) much more reluctant to compromise over it.

When they are asked to, they often express moral outrage, anger, and even disgust, a phenomenon that has been referred to as the _taboo trade-off_.[^tetlock]

This means that they become insensitive to cost-benefit analysis, and they are _less_ likely to accept an exchange if they are offered money to relinquish whatever they hold to be of sacred value (think of how you would react if someone offered you money to compromise on your principles).

This is relevant here because it is another example of _value pluralism_. Individuals, groups, and even cultures can value certain objects, ideas, and practices in widely different ways.

Defining Fairness

Given the previous example, you will find it unsurprising that this module will not attempt to define fairness in the traditional way. Rather, it will explore different facets of the concept, how they relate to each other, and most importantly how they can be practically applied to the design, development, and deployment of data-driven technologies.

In doing so, we will appeal to a variety of interrelated theories and concepts, including:

- Legal concepts of non-discrimination and equality

- Moral concepts of social justice and distributive justice

- Practical forms of bias mitigation and statistical fairness

- Social concepts of diversity, inclusion, and representation

We will strive to identify common (or, underlying) themes that can be used to operationalise and proceduralise fairness in the context of data-driven technologies.

Clarification

It is important to note that this module is not a summary of or introduction to any of these areas.

Therefore, while we will strive to make explicit where specific concepts are drawn from (e.g. legal versus moral literature), it is important to note that many of the concepts are inherently multi-disciplinary and interrelated.

Let's start by looking at some questions that span the different perspectives outlined above.

Questions of Fairness (1): Procedures

Here are three questions that all relate to fair procedures:

- Who should get what?

- How should we decide?

- Who decides?

These three questions are related. The first question relates to the challenges of deciding how to allocate or distribute goods. For instance, who should be granted a mortgage? And, which factors are relevant to the way we carve up different groups (e.g. those eligible and those ineligible)?

The second question relates to the decision procedures (or rules) we use to actually allocate or distribute goods. For instance, will different groups be offered different mortgage rates? If so, what will influence this decision (e.g. will weights be applied to the previous set of factors)?

And the third question relates to the authority of the decision procedure. That is, who is designing and implementing the decision procedure, and what provides them with the authority (or, legitimacy) to do so?

To give one example, perhaps senior decision-makers for a national department of health and social care are trying to decide how to allocate limited resources for accessing counselling services. They start by deciding that the services should be allocated to those who are most in need. However, they soon realise that they do not have a clear definition of what "most in need" means. As such, they reach out to a group of stakeholders (e.g. patients, clinicians, and researchers) to help them define the criteria for eligibility.

Here, the procedures for answering each of the above questions are inclusive and aim to uphold values such as democratic forms of deliberation. But whether the procedures will a) maximise the overall welfare of the group, b) ensure equal distribution of goods, or c) allow people to pursue their own interests and benefit from their own efforts, is not directly addressed.

So, we can now see that the underlying values that characterise fairness can be separated from the procedures used to achieve them.

What is a good?

As we use the term in this module, a good is anything that is valued by a person or a group of people.

This can be some resource (e.g. money, food, water), a service (e.g. healthcare, education, housing), an outcome (e.g. improved mobility, winning the lottery), or a status (e.g. power, prestige, respect).

It is important to note that a good can be either tangible or intangible, so the term is intentionally broad.

Questions of Fairness (2): Outcomes, Comparisons, and Trade-Offs

In addition to the previous questions, there are also questions about how different outcomes should be compared and which trade-offs between them are acceptable. For example:

- Is a system that gives everyone an equal opportunity fairer than one that ensures everyone has an equal outcome?

- Is an algorithm that follows consistent and impartial logic fairer than a human that shows empathy and compassion?

- Is it fairer to use a more accurate model, which is complex and opaque in its workings, than a less accurate but interpretable model?

Answering questions such as these may involve both the identification and evaluation of competing values that pertain to specific outcomes, as well as the different decision procedures employed.

When we ask questions such as these, or those from the previous set, it is important to be clear on our target. Is our question focused on a comparison of different values, as is the case with the choice between which of the children should get the flute? Is our question seeking to clarify whether a decision procedure is legitimate, or is it trying to determine if it is effective in realising some pre-determined outcome? Or, is our question seeking to clarify the reasons that are relevant to resolving some trade-off?

Until we can clearly specify the question we are asking, it is unlikely we will be able to form consensus on an answer or even be sure that we are disagreeing over the same thing. Over the course of this module we will slowly build up our conceptual vocabulary to develop both a shared understanding of the different questions that can be asked, as well as the different answers that can be given.

We have already started this process, but let's now turn to the first set of conceptual distinctions that directly address data-driven technologies.

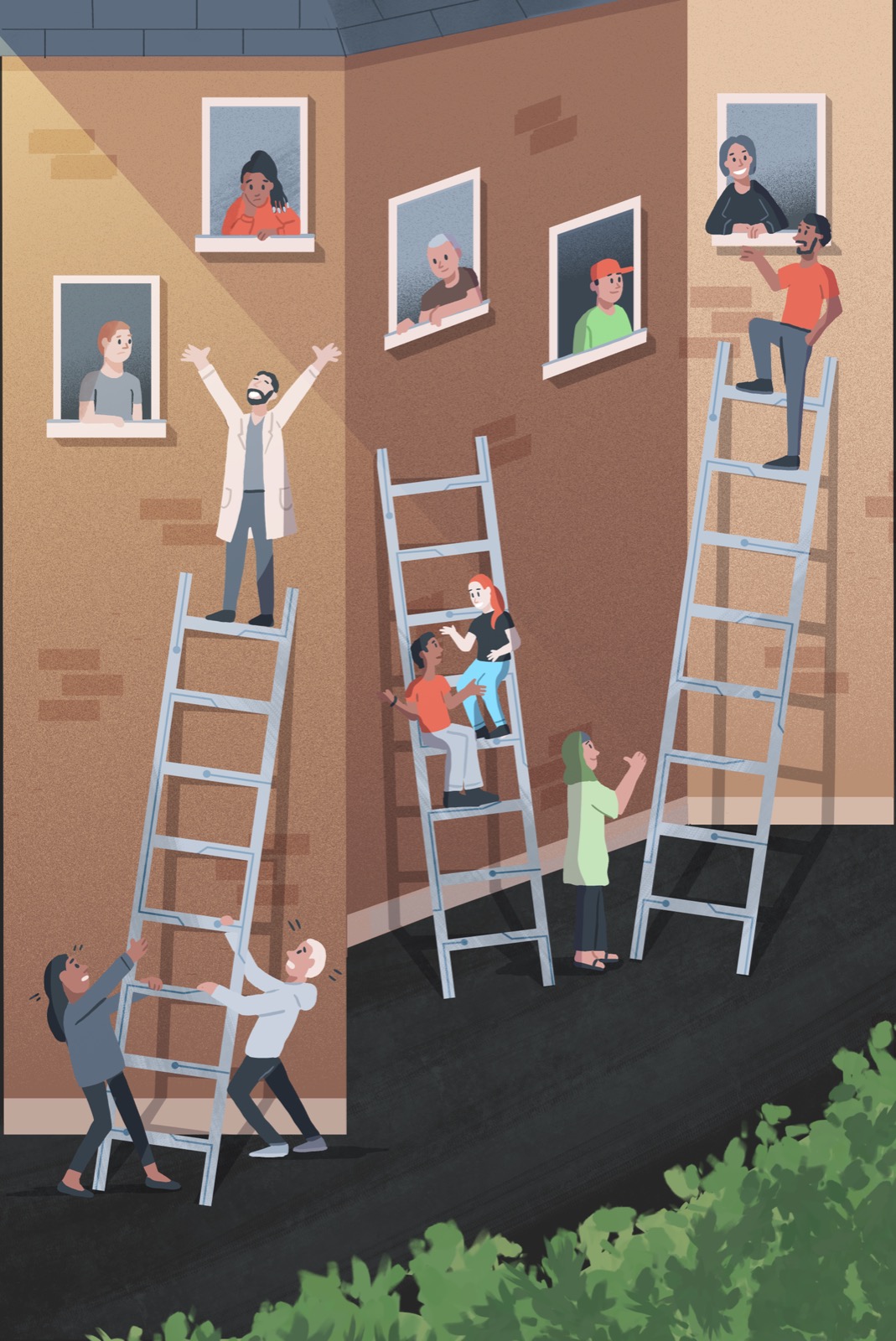

Conceptual Matters (1): Two Perspectives on Fairness

There are two perspectives on fairness that we will use throughout this module to help us emphasise different features of data-driven technologies that relate to matters of fairness:

- Sociocultural Fairness

- Statistical Fairness

What do we mean by 'perspective'?

Our use of the term 'perspective' is intended to draw attention to the fact that each perspective is focused on the same questions, but approaches the questions from a different stance or starting point.

No single perspective will be able to capture everything that matters about fairness.

Rather, an inclusive and diverse set of backgrounds and people will be required to ensure that the different perspectives are well represented and that all considerations have been taken into account.

There are many ways that we could have defined or labelled these perspectives.

However, the perspectives we have chosen were selected to achieve a balance between a) maximising applicability across different domains while b) minimising the number of categories.

Let's look at each of these perspectives in turn.

Sociocultural Fairness

Sociocultural fairness emphasises the importance of the social and cultural context in which a data-driven technology is developed, deployed, and used. Adopting this perspective helps us to consider the ways in which different groups or communities may be impacted by a technology, whether the implementation of a system into a particular context is appropriate, and whether the technology is being used in a way that is consistent with the values of the community.

Addressing questions about sociocultural issues typically requires a deep understanding of the historical and current practices and dynamics of the society or community in question.

Sociocultural fairness in healthcare

In the context of healthcare, for example, it might be important to consider relevant aspects at the research and deployment stages of a system.

Which research projects get funded?

What kinds of conditions get the most attention?

For example, a disproportionate percentage of resources might go to funding treatments which are very lucrative (e.g., treatment for chronic conditions). Similarly, a lot of resources might end up in research for medical (and cosmetic) anti-aging products and procedures, not necessarily because they are life-saving treatments, but because there is a high demand for them.

On the other hand, sometimes quite deadly conditions go underfunded due to their rarity. For example, research on a vaccine for the Hantavirus Pulmonary Syndrome (HPS) transmitted by rodents in Southern Chile and Argentina, one of the most sparsely populated regions on Earth, has been halted due to lack of funds even though the disease proves to be fatal for around 30-40% of those who contract it.

Additionally, at the deployment stage, it will be important to ask who is most likely to be able to access the new treatment, drug, or procedure.

Are there any barriers to access for protected or other groups?

Statistical Fairness

Because this module concerns data-driven technologies, especially those that can be described as "algorithmic" or "autonomous", statistical fairness is a vital perspective to adopt. This perspective focuses on the statistical properties of the data-driven technology in question, including properties of the data used to develop the system, the metrics chosen to evaluate its performance, as well as formal ways of representing the sociocultural context in which the technology is deployed.

Addressing questions about statistical fairness typically requires high levels of technical expertise and familiarity with up-to-date statistical techniques and concepts.

Statistical fairness in healthcare

Say your team is developing a new ML model that helps diagnose a novel disease whose symptoms do not become apparent immediately.

When training the model, some of the questions your team will need to think of in terms of statistical fairness are:

- Are the classes in the training set balanced? As we will see, this is often not the case in healthcare data. So if they are not, how will your team address this issue?

- Are there any relevant biases in your training data?

- Which metrics will your team use to evaluate model performance?

- Does the model perform similarly across different subgroups?

- How will you decide what to do when inevitable trade-offs come up, such as the trade-off between minimising false negatives and minimising false positives?
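To make the questions about class balance, subgroup performance, and error trade-offs a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the toy records, the subgroup labels `A` and `B`, and the helper names `class_balance` and `error_rates_by_group` are hypothetical, and a real project would work with its own data and most likely an established library.

```python
from collections import Counter

# Hypothetical toy records: (subgroup, true_label, predicted_label),
# where 1 means "has the disease" and 0 means "does not".
records = [
    ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 0, 0), ("A", 0, 1),
    ("B", 1, 0), ("B", 1, 0), ("B", 0, 0), ("B", 0, 0), ("B", 0, 0),
]


def class_balance(rows):
    """Return the share of each true label in the data."""
    counts = Counter(true for _, true, _ in rows)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def error_rates_by_group(rows):
    """Return false negative and false positive rates for each subgroup."""
    rates = {}
    for group in sorted({g for g, _, _ in rows}):
        group_rows = [(t, p) for g, t, p in rows if g == group]
        positives = [p for t, p in group_rows if t == 1]
        negatives = [p for t, p in group_rows if t == 0]
        rates[group] = {
            # Share of people with the disease whom the model missed.
            "fnr": positives.count(0) / len(positives) if positives else None,
            # Share of people without the disease whom the model flagged.
            "fpr": negatives.count(1) / len(negatives) if negatives else None,
        }
    return rates


balance = class_balance(records)
group_rates = error_rates_by_group(records)
print(balance)
print(group_rates)
```

In this toy data the model is correct for three of the five people in each subgroup, so overall accuracy looks identical. The error rates tell a different story: every person with the disease in subgroup B is missed, while subgroup A carries all of the false positives. Which of these errors matters more is not a statistical question alone.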

The topic of statistical fairness can quickly get quite technical.

If you are feeling confused, do not worry.

We will focus on statistical fairness in the context of healthcare in the [third section](rri-203-3.md) of our module.

These perspectives will form the basis of the remaining sections in this module, with a final section on the different biases that may arise during the design, development, and deployment of AI systems and that can negatively affect a system's fairness.

Conceptual Matters (2): Equality and Equity

Before we end this section, we should also address another (smaller) conceptual distinction: the difference between equality and equity.

Fairness is often spoken of in connection with these two neighbouring concepts. And, while related, they are not synonymous. As we will see, in some cases equality or equity may not even be desirable goals to pursue in the context of a particular project. And, if equality were our only goal, we would not be as interested in fair machine learning and AI, because we would be able to use simpler algorithms that just automate our chosen procedural rules and treat everyone the same.

Equal Rights

While moral and legal concepts, such as universal human rights, ascribe some *fundamental notion* of equality to all people, at a practical level the idea of equality rarely goes very far, because people differ from one another in a whole variety of ways.

As such, it is often not a good idea to treat people *equally* except in rare cases (e.g. sports or talent competitions).

This is why we often speak of "equal opportunity" rather than "equal outcomes".

For now, let's put these issues aside and just define the two concepts as follows:

- Equality is when all people are treated the same, regardless of any relevant differences in characteristics (e.g. age, sex, religious beliefs).

- Equity is when people are treated differently based on what they deserve, which will vary between contexts.

Both require us to define key metrics.

- Equality requires us to define what counts as "the same" in terms of procedural rules or decisions.

- Equity requires us to determine how to measure what people "deserve".

And, both decisions connect us back to our three procedural questions from earlier (e.g. who gets to decide on what people deserve), as well as the possible trade-offs and comparisons between relevant values (e.g. whether all stakeholders agree about whether a person is deserving of a particular outcome, and how desirable the outcome may be).
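As a rough illustration of the two definitions above, the following Python sketch splits a fixed number of counselling hours in two ways. It rests on loudly stated assumptions: the function names, the patients, and the numeric "need scores" are hypothetical, and using a need score as a stand-in for what people "deserve" is only one of many possible choices.

```python
def equal_allocation(total_hours, people):
    """Equality: everyone receives the same share, whatever their need."""
    share = total_hours / len(people)
    return {name: share for name in people}


def need_based_allocation(total_hours, need_scores):
    """One possible equity rule: shares proportional to a need score."""
    total_need = sum(need_scores.values())
    return {name: total_hours * score / total_need
            for name, score in need_scores.items()}


# Hypothetical need scores; deciding how to measure these is the hard part.
need_scores = {"patient_1": 1, "patient_2": 3, "patient_3": 4}

equal = equal_allocation(40, need_scores)
by_need = need_based_allocation(40, need_scores)
print(equal)
print(by_need)
```

The arithmetic is trivial in both cases. The difficult decisions sit outside the code: who assigns the need scores, on what basis, and whether proportionality is the right rule at all.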

If you are feeling a bit overwhelmed by all of this, please don't worry. Understanding fairness is challenging, as it requires a deep understanding of a wide network of concepts and a practical grasp of how to move around this network in a dynamic but purposeful way. This is where our perspectives will come in handy, as they will help us focus on whichever part of the network is most relevant or salient to our concerns. To help you develop this ability, we will return to these concepts often throughout this module and anchor them in concrete and illustrative examples. Doing so will allow you to build up a more intuitive understanding of the concepts and how they relate to each other.

In the next section we are going to start to build up our conceptual vocabulary by introducing the first perspective: sociocultural fairness.

[^1]: Sen, A. (2009). The idea of justice. Penguin, United Kingdom.

[^taboo]: This example is taken from the following article: Waytz, A. (2010, March 9). The psychology of the taboo trade-off. Scientific American. https://www.scientificamerican.com/article/psychology-of-taboo-tradeoff/

[^tetlock]: Fiske, A. P., & Tetlock, P. E. (1997). Taboo trade-offs: Reactions to transactions that transgress the spheres of justice. Political Psychology, 18(2), 255-297. https://doi.org/10.1111/0162-895X.00058