What is Explainability?

When digital artist Jason M. Allen of Pueblo West, Colorado, created the image 'Théâtre D’opéra Spatial' and entered it into the Colorado State Fair's annual art competition, he caused quite a controversy. The admittedly captivating image was, in one sense of the term, "created" by Mr. Allen, but not with the traditional tools of a digital artist (e.g. Photoshop, Illustrator, or a drawing tablet). Instead, all it took for Mr. Allen to create this image was text prompts used as input to a generative AI model known as Midjourney.

Like its messier, more organic counterpart in traditional painting and drawing, digital art typically requires hours of painstaking work. But AI images like those generated by Midjourney can be created in a matter of seconds, using carefully chosen prompts such as the following:

/imagine prompt: unexplainable AI, surrealism style

The results are often stunning, as is the case with 'Théâtre D’opéra Spatial'. In other cases, however, it's clear from minor details that the AI lacks any real understanding of certain concepts or objects, as can be seen in the image generated by the above prompt:

When Mr. Allen entered his image into the competition, which he ended up winning, the public response was mixed. Notably, other artists whose livelihoods and welfare depend on the public valuing and appreciating the fruits of their labour were anxious and angry about the widespread adoption of AI-generated art. Many decried the fact that no real skill or effort was required to create such images, and that the widespread use of AI image generators would in turn devalue both human labour and an important source of aesthetic value in human society.

You may have an opinion on this controversy, but this module is not about digital art, the value of AI art, or whether such images are in fact "art". Rather, it is about explainability of data-driven technologies.

So, what does an AI-generated image have to do with explainability? Simply put, no one can fully explain how these systems produce the particular images they do. Like other forms of generative AI, such as large language models, they are a prime example of black box systems.

You may not think that the ability to explain how such a system produces images matters much from a societal perspective, since the harms these models can cause do not arise from the fact that they are unexplainable. Or, you may think that the value of generative AI in creative sectors can grow regardless of a model's transparency or interpretability.

In both cases, a plausible argument could be made for either stance.

But, as we will see over the course of this module, many use cases of such systems, and of even simpler algorithmic tools, depend crucially on properties such as transparency, interpretability, and accessible explanations. This module is, therefore, about understanding what these properties mean and require of us, and when and why they are important.

The Scope of Explainability

A lot of research has already been published on the concept of 'explainability' and neighbouring concepts (e.g. model interpretability, project transparency). Some research seeks to introduce practical methods and techniques for making predictive models more interpretable, whereas other research seeks to clarify the differences between the related concepts. Because this module is focused on understanding why explainability matters for responsible research and innovation, we will explore both areas. However, there are two important caveats here:

- This is not a module to teach data scientists or machine learning engineers how to use or implement existing methods or techniques.

- This module aims to be consistent with widely agreed uses of concepts and terminology, but also has its own unique perspective on the topic.

On the second point, the first thing to note is that 'explainability' is employed with the broadest scope of any of the concepts we discuss and, as such, subsumes many of the neighbouring concepts. For instance, 'interpretability' is necessary for 'explainability', but not sufficient. Or, to put it another way, 'explainability' is our catch-all term for many of the related and neighbouring concepts. Therefore, it makes sense to start the module by explaining what we mean by 'explainability'.

What is Explainability?

Before we discuss explainability, let's look at a closely related concept, 'interpretability'. Following Miller (2019), let's define 'interpretability' as follows:

Interpretability is the degree to which a human can understand the cause of a decision.¹

In the context of data-driven technologies, interpretability is the degree to which a human can understand the cause of an output produced by a model: for example, why a predictive model returned a particular prediction, or why a generative AI model produced a particular image.

As a property of a model or system, interpretability comes in degrees, and a model is often more or less interpretable depending on factors such as 'who is doing the interpreting' and 'what is being interpreted'.

We will look at these factors in more detail later in the module.

Interpreting Images

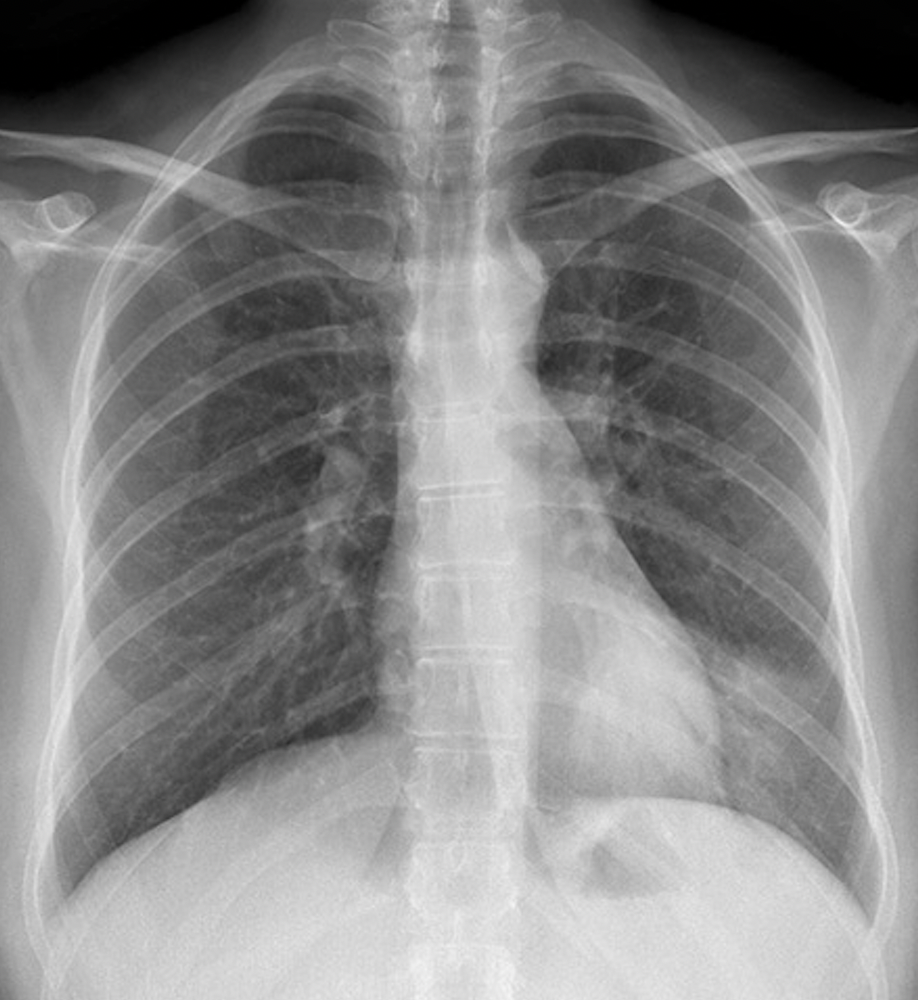

To give two examples of the above aspects of interpretability, ask yourself if you can interpret the meaning behind the following images:

Unless you are a chess player or a radiologist, you will not be able to interpret the significance of the patterns in these images. And, depending on how complex the chess position or medical condition is, it may be the case that only highly experienced chess players or radiologists could interpret similar images.

Understanding the cause of a decision, however, is no guarantee that the decision can be explained in an appropriate manner, nor that the explanation would be deemed acceptable by its recipient.

Consider the following example. You are asked to explain how you made a highly accurate prediction that your partner would return home from work at a very specific time one day. If you responded, "They messaged me as they left the office," this would probably suffice as what we can call a 'folk psychological explanation', that is, an explanation provided in everyday discourse. Furthermore, most people would be able to fill in the implicit background to this explanation: your partner follows the same route home each day and, traffic permitting, the journey takes 25 minutes.
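To make this concrete, the partner example can be written as a fully transparent prediction rule. The sketch below assumes, as the text does, a fixed route and a 25-minute journey; the function name `predict_arrival` and the departure time are hypothetical illustrations. Because the whole rule is a single addition, the prediction and its folk explanation can be returned together.

```python
from datetime import datetime, timedelta

# Assumed typical journey time, taken from the example in the text.
JOURNEY_TIME = timedelta(minutes=25)


def predict_arrival(left_office_at: datetime) -> tuple[datetime, str]:
    """Hypothetical transparent predictor: departure time plus a fixed journey time.

    Returns the prediction alongside a plain-language explanation, which is
    easy here because the entire decision rule is one addition.
    """
    arrival = left_office_at + JOURNEY_TIME
    explanation = (
        f"They messaged me when they left at {left_office_at:%H:%M}, "
        f"and the usual route takes {JOURNEY_TIME.seconds // 60} minutes, "
        f"so I expected them at {arrival:%H:%M}."
    )
    return arrival, explanation


if __name__ == "__main__":
    arrival, why = predict_arrival(datetime(2024, 1, 15, 17, 40))
    print(arrival.strftime("%H:%M"))  # 18:05
    print(why)
```

Contrast this with a system whose output arrives as a bare notification: its prediction may be just as accurate, but there is no comparably short rule to hand over with it.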

But now, let's assume you are asked to explain how you were able to predict the behaviour of a complex, dynamic system, such as the local weather over the next 12 hours. Perhaps in this scenario you are a data scientist working for a national meteorological centre that is using data-driven technologies to improve forecasting. A government representative attending a seminar is trying to determine whether to invest central funds in improving the techniques you have been trialling. This time around, an explanation such as "our system provided me with a notification" is not going to cut it.

These two examples highlight an important point about explanations: they are situated within a specific context that has its own expectations and norms for what constitutes a valid or acceptable explanation. As such, we can define explainability as follows:

"The degree to which a system or set of tools supports a person's ability to explain and communicate the behaviour of the system or the processes of designing, developing, and deploying the system within a particular context."

Defining explainability in this way helps us draw attention to the fact that it will vary depending on the sociocultural context in which it is being assessed. While this context sensitivity also holds, to some extent, for interpretability, the definition of explainability places greater emphasis on the communicability of reasons. To see why, let's look at one reason why being able to explain the behaviour of a system, or the processes by which it produces an output, is important.

Reliable Predictions and Inferential Reasoning

The philosopher Bertrand Russell offered a good (albeit morbid) illustration of what is known as the 'problem of induction'. Russell's original example involved a chicken, but the story is often retold with a turkey. A turkey that is fed by a farmer every morning for a year comes to believe that it will always be fed by the farmer. It's a smart turkey! Each morning is a new observation (or data point) that confirms the turkey's hypothesis. Until, on the morning of Christmas Eve, the turkey eagerly approaches the farmer expecting to be fed, but this time has its neck broken instead!

The problem of induction that is highlighted by this cautionary tale can be summarised as follows:

Cautionary tale

What reasons do we have to believe, and justify, that the future will be like the past? Or, what grounds do we have for justifying the reliability of our predictions?

Being able to deal satisfactorily with the problem of induction matters because we do not want to be in the position of the turkey. We want reliable and valid reasons for trusting the predictions made by our systems, especially those embedded within safety-critical parts of our society and infrastructure.

This is also why we care about more than just the accuracy of our predictions. The turkey's predictions were highly accurate (99.7% over the course of its life), but the one time it was wrong really mattered!
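The 99.7% figure is easy to check, assuming one prediction per morning over a 365-day life in which only the final morning's prediction fails. The sketch below also attaches an entirely hypothetical cost to each outcome, to show how a single rare failure can account for all of the harm even when accuracy looks excellent.

```python
# Each entry records whether the turkey's morning prediction ("I will be fed") held.
# Assumed: one prediction per day for 365 days, with only the last one failing.
outcomes = [True] * 364 + [False]

accuracy = sum(outcomes) / len(outcomes)
error_rate = 1 - accuracy
print(f"accuracy:   {accuracy:.1%}")    # accuracy:   99.7%
print(f"error rate: {error_rate:.1%}")  # error rate: 0.3%

# Hypothetical costs, chosen only to illustrate the point: a correct prediction
# costs nothing, while the single wrong one is catastrophic.
COST_IF_CORRECT = 0.0
COST_IF_WRONG = 1_000_000.0
costs = [COST_IF_CORRECT if held else COST_IF_WRONG for held in outcomes]

# Fraction of the total cost caused by the wrong predictions.
share_from_errors = sum(c for c, held in zip(costs, outcomes) if not held) / sum(costs)
print(f"share of cost from errors: {share_from_errors:.0%}")  # share of cost from errors: 100%
```

Accuracy treats every prediction as equally important; weighting outcomes by their consequences makes visible what the single error actually cost.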

Obviously, not all predictions carry the same risk to our mortality. When we predicted the time our partner would arrive home, for example, we all intuitively understood the inherent uncertainty in inferring from past observations: traffic is unreliable, and rush-hour journeys can vary widely. In such cases, we are happy to accept both a high level of variance in our predictions and a corresponding folk explanation that we can provide to others. But where the consequences of unreliable or false predictions are more severe, it is important that we can provide others with justifiable assurances about the grounds for trusting the behaviour and processes of a system.
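One simple way to acknowledge this kind of uncertainty is to report a spread alongside the point prediction. The sketch below assumes a small, made-up sample of past journey times in minutes (illustrative numbers, not data) and uses Python's standard `statistics` module; a one-standard-deviation range is just one rough choice of "typical range".

```python
import statistics

# Hypothetical past journey times in minutes (illustrative only).
journey_minutes = [22, 24, 25, 25, 26, 28, 31, 38, 45]

mean = statistics.mean(journey_minutes)
spread = statistics.stdev(journey_minutes)  # sample standard deviation

# A rough "typical range": one standard deviation either side of the mean.
low, high = mean - spread, mean + spread
print(f"expect about {mean:.0f} min, typically {low:.0f}-{high:.0f} min")
```

For a low-stakes prediction like this, a wide range paired with a folk explanation ("traffic varies") is usually acceptable; the same spread would be far harder to justify in a safety-critical setting.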

In this regard, explainability is about ensuring that we have justifiable reasons and evidence for why the predictions and behaviour of a model or system are trustworthy and valid. Understanding this communicative and social perspective also helps us appreciate why explainability is so important in safety-critical domains, as well as in areas like criminal justice, where false predictions carry high costs.

If we don't have trustworthy reasons for believing that a model's predictions are reliable and valid, we will not want to deploy it in a context like healthcare, where people's well-being depends on high levels of accuracy or low levels of uncertainty.

So, now that we have a grasp of what explainability is and why it matters, what do we need to do to achieve it?

Factors that support explanations

Over the course of this module, we will look at the following three factors in more detail. For now, just consider how each is conceptually distinct from explainability, yet also supports it as a goal:

- Transparent and accountable processes of project governance that help explain and justify the actions and decisions undertaken throughout a project's lifecycle

- Interpretable models used as components within encompassing sociotechnical systems (e.g. AI systems)

- An awareness of the sociocultural context in which the explanation is required, with special attention to potential communication barriers and an emphasis on building explanations suited to the relevant stakeholders.

¹ Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. *Artificial Intelligence*, 267, 1–38. https://doi.org/10.1016/j.artint.2018.07.007