Conclusion

Co-Creating a Culture of Trust

Uncertainty breeds distrust.

When the consequences of potential actions or interventions are hard to identify or evaluate, inaction or inertia can follow. Such uncertainty and deliberative inertia are only exacerbated in the context of mental health (e.g. the challenges faced by those with depression or anxiety disorders, such as catastrophising, when attempting to evaluate actions).

As we have seen over the course of this report, vague privacy policies, poorly specified objectives, pervasive and invasive data extraction, and dubious claims about the user safety and clinical efficiency of services are all sources of uncertainty.

This report has laid out a methodological proposal for how we can begin to address the current culture of distrust that casts a shadow over the digital mental healthcare landscape. While we believe that the methodology and recommendations will support this goal, and have presented tentative evidence to justify this belief, the development of a trustworthy ecosystem of digital mental healthcare requires a more collaborative effort.

Therefore, in addition to our methodological proposal of trustworthy assurance and the initial argument patterns, we have also made a series of supporting recommendations (summarised in the Executive Summary). But what comes next?

Next Steps

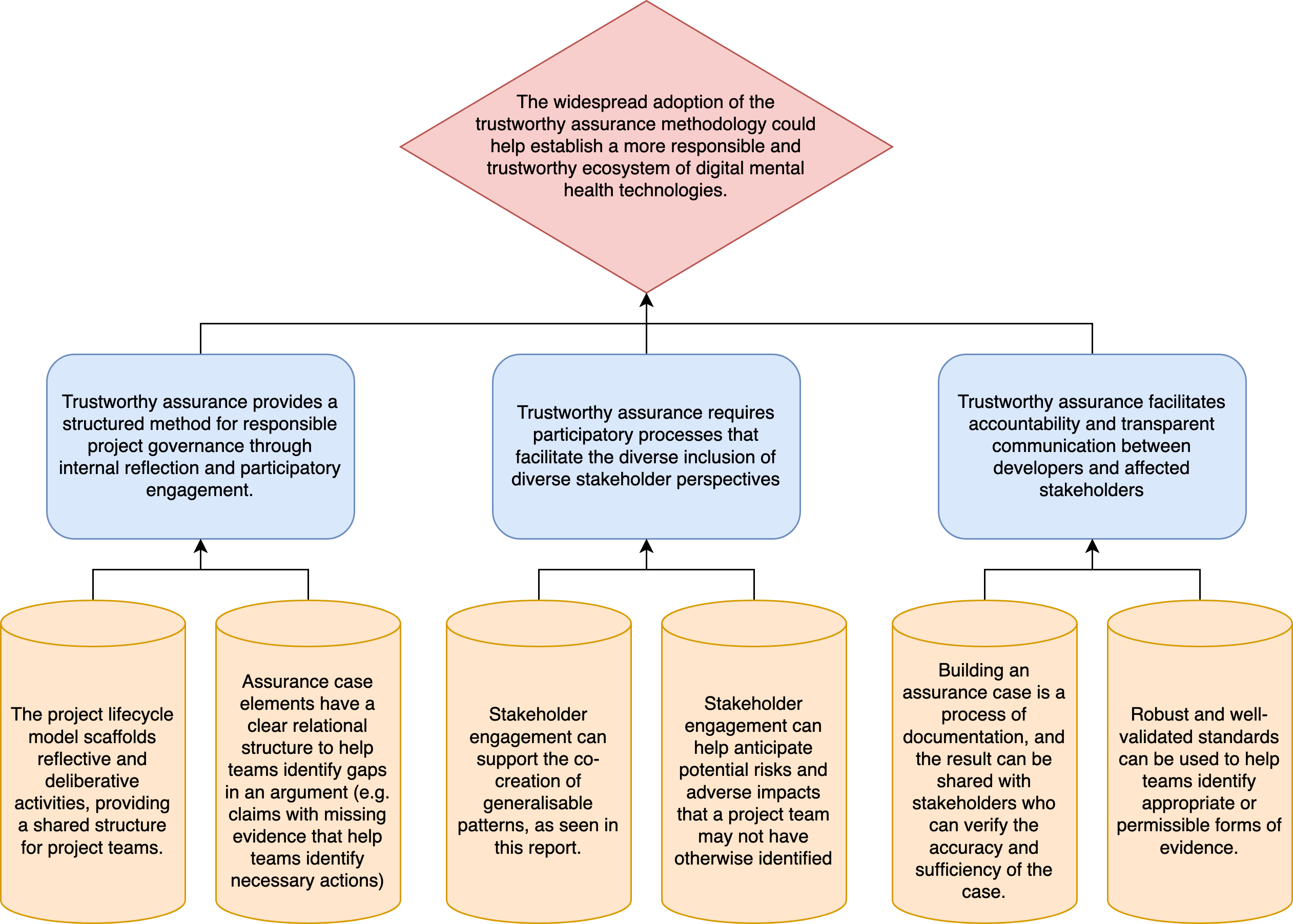

In addition to the individual recommendations presented in the previous chapters, this report can also be viewed as a general recommendation itself—one that calls for the more widespread adoption of the trustworthy assurance methodology. We can even formulate this recommendation as an argument in a pseudo assurance case:

Ultimately, the veracity of the goal claim depends on whether and how the methodology is adopted. This report is not a user guide, however, so more work is needed to make adoption by organisations as straightforward as possible (e.g. through alignment with existing regulation and current practices, including complementary quality assurance procedures).

A key next step will be to develop user guidance that can help with this objective. This work has already commenced, and we currently have (a) a full-length article that goes into further detail about the methodology in relation to domain-general ethical principles [1], and (b) a prototype platform that can enable the production of assurance cases [2]. However, these proposals have not yet been connected directly with the more formal work undertaken by those working in safety assurance. This is primarily because we endeavoured to make the trustworthy assurance methodology simple and accessible for the purpose of our stakeholder engagement. Even so, the adoption of the methodology by developers and engineers would likely benefit from closer integration with standardisation efforts, such as the Goal Structuring Notation (GSN) and the GSN Standard Working Group.

These efforts will need to remain receptive to ongoing developments in this domain, whether technological (e.g. development of new computational techniques or devices), legislative (e.g. reforms to UK legislation such as the Draft Mental Health Bill, 2022), regulatory (e.g. the report on the Public Sector Equality Duty by the Equality and Human Rights Commission, the formation of the Multi Agency Advice Service, and the proposal of the Digital Technology Assessment Criteria (DTAC)), or societal (e.g. changing perceptions or attitudes of users towards mental health and well-being services, including data-driven technologies).

We hope that the proposal and recommendations set out in this report offer some clarity, structure, and positive direction to help navigate this complex (and multitudinous) space. More importantly, we hope that they can (indirectly) improve mental health services for those who need them.

[1] Burr, C., and Leslie, D. (2022). Ethical assurance: a practical approach to the responsible design, development, and deployment of data-driven technologies. AI Ethics. https://doi.org/10.1007/s43681-022-00178-0

[2] Details of the platform and its code are available through the following GitHub repository, and we welcome contributions to its ongoing development from any open-source developers: https://github.com/alan-turing-institute/AssurancePlatform