Quickstart

AutoEmulate’s goal is to make it easy to create an emulator for your simulation. Here’s the basic workflow:

import numpy as np

import random

import torch

from autoemulate.compare import AutoEmulate

from autoemulate.experimental_design import LatinHypercube

from autoemulate.simulations.projectile import simulate_projectile

seed = 43

np.random.seed(seed)

random.seed(seed)

_ = torch.manual_seed(seed)

Design of Experiments

Before we build an emulator or surrogate model, we need a set of input/output pairs from the simulation. Choosing the inputs at which to run the simulation is called the Design of Experiments (DoE). It is currently not a key part of AutoEmulate, as this step is tricky to automate and, for expensive simulations, will run on more complex compute infrastructure. There are many sampling techniques; here we use Latin Hypercube Sampling.

Below, simulate_projectile is a simulation of projectile motion with drag. It takes two inputs, the drag coefficient (on a log scale) and the velocity, and outputs the distance the projectile travelled. We sample 100 sets of inputs X using a Latin Hypercube Sampler and run the simulator for those inputs to get the outputs y.

# sample from a simulation

lhd = LatinHypercube([(-5., 1.), (0., 1000.)]) # (lower, upper) bounds for each parameter

X = lhd.sample(100)

y = np.array([simulate_projectile(x) for x in X])

X.shape, y.shape

((100, 2), (100,))
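To make the idea behind Latin Hypercube Sampling concrete, here is a minimal NumPy sketch. It is an illustration only and is not AutoEmulate's LatinHypercube implementation; the helper names latin_hypercube_unit and scale_to_bounds are hypothetical. Each input range is divided into as many equal-width strata as there are samples, and every stratum is used exactly once per dimension.

```python
import numpy as np


def latin_hypercube_unit(n_samples, n_dims, rng):
    """Return an (n_samples, n_dims) Latin hypercube sample on the unit cube."""
    # For each dimension, assign the samples to the n_samples equal-width
    # strata in a random order, so every stratum is used exactly once.
    strata = np.column_stack(
        [rng.permutation(n_samples) for _ in range(n_dims)]
    )
    # Place each point uniformly at random inside its stratum.
    return (strata + rng.random((n_samples, n_dims))) / n_samples


def scale_to_bounds(unit_sample, bounds):
    """Map a unit-cube sample onto (lower, upper) bounds per dimension."""
    bounds = np.asarray(bounds, dtype=float)
    lower, upper = bounds[:, 0], bounds[:, 1]
    return lower + unit_sample * (upper - lower)


rng = np.random.default_rng(43)
bounds = [(-5.0, 1.0), (0.0, 1000.0)]  # same (lower, upper) bounds as above
unit = latin_hypercube_unit(100, 2, rng)
X_sketch = scale_to_bounds(unit, bounds)
print(X_sketch.shape)
```

Compared with plain uniform random sampling, this stratification spreads the points evenly along every input axis, which helps when each simulation run is expensive.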

Comparing emulators

This is the core of AutoEmulate. With a set of inputs / outputs, we can run a full machine learning pipeline, including data processing, model fitting, model selection and potentially hyperparameter optimisation in just a few lines of code. First, we initialise an AutoEmulate object. Then, we run setup(X, y), providing the simulation inputs and outputs. Lastly, compare() will fit a range of different models to the data and evaluate them using cross-validation, returning the best emulator.

# compare emulator models

ae = AutoEmulate()

ae.setup(X, y)

ae.compare()

AutoEmulate is set up with the following settings:

| | Values |
|---|---|
| Simulation input shape (X) | (100, 2) |
| Simulation output shape (y) | (100,) |
| Proportion of data for testing (test_set_size) | 0.2 |
| Scale input data (scale) | True |
| Scaler (scaler) | StandardScaler |
| Scale output data (scale_output) | True |
| Scaler output (scaler_output) | StandardScaler |
| Do hyperparameter search (param_search) | False |
| Reduce input dimensionality (reduce_dim) | False |
| Reduce output dimensionality (reduce_dim_output) | False |
| Cross validator (cross_validator) | KFold |
| Parallel jobs (n_jobs) | 1 |

InputOutputPipeline(regressor=Pipeline(steps=[('scaler', StandardScaler()),

('model', GaussianProcess())]),

transformer=Pipeline(steps=[('scaler_output',

StandardScaler())]))

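The settings above show that both inputs and outputs are scaled with a StandardScaler before the model is fitted. The following NumPy sketch shows what that means conceptually: standardise X and y, fit in the scaled space, then map predictions back to the original units. It is an assumption-laden illustration, not AutoEmulate's pipeline; the toy data, the linear least-squares model and the predict helper are all hypothetical stand-ins.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical toy data on the same input ranges as the projectile example.
X_toy = rng.uniform([-5.0, 0.0], [1.0, 1000.0], size=(50, 2))
y_toy = 3.0 * X_toy[:, 0] + 0.5 * X_toy[:, 1]  # stand-in for a simulator

# "Fit" the scalers: column-wise mean and (population) standard deviation.
x_mean, x_std = X_toy.mean(axis=0), X_toy.std(axis=0)
y_mean, y_std = y_toy.mean(), y_toy.std()
X_scaled = (X_toy - x_mean) / x_std
y_scaled = (y_toy - y_mean) / y_std

# Fit a toy model (linear least squares with intercept) in the scaled space.
A = np.column_stack([np.ones(len(X_scaled)), X_scaled])
coef, *_ = np.linalg.lstsq(A, y_scaled, rcond=None)


def predict(X_new):
    """Scale new inputs, predict, and undo the output scaling."""
    X_new_scaled = (np.asarray(X_new, dtype=float) - x_mean) / x_std
    A_new = np.column_stack([np.ones(len(X_new_scaled)), X_new_scaled])
    return (A_new @ coef) * y_std + y_mean


print(predict(X_toy[:3]))
print(y_toy[:3])
```

In a real workflow the scaler statistics would be computed on the training data only, so that no information from the test set leaks into the fit.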

We can look at the cross-validation results for each model. The table below has one row per model and fold, sorted by r2 in descending order:

ae.summarise_cv()

| | preprocessing | model | short | fold | rmse | r2 |
|---|---|---|---|---|---|---|
| 0 | None | GaussianProcess | gp | 1 | 215.710659 | 0.999316 |
| 1 | None | RadialBasisFunctions | rbf | 3 | 156.417924 | 0.999051 |
| 2 | None | GaussianProcess | gp | 3 | 264.321808 | 0.997291 |
| 3 | None | GaussianProcess | gp | 4 | 408.727951 | 0.995065 |
| 4 | None | GaussianProcess | gp | 2 | 125.034775 | 0.992672 |
| 5 | None | RadialBasisFunctions | rbf | 1 | 732.435825 | 0.992109 |
| 6 | None | RadialBasisFunctions | rbf | 2 | 136.440735 | 0.991275 |
| 7 | None | RadialBasisFunctions | rbf | 4 | 711.761396 | 0.985034 |
| 8 | None | GaussianProcess | gp | 0 | 1780.417640 | 0.981903 |
| 9 | None | GradientBoosting | gb | 2 | 273.836699 | 0.964854 |
| 10 | None | SupportVectorMachines | svm | 4 | 1181.608512 | 0.958753 |
| 11 | None | SupportVectorMachines | svm | 3 | 1337.447973 | 0.930648 |
| 12 | None | RadialBasisFunctions | rbf | 0 | 4086.448169 | 0.904663 |
| 13 | None | SecondOrderPolynomial | sop | 1 | 2549.663199 | 0.904382 |
| 14 | None | GradientBoosting | gb | 4 | 1892.265092 | 0.894219 |
| 15 | None | GradientBoosting | gb | 1 | 2938.313071 | 0.873010 |
| 16 | None | RandomForest | rf | 1 | 3410.741925 | 0.828891 |
| 17 | None | RandomForest | rf | 2 | 626.749121 | 0.815887 |
| 18 | None | SupportVectorMachines | svm | 1 | 3575.251486 | 0.811987 |
| 19 | None | SecondOrderPolynomial | sop | 4 | 2824.670426 | 0.764289 |
| 20 | None | SupportVectorMachines | svm | 2 | 773.283978 | 0.719732 |
| 21 | None | GradientBoosting | gb | 0 | 7165.248828 | 0.706889 |
| 22 | None | LightGBM | lgbm | 1 | 4540.593827 | 0.696751 |
| 23 | None | SecondOrderPolynomial | sop | 0 | 7783.304650 | 0.654142 |
| 24 | None | RandomForest | rf | 0 | 7958.397214 | 0.638406 |
| 25 | None | SecondOrderPolynomial | sop | 3 | 3290.505481 | 0.580213 |
| 26 | None | SupportVectorMachines | svm | 0 | 9131.334752 | 0.523966 |
| 27 | None | LightGBM | lgbm | 4 | 4297.671828 | 0.454353 |
| 28 | None | LightGBM | lgbm | 3 | 3950.981370 | 0.394779 |
| 29 | None | LightGBM | lgbm | 0 | 10445.438731 | 0.377093 |
| 30 | None | RandomForest | rf | 4 | 5120.675910 | 0.225361 |
| 31 | None | GradientBoosting | gb | 3 | 4638.631583 | 0.165774 |
| 32 | None | RandomForest | rf | 3 | 4960.919508 | 0.045824 |
| 33 | None | LightGBM | lgbm | 2 | 2476.650706 | -1.874919 |
| 34 | None | SecondOrderPolynomial | sop | 2 | 3057.633480 | -3.381945 |

And create plots comparing the models:

ae.plot_cv()

Using best preprocessing method: None

No preprocessing was applied (using raw target values)
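To help read the rmse and r2 columns of the cross-validation table, here is a minimal NumPy sketch of K-fold cross-validation with both metrics. It is not AutoEmulate's code: the toy data, the linear least-squares "emulator" and the helper names rmse, r2 and kfold_indices are hypothetical, and shuffling the folds is an assumption made for this sketch.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error, in the units of y."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)


def kfold_indices(n_samples, n_splits, rng):
    """Yield (train_idx, test_idx) pairs for shuffled K-fold CV."""
    folds = np.array_split(rng.permutation(n_samples), n_splits)
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, test_idx


rng = np.random.default_rng(0)
X_toy = rng.uniform(-1.0, 1.0, size=(100, 2))
y_toy = X_toy[:, 0] ** 2 + 2.0 * X_toy[:, 1] + rng.normal(0.0, 0.05, 100)

scores = []
for fold, (train_idx, test_idx) in enumerate(kfold_indices(100, 5, rng)):
    # Fit the toy model on the training folds only.
    A_train = np.column_stack([np.ones(len(train_idx)), X_toy[train_idx]])
    coef, *_ = np.linalg.lstsq(A_train, y_toy[train_idx], rcond=None)
    # Score it on the held-out fold.
    A_test = np.column_stack([np.ones(len(test_idx)), X_toy[test_idx]])
    y_pred = A_test @ coef
    scores.append((fold, rmse(y_toy[test_idx], y_pred), r2(y_toy[test_idx], y_pred)))

for fold, fold_rmse, fold_r2 in scores:
    print(f"fold {fold}: rmse={fold_rmse:.3f}, r2={fold_r2:.3f}")
```

With this definition, a model that always predicts the mean of the held-out fold scores r2 = 0, and a model that does worse than that scores below zero. This is how negative r2 values, such as those in the last two rows of the table, can arise on an individual fold.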

Evaluating on the test set

AutoEmulate has already split the data into a training set and a test set. After looking at the cross-validation results, we can retrieve a fitted emulator and evaluate it on the test set. Here the GP predicts well on unseen data, with a test-set r2 of 0.9997.

gp = ae.get_model("GaussianProcess")

ae.evaluate(gp)

| | model | short | preprocessing | rmse | r2 |
|---|---|---|---|---|---|
| 0 | GaussianProcess | gp | None | 100.5854 | 0.9997 |

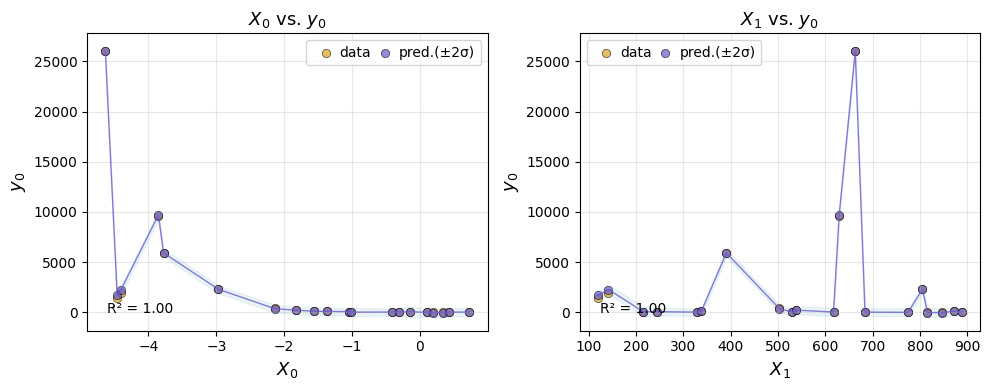

But it’s always useful to plot the predictions too.

ae.plot_eval(gp, input_index=[0, 1])

Refitting the emulator

Before applying the emulator, we refit it on the entire dataset, that is, the training and test sets combined. This is done with the refit() method.

gp_final = ae.refit(gp)
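The evaluate-then-refit pattern can be sketched independently of AutoEmulate. The example below is a hedged illustration with hypothetical toy data and a hypothetical linear least-squares model (fit_linear, predict_linear): hold out 20% of the data, fit on the rest, score on the held-out part, and only then refit on everything.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical toy data standing in for simulation inputs and outputs.
X_toy = rng.uniform(-1.0, 1.0, size=(100, 2))
y_toy = 1.0 + 2.0 * X_toy[:, 0] - 3.0 * X_toy[:, 1] + rng.normal(0.0, 0.1, 100)


def fit_linear(X, y):
    """Least-squares fit with an intercept; returns the coefficients."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_linear(coef, X):
    return np.column_stack([np.ones(len(X)), X]) @ coef


# Hold out 20% of the samples as a test set.
perm = rng.permutation(len(X_toy))
n_test = int(0.2 * len(X_toy))
test_idx, train_idx = perm[:n_test], perm[n_test:]

# Fit on the training set and score on the untouched test set.
coef_train = fit_linear(X_toy[train_idx], y_toy[train_idx])
residuals = y_toy[test_idx] - predict_linear(coef_train, X_toy[test_idx])
test_rmse = float(np.sqrt(np.mean(residuals ** 2)))
print(f"test rmse: {test_rmse:.3f}")

# Once the test score is recorded, refit on all data for the final model.
coef_final = fit_linear(X_toy, y_toy)
print(coef_final)
```

The test score describes the model trained on the training set only. The refitted model has seen every sample, so there is no held-out data left to score it on; the earlier test score serves as the estimate of its performance.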

Predictions

We can use the best model to make predictions for new inputs. Emulators in AutoEmulate are scikit-learn estimators, so we can use the predict method to make predictions.

gp_final.predict(X[:10])

array([ 5.92758299e+03,  8.09264413e+03,  1.64323793e+04,  8.03628410e+03,
        1.02650134e+02, -4.12283436e+00,  5.90682078e+00,  5.95790294e+01,
        1.08375388e+04,  7.03506540e+00])