AI Fairness as a contextual and multi-valent concept

AI Fairness must be treated as a contextual and multi-valent concept that manifests in a variety of ways and in a variety of social, technical, and sociotechnical environments. It must, accordingly, be understood in and differentiated by the specific settings in which it arises. This means that our operating notion of AI fairness should distinguish between the kinds of fairness concerns that surface in

- the context of the social world that precedes and informs approaches to fairness in AI/ML innovation activities—i.e., in general normative and ethical notions of fairness, equity, and social justice, in human rights laws related to equality, non-discrimination, and inclusion, and in anti-discrimination laws and equality statutes;

- the data context—i.e. in criteria of fairness and equity that are applied to responsibly collected and maintained datasets;

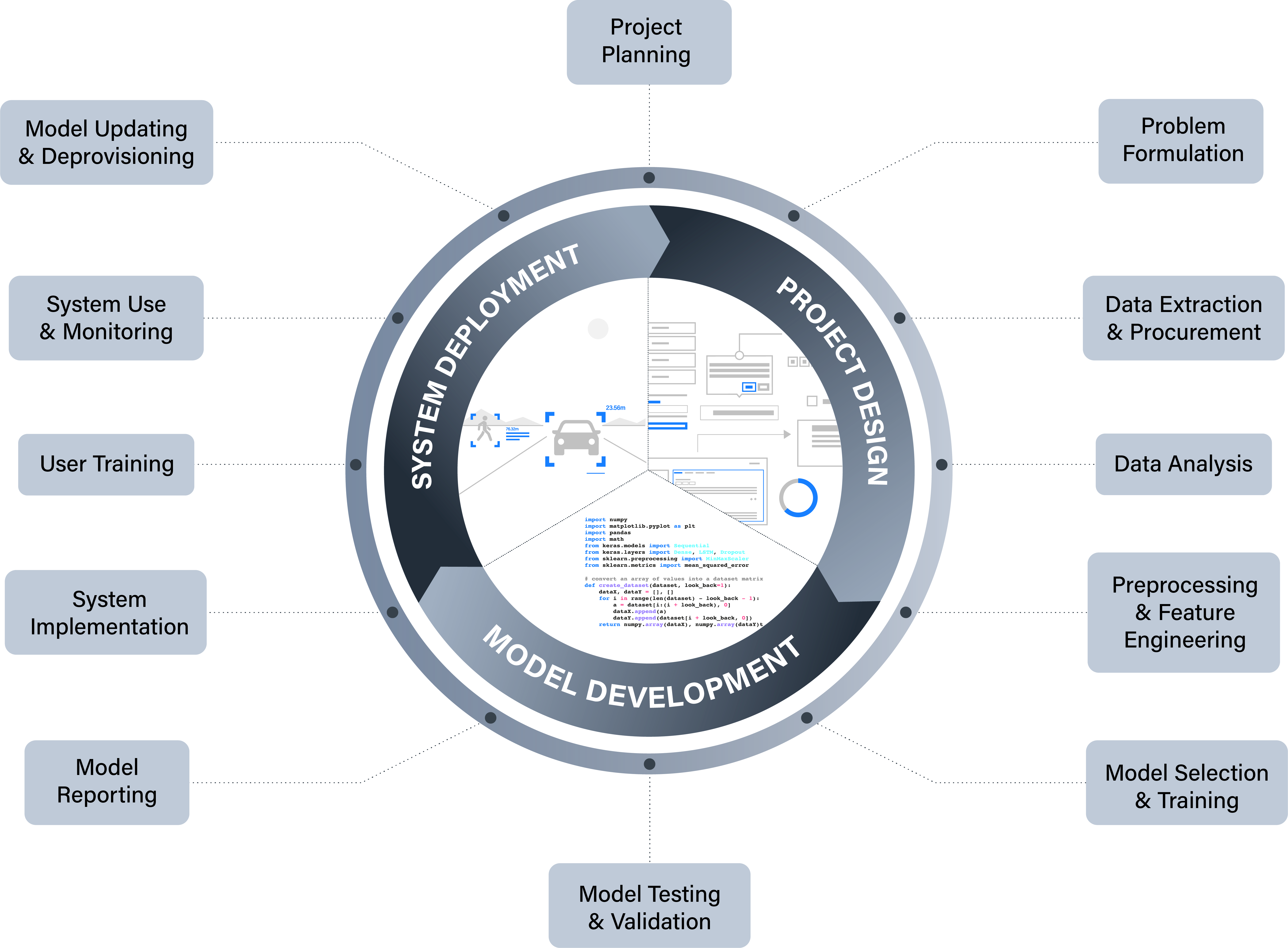

- the design, development, and deployment context—i.e. in criteria of fairness and equity that are applied (a) in setting the research agendas and policy objectives that guide decisions made about where, when, and how to use AI systems and (b) in actual model design and development and system implementation environments as well as in the technical instrumentalisation of formal fairness metrics that allocate error rates and the distribution of outcomes through the retooling of model architectures and parameters; and

- the ecosystem context—i.e. in criteria of fairness and equity that are applied to the wider economic, legal, cultural, and political structures or institutions in which the AI project lifecycle is embedded—and to the policies, norms, and procedures through which these structures and institutions influence actions and decisions throughout the AI innovation ecosystem.

Each of these contexts will generate different sets of fairness concerns. In applying the principle of discriminatory non-harm to the AI project lifecycle we will accordingly break down the principle of fairness into six subcategories that correspond to their relevant practical contexts:

Data Fairness: The AI system is trained and tested on properly representative, fit-for-purpose, relevant, accurately measured, and generalisable datasets.

Application Fairness: The policy objectives and agenda-setting priorities that steer the design, development, and deployment of an AI system and the decisions made about where, when, and how to use it do not create or exacerbate inequity, structural discrimination, or systemic injustices and are acceptable to and line up with the aims, expectations, and sense of justice possessed by impacted people.

Model Design and Development Fairness: The AI system has a model architecture that does not include target variables, features, processes, or analytical structures (correlations, interactions, and inferences) which are discriminatory, unreasonable, morally objectionable, or unjustifiable or that encode social and historical patterns of discrimination.

Metric-Based Fairness: Lawful, clearly defined, and justifiable formal metrics of fairness have been operationalised in the AI system and have been made transparently accessible to relevant stakeholders and impacted people.

System Implementation Fairness: The AI system is deployed by users sufficiently trained to implement it with an appropriate understanding of its limitations and strengths and in a bias-aware manner that gives due regard to the unique circumstances of affected individuals.

Ecosystem Fairness: The wider economic, legal, cultural, and political structures or institutions in which the AI project lifecycle is embedded—and the policies, norms, and procedures through which these structures and institutions influence actions and decisions throughout the AI innovation ecosystem—do not steer AI research and innovation agendas in ways that entrench or amplify asymmetrical and discriminatory power dynamics or that generate inequitable outcomes for protected, marginalised, vulnerable, or disadvantaged social groups.

Data fairness

Responsible data acquisition, handling, and management is a necessary component of algorithmic fairness. If the results of an AI project are generated by a model that has been trained and tested on biased, compromised, or skewed datasets, affected stakeholders will not be adequately protected from discriminatory harm. Data fairness therefore involves the following key elements:

- Representativeness: Depending on the context, either under-representation or over-representation of demographic, marginalised, or legally protected groups in the data sample may lead to the systematic disadvantaging of vulnerable or marginalised stakeholders in the outcomes of the trained model. To avoid such sampling and selection biases, domain expertise is crucial, for it enables the assessment of the fit between the data collected or procured and the underlying population to be modelled.

- Fit-for-Purpose and Sufficiency: An important question to consider in the data collection and procurement process is: Will the amount of data collected be sufficient for the intended purpose of the project? The quantity of data collected or procured has a significant impact on the accuracy and reasonableness of the outputs of a trained model. A data sample not large enough to represent with sufficient richness the significant or qualifying attributes of the members of a population to be classified may lead to unfair outcomes. Insufficient datasets may not equitably reflect the qualities that should rationally be weighed in producing a justified outcome that is consistent with the desired purpose of the AI system. Members of the project team with technical and policy competences should collaborate to determine if the data quantity is, in this respect, sufficient and fit-for-purpose.

- Source Integrity and Measurement Accuracy: Effective bias mitigation begins at the very commencement of data extraction and collection processes. Both the sources and instruments of measurement may introduce discriminatory factors into a dataset. When incorporated as inputs in the training data, biased prior human decisions and judgments—such as prejudiced scoring, ranking, interview data, or evaluation—will become the ‘ground truth’ of the model and replicate the bias in the outputs of the system. In order to secure discriminatory non-harm, you must do your best to make sure your data sample has optimal source integrity. This involves securing or confirming that the data gathering processes involved suitable, reliable, and impartial sources of measurement and sound methods of collection.

- Timeliness and Recency: If your datasets include outdated data, then changes in the underlying data distribution may adversely affect the generalisability of your trained model. Provided these distributional drifts reflect changing social relationships or group dynamics, this loss of accuracy with regard to the actual characteristics of the underlying population may introduce bias into your AI system. In preventing discriminatory outcomes, you should scrutinise the timeliness and recency of all elements of the data that constitute your datasets.

- Relevance, Appropriateness and Domain Knowledge: The understanding and utilisation of the most appropriate sources and types of data are crucial for building a robust and bias-mitigating AI system. Solid domain knowledge of the underlying population distribution and of the predictive or classificatory goal of the project is instrumental for choosing optimally relevant measurement inputs that contribute to the reasonable determination of the defined solution. You should make sure that domain experts collaborate closely with your technical team to assist in the determination of the optimally appropriate categories and sources of measurement.
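
The representativeness element above can be given a first, rough check by comparing each group's share of the data sample with its share of a reference population. The following is a minimal sketch under stated assumptions, not a prescribed method: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and choosing an appropriate reference population remains a matter for domain expertise.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return (sample share - reference share) for each reference group.

    sample_groups: iterable of group labels, one per record (hypothetical data).
    population_shares: dict mapping group label -> expected share of the
        underlying population (an assumption supplied by domain experts).
    """
    counts = Counter(sample_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample is empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Illustrative, made-up figures: group "b" is under-represented in the sample.
sample = ["a"] * 80 + ["b"] * 20
reference = {"a": 0.5, "b": 0.5}
gaps = representation_gaps(sample, reference)
```

A negative gap indicates under-representation relative to the reference and a positive gap indicates over-representation. What size of gap matters depends on the use case.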

To ensure the uptake of best practices for responsible data acquisition, handling, and management across your AI project delivery workflow, you should initiate the creation of a Dataset Factsheet at the alpha stage of your project. This factsheet should be maintained diligently throughout the design and implementation lifecycle in order to secure optimal data quality, deliberate bias-mitigation-aware practices, and optimal auditability. It should include a comprehensive record of data provenance, procurement, pre-processing, lineage, storage, and security as well as qualitative input from team members about determinations made with regard to data representativeness, data sufficiency, source integrity, data timeliness, data relevance, training/testing/validating splits, and unforeseen data issues encountered across the workflow.
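
One possible way to keep such a factsheet in machine-readable form is sketched below. The class name `DatasetFactsheet`, its field names, and the `missing_sections` helper are hypothetical and simply mirror the items listed in the paragraph above. They are not an official template.

```python
from dataclasses import dataclass, field, fields

# Topics for qualitative team input, taken from the list in the text above.
NOTE_TOPICS = (
    "representativeness", "sufficiency", "source_integrity", "timeliness",
    "relevance", "data_splits", "unforeseen_issues",
)


@dataclass
class DatasetFactsheet:
    """Hypothetical record structure for a Dataset Factsheet."""
    provenance: str = ""
    procurement: str = ""
    pre_processing: str = ""
    lineage: str = ""
    storage: str = ""
    security: str = ""
    # Free-text notes from team members, keyed by topic.
    notes: dict = field(default_factory=lambda: {t: [] for t in NOTE_TOPICS})

    def add_note(self, topic, text):
        if topic not in self.notes:
            raise KeyError(f"unknown topic: {topic}")
        self.notes[topic].append(text)

    def missing_sections(self):
        """List record fields and note topics that are still empty."""
        empty_fields = [
            f.name for f in fields(self)
            if f.name != "notes" and not getattr(self, f.name)
        ]
        empty_topics = [t for t, entries in self.notes.items() if not entries]
        return empty_fields + empty_topics


# Made-up example entries.
sheet = DatasetFactsheet(provenance="Hypothetical administrative extract")
sheet.add_note("timeliness", "Records older than five years were reviewed.")
todo = sheet.missing_sections()
```

Listing the empty sections at each project stage is one simple way to make gaps in the record visible during review.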

Application fairness

Fairness considerations should enter into your AI project at the earliest possible stage of horizon scanning, problem selection, and project planning. This is because the overall fairness and equity of an AI application is significantly determined by the objectives, goals, and policy choices that lie behind initial decisions to dedicate time and resources to its design, development, and deployment. For example, the choice made to build a biometric identification system, which uses live facial recognition technology to identify criminal suspects at public events, may be motivated by the objective to increase public safety and security. However, many members of the public may find this use of AI technology unreasonable, disproportionate, and potentially discriminatory. In particular, members of communities historically targeted by disproportionate levels of surveillance in law enforcement contexts may be especially concerned about the potential for abuse and harm. Appropriate fairness and equity considerations should, in this case, occur at the horizon scanning and project planning stage (e.g., as part of a stakeholder impact assessment process that includes engagement with potentially affected individuals and communities). Aligning the policy goals of a project team with the reasonable expectations and potential equity concerns of those affected is a key component of the fairness of the application.

Application fairness, therefore, entails that the policy objectives of an AI project are non-discriminatory and are acceptable to and line up with the aims, expectations, and sense of justice possessed by those affected.[@dobbe2018]-[@eckhouse2018]-[@green2018a]-[@green2018b]-[@mitchell2021]-[@passi2019]

As such, whether the decision to engage in the production and use of an AI technology can be described as “fairness-aware” depends upon ethical and policy considerations that are external and prior to considerations of the technical feasibility of building an accurate and optimally performing system or the practical feasibility of accessing, collecting, or acquiring enough and the right kind of data.

Beyond this priority of assuring the equity and ethical permissibility of policy goals, application fairness requires additional considerations in framing decisions made at the horizon scanning and project scoping or planning stage:

1. Equity considerations surrounding the real-world context of the policy issue to be solved: When your project team is assessing the fairness of using an AI solution to address a particular policy issue, it is important to consider how equity considerations extend beyond the statistical and sociotechnical contexts of designing, developing, and deploying the system. Applied concepts of AI fairness and equity should not be treated, in a technology-centred way, as originating exclusively from the design and use of any particular AI system. Nor should they exclusively be treated as abstractions that can be engineered into an AI application through technical or mathematical retooling (e.g., by operationalising formal fairness criteria).[@fazelpour2020]-[@green2020]-[@leslie2020]-[@selbst2019] When designers of algorithmic systems limit their understanding of the scope of ‘fairness’ or ‘equity’ to these two dimensions, it can artificially constrain their perspectives in such a way that they erroneously treat only the patterns of bias and discrimination that arise in AI innovation practices or that can be measured, formalised, or statistically digested as actionable indicators of inequity.

Rather, equity considerations should be grounded in a human-centred approach, which includes reflection on and critical examination of the wider social and economic patterns of disadvantage, injustice, and discrimination that arise in the real-world contexts surrounding the policy issue in question. Such considerations should include an exploration of how such patterns of inequity may lead, on the one hand, to the disparate distribution of the risks and adverse impacts of the AI system or, on the other, to a lack of equitable access to its potential benefits. For instance, while the development of an AI chatbot to replace a human-serviced medical helpline may provide effective healthcare guidance for some, it could have disparate adverse impacts on others, such as vulnerable elderly populations or socioeconomically deprived groups who may face barriers to accessing and using the app. Here, reflection on the real-world contexts surrounding the provision of this type of healthcare support yields a more informed and compassionate awareness of social and economic conditions that could impair the fairness of the application.

2. Equity considerations surrounding the group targeted by the AI innovation intervention: Each AI application that makes predictions about or classifies people targets a specific subset of the wider population to which they belong. For instance, a résumé filtering system that is used to select desirable candidates in a recruitment process will draw from a pool of job applicants that constitute a subgroup within the broader population. Potential equity issues may arise here because the selection of subpopulations sampled by AI applications is non-random. Instead, the sample selection may reflect particular social patterns, structures, and path dependencies that are unfair or discriminatory.[@mitchell2021] In the case of the résumé filtering system, the sample may reflect long-term hiring patterns where a disproportionate number of male job candidates from elite universities (or those with similarly privileged educational backgrounds) have been actively recruited. Such practices have historically excluded people of other gender identities and from other socioeconomic and educational backgrounds. As a result, the pattern of inequity surfaces, in this instance, not within the sampled subset of the population but rather in the way that discriminatory social structures have led to the selection of a certain group of individuals into that subset.[@mehrabi2021]-[@olteanu2019]

3. Equity considerations surrounding the way that the model’s output shapes the range of possible decision-outcomes: AI applications that assist human decision-making shape and delimit the range of possible outcomes for the problem areas they address.[@mitchell2021] For example, a predictive risk model used in children’s social care may generate an output that directly influences the space of choices available to a social worker. Because the model’s target is the identification of at-risk children, it may lead to social care decisions that focus narrowly on whether a child needs to be taken into care. This centring of negative outcomes could restrict the range of viable choices open to the social worker insofar as it de-emphasises the potential availability of other strengths-based approaches (e.g., stewarding positive family functioning through social supports and identifying and promoting protective factors). These alternative decision-making paths could be closed off in the social care environment given how the predictive risk model’s outputs restrictively shape the range of actions that can be taken to address the problem it is being used to inform.

Model design and development fairness

Because human beings have a hand in all stages of the construction of AI systems, fairness-aware design must take precautions across the AI project workflow to prevent bias from having a discriminatory influence:

Design phase

Problem formulation: At the initial stage of problem formulation and outcome definition, technical and non-technical members of your team should work together to translate project goals into measurable targets.[@fazelpour2021]-[@leslie2019]-[@obermeyer2019]-[@obermeyer2021]-[@passi2019] This will involve the use of both domain knowledge and technical understanding to define what is being optimised in a formalisable way and to translate the project’s objective into a target variable or measurable proxy, which operates as a statistically actionable rendering of the defined outcome.

At each of these points, choices must be made about the design of the algorithmic system that may introduce structural biases which ultimately lead to discriminatory harm. Special care must be taken here to identify affected stakeholders and to consider how vulnerable groups might be negatively impacted by the specification of outcome variables and proxies. Attention must also be paid to the question of whether these specifications are reasonable and justifiable given the general purpose of the project and the potential impacts that the outcomes of the system’s use will have on the individuals and communities involved.

These challenges of fairness-aware design at the problem formulation stage show the need for making diversity and inclusive participation a priority from the start of the AI project lifecycle. This involves both the collaboration of the entire team and the attainment of stakeholder input about the acceptability of the project plan. This also entails collaborative deliberation across the project team and beyond about the ethical impacts of the design choices made.

Development phase

Data pre-processing: Human judgement enters into the process of algorithmic system construction at the stage of labelling, annotating, and organising the training data to be utilised in building the model. Choices made about how to classify and structure raw inputs must be taken in a fairness-aware manner with due consideration given to the sensitive social contexts that may introduce bias into such acts of classification. Similar fairness-aware processes should be put in place to review automated or outsourced classifications. Likewise, efforts should be made to attach solid contextual information and ample metadata to the datasets, so that downstream analyses of data processing have access to properties of concern in bias mitigation.

The constructive task of selecting the attributes or features that will serve as input variables for a model requires human decisions to be made about the sorts of information that may or may not be relevant or rationally required to yield an accurate and unbiased classification or prediction. Moreover, the feature engineering tasks of aggregating, extracting, or decomposing attributes from datasets may introduce human appraisals that have biasing effects.

At this stage, human decisions about how to group or disaggregate input features (e.g., how to carve up categories of gender or ethnic groups) or about which input features to exclude altogether (e.g., leaving out deprivation indicators in a predictive model for clinical diagnostics) can have significant downstream influences on the fairness and equity of an AI system. This applies even when algorithmic techniques are employed to extract and engineer features, or support the selection of features (e.g., to optimise predictive power).

For this reason, discrimination awareness should play a large role at this stage of the AI model-building workflow as should domain knowledge and policy expertise. Your team should proceed in the model development stage aware that choices made about grouping or separating and including or excluding features as well as more general judgements about the comprehensiveness or coarseness of the total set of features may have significant consequences for historically marginalised, vulnerable, or protected groups.

Model selection: The model selection stage determines the model type and structure that will be produced in the next stages. In some projects, this will involve the selection of multiple prospective models for the purpose of comparison based on some performance metric, such as accuracy or sensitivity. The set of relevant models is likely to have been highly constrained by many of the issues dealt with in previous stages (e.g., available resources and skills, problem formulation), for instance, where the problem demands a supervised learning algorithm instead of an unsupervised learning algorithm.

Fairness and equity issues can surface in model selection processes in at least two ways: First, they can arise when the choice between algorithms has implications for explainability. For instance, the pool of available options may contain models that perform better but are less interpretable than others. This difference becomes significant when the processing of social or demographic data increases the risk that biases or discriminatory proxies lurk in the algorithmic architecture. Model interpretability can increase the likelihood of detecting and redressing such discriminatory elements.

Second, fairness and equity issues can arise when the choice between algorithms has implications for the differential performance of the final model for subgroups of the population. For instance, where several different learning algorithms are simultaneously trained and tested, one of the resulting models could have the highest overall level of accuracy while, at the same time, being less accurate than others in the way it performs for one or more marginalised sub-groups. In cases like this, technical members of your project team should proceed with attentiveness to mitigating any possible discriminatory effects of choosing one model over another and should consult members of the wider team—and impacted stakeholders, where possible—about the acceptability of any trade-offs between overall model accuracy and differential performance.
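
The second point can be illustrated with a small comparison of overall accuracy against per-group accuracy for two candidate models. This is a minimal sketch on made-up labels and predictions. The function name `accuracy_by_group` and the group labels `"maj"` and `"min"` are hypothetical.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return (overall accuracy, {group: accuracy}) for one model."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    if not y_true:
        raise ValueError("inputs are empty")
    correct, total = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + (t == p)
    overall = sum(correct.values()) / len(y_true)
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Made-up data: eight records from a majority group, two from a minority group.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
groups = ["maj"] * 8 + ["min"] * 2
model_a = [1, 0, 1, 0, 1, 0, 1, 0, 0, 1]  # right on every "maj", wrong on every "min"
model_b = [0, 1, 0, 0, 1, 0, 1, 0, 1, 0]  # weaker on "maj", right on every "min"

overall_a, per_a = accuracy_by_group(y_true, model_a, groups)
overall_b, per_b = accuracy_by_group(y_true, model_b, groups)
```

In this toy case model A has the higher overall accuracy while failing the minority group entirely. Whether such a trade-off is acceptable is a question for the wider team and impacted stakeholders, not something the numbers settle.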

Model training, testing, and validation

The process of tuning hyperparameters, setting metrics, and resampling data at the training, validation, and testing stages also involves human choices that may have fairness and equity consequences in the trained model. For instance, the way your project team determines the training-testing split of the dataset can have a considerable impact on the need for external validation to ensure that the model’s performance “in the wild” meets reasonable expectations. Therefore, your technical team should proceed with an attentiveness to bias risks, and continual iterations of peer review and project team consultation should be encouraged to ensure that choices made in adjusting the dials, parameters, and metrics of the model are in line with bias mitigation and discriminatory non-harm goals.

Evaluating and validating model structures

Design fairness also demands close assessment of the existence in the trained model of lurking or hidden proxies for discriminatory features that may act as significant factors in its output. Including such hidden proxies in the structure of the model may lead to implicit ‘redlining’ (the unfair treatment of a sensitive group on the basis of an unprotected attribute or interaction of attributes that ‘stands in’ for a protected or sensitive one).
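
One simple, partial probe for such proxies is to ask how well a single input feature predicts the protected attribute on its own. The sketch below compares a per-value majority guess with a baseline that always guesses the most common protected value. The function name `proxy_strength` and the example data are hypothetical, and the check covers single categorical features only, so it will not detect proxies that arise from interactions of attributes.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Return (baseline, accuracy) for predicting the protected attribute.

    baseline: accuracy of always guessing the most common protected value.
    accuracy: accuracy of guessing, for each feature value, the protected
        value that most often co-occurs with it.
    """
    if len(feature_values) != len(protected_values) or not feature_values:
        raise ValueError("inputs must be non-empty and of equal length")
    by_value = defaultdict(Counter)
    for f, a in zip(feature_values, protected_values):
        by_value[f][a] += 1
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    accuracy = sum(c.most_common(1)[0][1] for c in by_value.values()) / n
    return baseline, accuracy


# Made-up example: an area code that partly tracks a protected attribute.
area = ["N1", "N1", "N1", "S2", "S2", "S2"]
protected = ["x", "x", "x", "y", "y", "x"]
baseline, accuracy = proxy_strength(area, protected)
```

An accuracy well above the baseline suggests that the feature carries information about the protected attribute and deserves closer scrutiny.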

Designers must additionally scrutinise the moral justifiability of the significant correlations and inferences that are determined by the model’s learning mechanisms themselves, for these correlations and inferences could encode social and historical patterns of discrimination where these are baked into the dataset. In cases of the processing of social or demographic data related to human features, models whose complexity and high dimensionality make them too opaque for human assessors to confirm the discriminatory non-harm of these inferences should be avoided.[@icoturing2020]-[@mitchell2021]-[@rudin2019] In such cases, a different, more transparent and explainable model or portfolio of models should be chosen.

Model structures must also be confirmed to be procedurally fair, in a strict technical sense. This means that any rule or procedure employed in the processing of data by an algorithmic system should be consistently and uniformly applied to every decision subject whose information is being processed by that system. AI project teams should be able to certify that any relevant rule or procedure is applied universally and uniformly to all relevant individuals.

Implementers of the system, in this respect, should be able to show that any model output is replicable when the same rules and procedures are applied to the same inputs. Such a uniformity of the application of rules and procedures secures the equal procedural treatment of decision subjects and precludes any rule-changes in the algorithmic processing targeted at a specific person that may disadvantage that individual vis-à-vis any other. It is important to note that the consistent and uniform application of rules over time holds for deterministic algorithms, whose parameters are static and fixed after model development. Close attention should be paid, in this sense, to the procedural fairness issues that may arise with the use of dynamic learning algorithms. This is because the parameters and inferences of such systems evolve over time and can yield different outputs for the same inputs at different points in the system’s lifespan.

Metric-based fairness

As part of the safeguarding of discriminatory non-harm and diligent fairness and equity considerations, well-informed consideration must be put into how you are going to define and measure the formal metrics of fairness that can be operationalised into the AI system you are developing.

Metric-based fairness involves the mathematical mechanisms that can be incorporated into an AI model to allocate the distribution of outcomes and error rates for relevant subpopulations (e.g., groups with protected or sensitive characteristics). In formulating your approach to metric-based fairness, your project team will be confronting challenging issues like the justifiability of differential treatment based on sensitive or protected attributes, where differential treatment can indicate differences in the distribution of model outputs or the distribution of error rates and performance indicators like precision or sensitivity.

There is a great diversity of beliefs in this area as to what makes the consequences of an algorithmically supported decision allocatively equitable, fair, and just. Different approaches to metric-based fairness—detailed below—stress different principles: some focus on demographic parity, some on individual fairness, others on error rates equitably distributed across subpopulations. Regardless of this diversity of views, your project team must ensure that the choices made regarding formal fairness metrics are lawful and conform to governing equality, non-discrimination, and human rights laws. Where appropriate, relevant experts should be consulted to confirm the legality of such choices.

Your determination of metric-based fairness should heavily depend both on the specific use case being considered and the technical feasibility of incorporating your chosen criteria into the construction of the AI system. (Note that different fairness-aware methods involve different types of technical interventions at the pre-processing, modelling, or post-processing stages of production). Again, this means that determining your fairness definition should be a cooperative and multidisciplinary effort across the project team.

You will find below a summary table of several of the main definitions of metric-based fairness that have been integrated by researchers into formal models as well as a list of current articles and technical resources, which should be consulted to orient your team to the relevant knowledge base. (Note that this is a rapidly developing field, so your technical team should keep updated about further advances.) The first four fairness types fall under the category of group fairness and allow for comparative criteria of non-discrimination to be considered in model construction and evaluation. The final two fairness types focus instead on cases of individual fairness, where context-specific issues of effective bias are considered and assessed at the level of the individual agent.

Take note, though, that these technical approaches have limited scope in terms of the bigger picture issues of application and design fairness that we have already stressed. Moreover, metric-based approaches face other practical and technical barriers. For instance, to carry out group comparisons, formal approaches to fairness require access to data about sensitive/protected attributes as well as accurate demographic information about the underlying population distribution (both of which may often be unavailable or unreliable, and furthermore, the work of identifying sensitive/protected attributes may pose additional risks of bias). Metric-based approaches also face challenges in the way they handle combinations of protected or sensitive characteristics that may amplify discriminatory treatment. These have been referred to as intersectional attributes (e.g. the combination of gender and race characteristics), and they must also be integrated into fairness and equity considerations. Lastly, there are unavoidable trade-offs, inconsistencies, or even incompatibilities, between mathematical definitions of metric-based fairness that must be weighed in determining which of them are best fit for a particular use case. For instance, the desideratum for equalised odds (error rate balance) across subgroups can clash with the desideratum for equalised model calibration (correct predictions of gradated outcomes) or parity of positive predictive values across subgroups.[@chouldechova2017]-[@flores2016]-[@friedler2021]-[@kleinenberg2017]-[@mitchell2021]
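
One way to see why error rate balance and parity of positive predictive values can clash is an identity that follows directly from the definitions of the confusion-matrix rates for a binary classifier. The notation is introduced here for illustration only: $p$ is the base rate of actual positives within a group, $\mathrm{PPV}$ is the positive predictive value, $\mathrm{FPR}$ is the false positive rate, and $\mathrm{FNR}$ is the false negative rate.

$$
\mathrm{FPR} \;=\; \frac{p}{1-p}\cdot\frac{1-\mathrm{PPV}}{\mathrm{PPV}}\cdot\bigl(1-\mathrm{FNR}\bigr)
$$

If two groups have different base rates $p$ but the same $\mathrm{PPV}$, the right-hand side differs between them unless $\mathrm{FNR}$ differs as well. Outside degenerate cases such as a perfect predictor, the two groups therefore cannot have both equal false positive rates and equal false negative rates.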

Selecting fairness metrics

Demographic/statistical parity (group fairness)

An outcome is fair if each group in the selected set receives benefit in equal or similar proportions, i.e. if there is no correlation between a sensitive or protected attribute and the allocative result. [@calders2009]-[@denis2021]-[@dwork2011]-[@feldman2015]-[@zemel2013] This approach is intended to prevent disparate impact, which occurs when the outcome of an algorithmic process disproportionately harms members of disadvantaged or protected groups.
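
As a rough illustration, demographic parity can be checked by comparing the share of favourable predictions each group receives. This is a minimal sketch on made-up data. The function names are hypothetical, and a prediction of `1` is assumed to be the favourable outcome.

```python
def selection_rates(y_pred, groups):
    """Share of favourable predictions (1) received by each group."""
    positives, totals = {}, {}
    for p, g in zip(y_pred, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Made-up predictions for two groups of four people each.
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
rates = selection_rates(y_pred, groups)
gap = demographic_parity_difference(y_pred, groups)
```

Note that this check uses predictions only and ignores the true labels, which is what distinguishes demographic parity from the error-rate-based metrics.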

True positive rate parity (group fairness)

An outcome is fair if the ‘true positive’ rates of an algorithmic prediction or classification are equal across groups.[@zafar2017] This approach is intended to align the goals of bias mitigation and accuracy by ensuring that the accuracy of the model is equivalent between relevant population subgroups. This method is also referred to as ‘equal opportunity’ fairness because it aims to secure equal chances of an advantageous outcome for qualified individuals in a given population regardless of the protected or disadvantaged groups of which they are members.
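
A minimal sketch of this check computes the true positive rate separately for each group, using only the records whose actual label is positive. The function name and the data are hypothetical, and the label `1` is assumed to mark the positive class.

```python
def true_positive_rates(y_true, y_pred, groups):
    """True positive rate per group: TP / (TP + FN).

    Groups with no actual positives are omitted, because the rate is
    undefined for them.
    """
    true_pos, actual_pos = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            actual_pos[g] = actual_pos.get(g, 0) + 1
            true_pos[g] = true_pos.get(g, 0) + (p == 1)
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


# Made-up labels and predictions for two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
tpr = true_positive_rates(y_true, y_pred, groups)
```

Here qualified members of group `"b"` are correctly identified half as often as those of group `"a"`, so true positive rate parity does not hold.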

Equalised odds (group fairness)

An outcome is fair if false positive and true positive rates are equal across groups. In other words, both the probability of incorrect positive predictions and the probability of correct positive predictions should be the same across protected and privileged groups.[@hardt2016]-[@verma2018] This approach is motivated by the position that sensitive groups and advantaged groups should have similar error rates in outcomes of algorithmic decisions.
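
The two conditions can be checked together by computing the true positive rate and the false positive rate for each group and reporting the largest gap in each. The sketch below uses hypothetical function names and made-up data, and it assumes every group contains at least one actual positive and one actual negative.

```python
def rates_by_group(y_true, y_pred, groups):
    """Return {group: (true positive rate, false positive rate)}.

    Assumes each group has at least one actual positive and one actual
    negative; otherwise a rate would be undefined.
    """
    counts = {}
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if t == 1:
            c["tp" if p == 1 else "fn"] += 1
        else:
            c["fp" if p == 1 else "tn"] += 1
    return {
        g: (c["tp"] / (c["tp"] + c["fn"]), c["fp"] / (c["fp"] + c["tn"]))
        for g, c in counts.items()
    }


def equalised_odds_gaps(y_true, y_pred, groups):
    """Return (largest TPR gap, largest FPR gap) across groups."""
    rates = rates_by_group(y_true, y_pred, groups)
    tprs = [r[0] for r in rates.values()]
    fprs = [r[1] for r in rates.values()]
    return max(tprs) - min(tprs), max(fprs) - min(fprs)


# Made-up labels and predictions for two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
tpr_gap, fpr_gap = equalised_odds_gaps(y_true, y_pred, groups)
```

Equalised odds holds only when both gaps are zero, or within whatever tolerance the project team has agreed and justified.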

Positive predictive value parity (group fairness)

An outcome is fair if the rates of positive predictive value (the fraction of correctly predicted positive cases out of all predicted positive cases) are equal across sensitive and advantaged groups.[@chouldechova2017] Outcome fairness is defined here in terms of a parity of precision, where the probability of members from different groups actually having the quality they are predicted to have is the same across groups.
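Parity of precision can be illustrated in a similar way. The sketch below, assuming binary labels and predictions, computes the positive predictive value for each group. The function names and toy data are hypothetical, and the sketch assumes each group receives at least one positive prediction.

```python
def positive_predictive_value(y_true, y_pred):
    """Share of predicted positives that are actual positives (precision)."""
    predicted_positive = [t for t, p in zip(y_true, y_pred) if p == 1]
    # Assumes the group has at least one positive prediction.
    return sum(predicted_positive) / len(predicted_positive)


def ppv_by_group(y_true, y_pred, groups):
    """Compute positive predictive value separately for each group."""
    result = {}
    for g in sorted(set(groups)):
        pairs = [(t, p) for t, p, grp in zip(y_true, y_pred, groups) if grp == g]
        result[g] = positive_predictive_value(
            [t for t, _ in pairs], [p for _, p in pairs]
        )
    return result


y_true = [1, 0, 1, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

ppv = ppv_by_group(y_true, y_pred, groups)
print(ppv)
```

In this toy data, a positive prediction is less reliable for members of group a than for members of group b, so positive predictive value parity does not hold.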

Individual fairness (individual fairness)

An outcome is fair if it treats individuals with similar relevant qualifications similarly. This approach relies on the establishment of a similarity metric that shows the degree to which pairs of individuals are alike with regard to a specific task.[@dwork2012]-[@kusner2017]
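One common way of formalising this idea is a Lipschitz-style condition: the difference between two individuals' outcomes should not exceed a constant times their distance under the similarity metric. The sketch below is an illustration under that assumption. The distance function, the constant, and the toy data are hypothetical, and in practice the choice of similarity metric is the hard and contestable part.

```python
from itertools import combinations


def lipschitz_violations(features, scores, distance, lipschitz_constant=1.0):
    """Return index pairs whose score gap exceeds the allowed distance bound."""
    violations = []
    for i, j in combinations(range(len(features)), 2):
        allowed = lipschitz_constant * distance(features[i], features[j])
        if abs(scores[i] - scores[j]) > allowed:
            violations.append((i, j))
    return violations


def absolute_difference(x, y):
    """Hypothetical task-specific similarity metric on a single feature."""
    return abs(x - y)


# Toy data: one task-relevant qualification score per individual.
features = [0.50, 0.52, 0.90]
scores = [0.40, 0.80, 0.75]

violations = lipschitz_violations(features, scores, absolute_difference)
print(violations)
```

Here individuals 0 and 1 have nearly identical qualifications but receive very different scores, so that pair is flagged.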

Counterfactual fairness (individual fairness)

An outcome is fair if an automated decision made about an individual belonging to a sensitive group would have been the same were that individual a member of a different group in a closest possible alternative (or counterfactual) world.[@kusner2017] Like the individual fairness approach, this method of defining fairness focuses on the specific circumstances of an affected decision subject, but, by using the tools of contrastive explanation, it moves beyond individual fairness insofar as it brings out the causal influences behind the algorithmic output. It also presents the possibility of offering the subject of an automated decision knowledge of what factors, if changed, could have led to a different outcome. This could provide them with actionable recourse to change an unfavourable decision.
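The idea can be illustrated with a deliberately simplified toy causal model. The sketch below assumes a single observed feature generated as `x = ALPHA * a + u`, where `a` is a binary protected attribute and `u` is an unobserved individual factor. The model, the constant `ALPHA`, the thresholds, and both predictors are hypothetical. Real applications require a justified causal model and typically reason over a distribution of latent factors rather than a single recovered value.

```python
ALPHA = 2.0  # assumed causal effect of the protected attribute on the feature


def abduct_latent(x, a):
    """Recover the individual's latent factor u from the observed x and a."""
    return x - ALPHA * a


def counterfactual_feature(x, a, a_cf):
    """Feature value the same individual would have had under attribute a_cf."""
    return ALPHA * a_cf + abduct_latent(x, a)


def predictor_on_observed(x, a):
    """Hypothetical predictor that thresholds the observed feature."""
    return 1 if x >= 5.0 else 0


def predictor_on_latent(x, a):
    """Hypothetical predictor that thresholds the recovered latent factor."""
    return 1 if abduct_latent(x, a) >= 4.0 else 0


def is_counterfactually_stable(predictor, x, a):
    """Check whether the decision is unchanged in the counterfactual world."""
    a_cf = 1 - a
    x_cf = counterfactual_feature(x, a, a_cf)
    return predictor(x, a) == predictor(x_cf, a_cf)


stable_observed = is_counterfactually_stable(predictor_on_observed, x=4.5, a=0)
stable_latent = is_counterfactually_stable(predictor_on_latent, x=4.5, a=0)
print(stable_observed, stable_latent)
```

In this toy model, the predictor that thresholds the observed feature changes its decision when the individual's group membership is counterfactually altered, while the predictor based on the latent factor does not.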

System implementation fairness¶

When your project team is approaching the beta stage, you should begin to build out your plan for implementation training and support. This plan should include adequate preparation for the responsible and unbiased deployment of the AI system by its on-the-ground users. Automated decision-support systems present novel risks of bias and misapplication at the point of delivery, so special attention should be paid to preventing harmful or discriminatory outcomes at this critical juncture of the AI project lifecycle. In order to design an optimal regime of implementer training and support, you should pay special attention to the unique pitfalls of bias-in-use to which the deployment of AI technologies gives rise. These can be loosely classified as decision-automation bias (more commonly just ‘automation bias’) and automation-distrust bias:

Decision-Automation Bias I (over-reliance)

Users of automated decision-support systems may become hampered in their critical judgement, rational agency, and situational awareness as a result of their faith in the perceived objectivity, neutrality, certainty, or superiority of the AI system (Gaube et al., 2021). This may lead to over-reliance or errors of omission, where implementers lose the capacity to identify and respond to the faults, errors, or deficiencies that might arise over the course of the use of an automated system because they become complacent and overly deferential to its directions and cues.[@bussone2015]

Decision-Automation Bias II (over-compliance)

Decision-automation bias may also lead to over-compliance or errors of commission, where implementers defer to the perceived infallibility of the system and thereby become unable to detect problems emerging from its use because they fail to check its results against available information. Both over-reliance and over-compliance may lead to what is known as out-of-loop syndrome, where the degradation of the role of human reason and the de-skilling of critical thinking hamper the user’s ability to complete the tasks that have been automated. This condition may bring about a loss of the ability to respond to system failure and may lead both to safety hazards and to dangers of discriminatory harm. To combat risks of decision-automation bias, you should operationalise strong regimes of accountability at the site of user deployment to steer human decision-agents to act on the basis of good reasons, solid inferences, and critical judgement.

Automation-Distrust Bias

At the other extreme, users of an automated decision-support system may tend to disregard its salient contributions to evidence-based reasoning either as a result of their distrust or scepticism about AI technologies in general or as a result of their over-prioritisation of the importance of prudence, common sense, and human expertise.[@longoni2019] An aversion to the non-human and amoral character of automated systems may also influence decision subjects’ hesitation to consult these technologies in high impact contexts such as healthcare, transportation, and law.[@dietvorst2015] ‘Research shows that people often prefer humans’ forecasts to algorithms’ forecasts,[@diab2011]-[@eastwood2012] more strongly weigh human input than algorithmic input,[@onkal2009]-[@promberger2006] and more harshly judge professionals who seek out advice from an algorithm rather than from a human.’[@shaffer2013]-[@dietvorst2015]

Taking account of the context of impacted individuals in system implementation¶

In cases where you are utilising a decision-support AI system that draws on statistical inferences to determine outcomes which affect individual persons (e.g., a predictive risk model that helps an adult social care worker determine an optimal path to caring for an elderly patient), fair implementation processes should include considerations of the specific context of each impacted individual.[@binns2017]-[@binns2018]-[@binns2019]-[@eiselson2013] Any application of this kind of system’s recommendation will be based on statistical generalisations, which pick up relationships between the decision recipient’s input data and patterns or trends that the AI model has extracted from the underlying distribution of that model’s original dataset. Such generalisations will be predicated on inferences about a decision subject’s future behaviours (or outcomes) that are based on population-level correlations with the historical characteristics and attributes of the members of groups to which that person belongs rather than on the specific qualities of the person themself.

Fair and equitable treatment of decision subjects entails that their unique life circumstances and individual contexts be taken into account in decision-making processes that are supported by AI-enabled statistical generalisations. For this reason, you should train your implementers to think contextually and holistically about how these statistical generalisations apply to the specific situation of the decision recipient. This training should involve preparing implementers to work with an active awareness of the socio-technical aspect of implementing AI decision-assistance technologies from an integrative and human-centred point of view. You should train implementers to apply the statistical results to each particular case with appropriate context-sensitivity and ‘big picture’ sensibility. This means that the dignity they show to decision subjects can be supported by interpretive understanding, reasonableness, and empathy.

Ecosystem fairness¶

The AI project lifecycle does not exist in isolation from, or independent of, the wider social system of economic, legal, cultural, and political structures or institutions in which the production and use of AI systems take place. Rather, because it is embedded in these structures and institutions, the policies, norms, and procedures through which such structures and institutions influence human action also influence the AI project lifecycle itself. Inequities and biases at this ecosystem level can steer or shape AI research and innovation agendas in ways that can generate inequitable outcomes for protected, marginalised, vulnerable, or disadvantaged social groups. Such ecosystem-level inequities and biases may originate in and further reinforce asymmetrical power structures, unfair market dynamics, and skewed research funding schemes that favour or bring disproportionate benefit to those in the majority, or those who wield disproportionate power in society, at the cost of those who are disparately impacted by the discriminatory outcomes of the design, development, and use of AI technologies.

Ecosystem-level inequities can occur across a wide range of AI research and innovation contexts. For instance, when AI-enabled health interventions such as mobile-phone-based symptom checker apps or remote AI-assisted medical triaging or monitoring are designed without regard for the barriers to access faced by protected, vulnerable, or disadvantaged groups, they will disproportionately benefit users from other, more advantaged groups. Likewise, where funding for the development of AI technologies that significantly affect the public interest is concentrated in the hands of firms or vendors who solely pursue commercial or financial gain, this may result in exclusionary research and innovation environments, AI systems that are built without due regard for broad fairness and equity impacts, and limitations on the development and deployment of wide-scale, publicly beneficial technology infrastructure. Moreover, widespread structural and institutional barriers to diversity and inclusion can create homogeneity on AI project teams (and among organisational policy owners) that has consequential fairness impacts on application decisions, resource allocation choices, and system deployment strategies.

The concept of ecosystem fairness highlights the importance of mitigating the range of inequity-generating path dependencies that originate at the ecosystem level and that are often neglected by or omitted from analyses of AI project lifecycles. Ecosystem fairness therefore focuses both on rectifying the social structures and institutions that engender indirect discrimination and on addressing the structural and institutional changes needed for the corrective modification of social patterns that engender discriminatory impacts and socio-economic disadvantage. In this way, ecosystem fairness involves the transformation of unjust economic, legal, cultural, and political structures or institutions with the aim of the universal realisation of equitable social arrangements.