Transparency, Explainability, and CARE & ACT Principles

Chapter Outline

- Transparency and Explainability

- Consider context

- Anticipate impacts

- Reflect on purpose, positionality, and power

- Engage inclusively

- Act transparently and responsibly

Chapter Summary

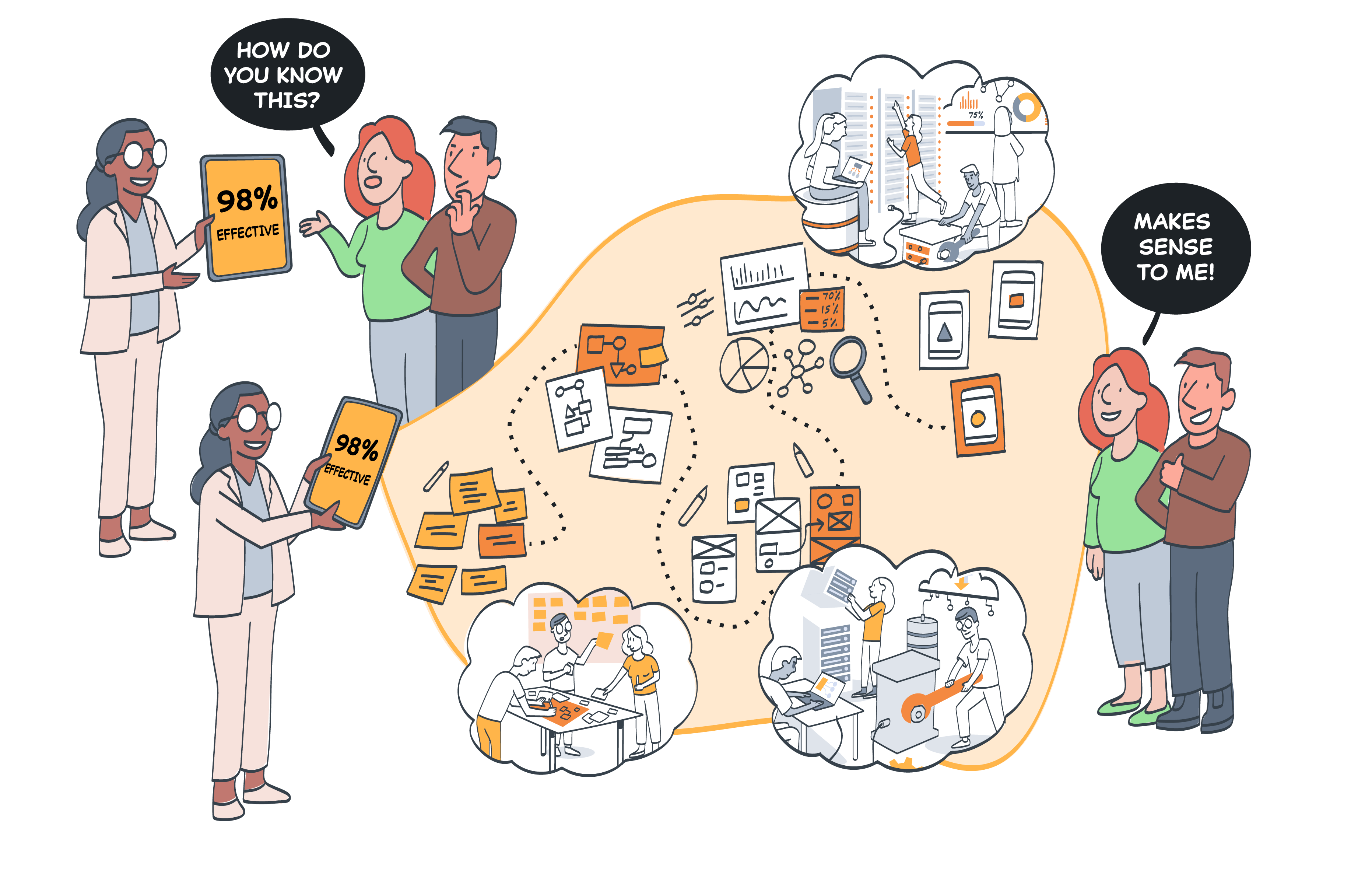

This final chapter of the course is divided into two sections. First, we will explore the concepts of transparency and explainability in AI, looking at how the two differ and at the various types of AI explanation.

In the second half of the chapter, we will bring everything together by reviewing the CARE & ACT principles. These principles serve as a practical tool to help ensure that AI systems are designed and developed ethically and responsibly. They are:

- Consider context

- Anticipate impacts

- Reflect on purpose, positionality, and power

- Engage inclusively

- Act transparently and responsibly

Learning Objectives

- Familiarise yourself with the concepts of transparency and explainability in the context of AI systems, and understand how they differ.

- Understand the difference between process-based and outcome-based explanations.

- Learn about the different types of explanation that may be required for AI-assisted decisions, and what each type involves.

- Familiarise yourself with the CARE & ACT principles and how they can serve as a practical tool for thinking about the ethical and responsible design and development of AI systems.